ABSTRACT

Data Mining (DM) currently represents a key element for improving the acquisition of knowledge and for providing proper feedback in educational environments. In this field, as in others, psychomotor skills offer a unique way to advance the knowledge of human behaviour. Our research applies to two equally promising but different domains. On the one hand, we would like to improve the learning of psychomotor skills in education through different DM techniques; this may include learning martial arts or supporting the acquisition of locomotor abilities. On the other hand, we would like to extend our DM research well beyond this educational field. Thus, we can evaluate other users, such as patients, with the aim of improving the re-learning of motor capabilities during rehabilitation, or even of detecting cognitive impairments by analysing slight psychomotor alterations at early stages using DM. The latter includes gait analysis, which is currently used for screening but rarely for prediction. Although our research is still at an early stage, we are following the principles set in previous research, such as those included in our intelligent Expertise Level Assessment (iELA) method.

1. INTRODUCTION

In order to properly apply state-of-the-art DM techniques for personalised learning, we have developed a taxonomy that distinguishes between mutually-exclusive and non-mutually-exclusive movements [31]. This differentiation is particularly relevant for psychomotor activity analysis, since learners should be modelled according to subtle details that are often not obvious. The first, mutually-exclusive, group includes most Human Activity Recognition (HAR) systems, which basically use DM and Machine Learning (ML) techniques to analyse human movements that cannot be executed at the same time (running, walking, sleeping, etc.). In our case, it is essential to be able to analyse non-mutually-exclusive movements, that is, to distinguish between different behaviours that are similar to each other. This is the case when analysing distinct individual gait records or matching several gaits gathered from different users or patients. To recognise these specific non-mutually-exclusive human movements, we can use inertial sensors, which often collect the information in the form of Time-Series (TS), like the ones depicted in Fig. 1.
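Inertial TS such as those in Fig. 1 are commonly split into fixed-length, possibly overlapping windows before features are extracted or a classifier is applied. The following minimal Python sketch illustrates this segmentation step; the function name `sliding_windows`, the window size, the step and the sample values are our own hypothetical choices, not parameters taken from iELA [31].

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one 3-axis reading, e.g. (ax, ay, az)

def sliding_windows(samples: Sequence[Sample], size: int, step: int) -> List[List[Sample]]:
    """Split a multi-axis TS into fixed-length windows; windows overlap when step < size."""
    if size <= 0 or step <= 0:
        raise ValueError("size and step must be positive")
    return [list(samples[start:start + size])
            for start in range(0, len(samples) - size + 1, step)]

# Hypothetical accelerometer readings in m/s^2 (five samples).
acc = [(0.0, 0.1, 9.8), (0.2, 0.1, 9.7), (0.4, 0.0, 9.9),
       (0.1, -0.1, 9.8), (0.0, 0.0, 9.8)]
windows = sliding_windows(acc, size=3, step=1)
print(len(windows))  # 3 overlapping windows of 3 samples each
```

Trailing samples that do not fill a complete window are dropped in this sketch; padding them instead would be an equally valid design choice.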

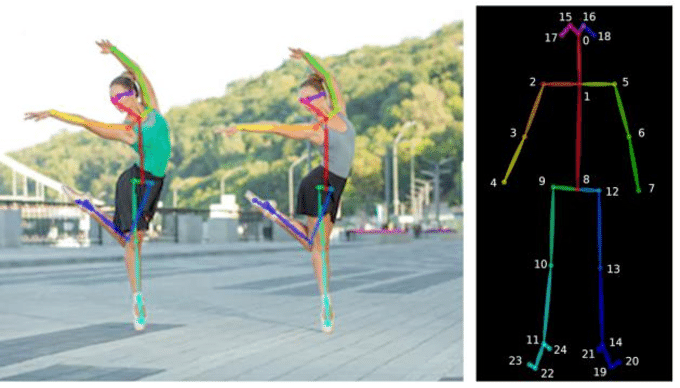

Fig. 2 depicts another method suitable for breaking down non-mutually-exclusive human movements, in this case utilising AI-based video analytics.

Many other sensors may be used to gather information on mutually-exclusive and non-mutually-exclusive human movements, such as the Microsoft Kinect sensor [29] or LIDAR1 sensors [20]. Whatever the method and sensors used, the aim of the research presented in this paper is to analyse, with different DM techniques, several data sets gathered from the execution of human movements, in order to anticipate psychomotor learning behaviours and support appropriate feedback or analysis.

This research may also have a specific impact on achieving well-being through active ageing, since the correct execution of certain movements at certain ages allows us to take care of ourselves properly. Therefore, DM techniques that allow us to automatically assess whether we perform certain motor movements correctly, or whether we preserve certain psychomotor skills, are essential for our comfort and well-being.

2. RELATED RESEARCH

The first steps of our research rely on the iELA method presented in [31], which uses quaternions, a specific group of hyper-complex numbers, to fuse the transformed inertial data gathered during the practice of different martial arts. iELA uses DM to provide an innovative method to infer performance level, showing its relevance in assisting psychomotor learning and educational systems. iELA follows the principles set in [34], which details how different sensors, together with a specific methodology, can support psychomotor learning processes in educational environments. These foundations are also used in [35, 12, 9, 18], since motor tasks can be consolidated into memory through repetition, creating a long-term neuromuscular memory for specific tasks.
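Since quaternions are central to iELA, the following minimal Python sketch recalls the two operations on which quaternion-based orientation handling usually rests: the Hamilton product and the rotation of a 3D vector by a unit quaternion. It is a generic illustration written for this text, with hypothetical helper names (`q_mul`, `q_conj`, `from_axis_angle`, `rotate`), not code from the iELA method.

```python
import math
from typing import Tuple

Quaternion = Tuple[float, float, float, float]  # (w, x, y, z)
Vector = Tuple[float, float, float]

def q_mul(a: Quaternion, b: Quaternion) -> Quaternion:
    """Hamilton product a * b (non-commutative)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def q_conj(q: Quaternion) -> Quaternion:
    """Conjugate; equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def from_axis_angle(axis: Vector, angle: float) -> Quaternion:
    """Unit quaternion for a rotation of `angle` radians about `axis`."""
    ax, ay, az = axis
    s = math.sin(angle / 2.0) / math.sqrt(ax * ax + ay * ay + az * az)
    return (math.cos(angle / 2.0), ax * s, ay * s, az * s)

def rotate(v: Vector, q: Quaternion) -> Vector:
    """Rotate vector v by unit quaternion q as q * (0, v) * conj(q)."""
    _, x, y, z = q_mul(q_mul(q, (0.0, *v)), q_conj(q))
    return (x, y, z)

q = from_axis_angle((0.0, 0.0, 1.0), math.pi / 2)  # 90 degrees about z
print(rotate((1.0, 0.0, 0.0), q))  # approximately (0.0, 1.0, 0.0)
```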

Other intelligent tutoring systems related to psychomotor skills can be found in [24], although in this case the authors use physiological sensors. Regarding video analysis, [28] introduces the design, development, deployment and evaluation of video games to support locomotor skill acquisition in a classroom setting. Other examples relate to learning to play the piano [8], practising martial arts [33], performing dance steps [33, 16] or improving sport technique [7], among others.

Some studies, such as [25, 37, 36, 15], use video analysis techniques for human pose estimation. In contrast, the iELA foundations rely on inertial data gathered with IMU2 sensors, as in [22, 32, 13, 5, 21]. iELA analyses the data collected in the form of TS, as in [40], but using two different approaches: extracting features, as in [3, 4], and using Convolutional Neural Networks (CNNs), as in [19, 14], taking into account that TS have practically the same topology as images, as mentioned in [6]. Other studies use other DM techniques [23], machine learning methods [26] or neural networks [39, 2, 11].
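The feature-extraction approach can be illustrated with a few classic per-axis statistics computed over one window of inertial data. This is a minimal sketch assuming each window is a list of multi-axis samples; the feature set (mean, standard deviation, minimum, maximum, RMS) and the name `window_features` are our own hypothetical choices, and libraries such as TSFEL [4] provide much richer feature sets.

```python
import math
import statistics
from typing import Dict, Sequence

def window_features(window: Sequence[Sequence[float]]) -> Dict[str, float]:
    """Compute simple per-axis statistical features for one window of samples."""
    features: Dict[str, float] = {}
    for axis, column in enumerate(zip(*window)):  # transpose: samples -> axes
        values = list(column)
        features[f"mean_{axis}"] = statistics.fmean(values)
        features[f"std_{axis}"] = statistics.pstdev(values)  # population std dev
        features[f"min_{axis}"] = min(values)
        features[f"max_{axis}"] = max(values)
        features[f"rms_{axis}"] = math.sqrt(sum(v * v for v in values) / len(values))
    return features

# Hypothetical 2-axis window with three samples.
window = [(1.0, 0.0), (3.0, 0.0), (2.0, 0.0)]
f = window_features(window)
print(f["mean_0"], f["max_0"])  # 2.0 3.0
```

Each window thus becomes a fixed-length feature vector that a conventional classifier can consume, which is the main practical difference from the CNN route, where the raw windows are used directly.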

Combining several methods to estimate the human pose accurately is also common, as in [27, 38, 12], which use two different types of data: the data provided by the IMU and the data gathered from video footage.

In addition to the analysis of psychomotor activities in Educational DM systems, the detection and analysis of the human pose in other research areas can also take advantage of the DM techniques described above. For instance, [1] provides automated movement assessment for stroke rehabilitation using video analysis. It is also worth noting that pre-dementia stages (motoric cognitive risk syndrome) are in some cases characterised by slow gait, as reported in [10]. Consequently, this research may have an impact on other areas related to psychomotor aspects and could therefore also provide tools to promote innovation in active and healthy ageing, increasing healthy life expectancy3.
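Gait analysis of this kind usually starts by detecting individual steps in the inertial signal. The sketch below is a deliberately naive illustration, not a validated clinical method: it assumes a single accelerometer-magnitude signal sampled at a known rate, detects local maxima above a hypothetical threshold with a hypothetical minimum gap between steps, and derives the average cadence. Cadence alone is not gait speed, which additionally requires step or stride length.

```python
import math
from typing import List, Sequence

def detect_steps(magnitude: Sequence[float], threshold: float, min_gap: int) -> List[int]:
    """Return indices of local maxima above `threshold`, at least `min_gap` samples apart."""
    steps: List[int] = []
    for i in range(1, len(magnitude) - 1):
        is_peak = magnitude[i - 1] < magnitude[i] >= magnitude[i + 1]
        far_enough = not steps or i - steps[-1] >= min_gap
        if is_peak and magnitude[i] > threshold and far_enough:
            steps.append(i)
    return steps

def cadence_steps_per_min(step_indices: Sequence[int], fs_hz: float) -> float:
    """Average cadence (steps/min) from the mean interval between detected steps."""
    if len(step_indices) < 2:
        return 0.0
    mean_interval_s = (step_indices[-1] - step_indices[0]) / (len(step_indices) - 1) / fs_hz
    return 60.0 / mean_interval_s

# Synthetic example: 5 s of a 2 Hz "step" oscillation sampled at 50 Hz.
fs = 50.0
signal = [9.81 + 2.0 * math.sin(2 * math.pi * 2.0 * i / fs) for i in range(250)]
steps = detect_steps(signal, threshold=10.5, min_gap=10)  # hypothetical parameters
print(len(steps), cadence_steps_per_min(steps, fs))  # 10 120.0
```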

3. EXPECTED CONTRIBUTION

Regarding the detection of the human pose in educational environments, none of the examples described above, apart from iELA, evaluates the level of performance. As iELA sets the principles for evaluating the level of performance of complex movements in martial arts, our aim is to extrapolate iELA to other domains, including other educational domains or health-related behaviours. The use of quaternions to fuse inertial information, together with other sources such as video footage, may provide innovative mechanisms for educational and non-educational environments that require the analysis of psychomotor skills.

Although one of our goals is to merge information coming from different sensors, including video footage, we need to deal carefully with one of the main drawbacks of using a video-based approach for human activity classification: privacy [17]. This will be particularly relevant for fulfilling IDEA (inclusion, diversity, equity and accessibility) approaches, as well as for satisfying the guidelines for trustworthy AI, which include transparency, explainability, fairness, robustness and the aforementioned privacy.

In this research, we will also consider the affective state of the participants during data gathering, in relation to the execution of the movements under study.

4. RESEARCH QUESTIONS

The affirmative answer to the iELA research question addressed in the Master's Thesis [31]:

- Can we use this DM-driven analysis (iELA) to automatically classify practitioners according to their expertise level?

represents the starting point for defining the next research question, to be addressed in the ongoing Doctoral Thesis:

- Can we use DM approaches to assess the level of expertise achieved while performing motor activities in different psychomotor learning schemes (from martial arts techniques to physical movements that benefit active ageing), thus achieving a domain transfer?

which will be the main objective of this research.

5. PROPOSED METHODOLOGY

Our proposed methodology is based on iELA, a DM method for intelligent expertise level assessment that analyses high volumes of inertial data. When inertial data are broken down without fusing them into quaternions, we cannot clearly distinguish whether the representation corresponds to an expert user (which may be extrapolated to a patient without a pathology) performing a certain motor activity, as depicted in Fig. 3. When this information is fused into quaternions (see Fig. 4), we obtain clear information about how the motor activity was performed. Conversely, if we analyse the same movement executed by a beginner (which may also be extrapolated to a patient with a pathology), we obtain a different depiction, as in Fig. 5.
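The paper does not detail how iELA fuses inertial data into quaternions, so the following is only one possible illustration, not the iELA pipeline: it integrates gyroscope angular velocity (rad/s, body frame) into a sequence of orientation quaternions, whose four components over time form a TS that can then be analysed. Drift correction using accelerometer or magnetometer data, as done by complementary or Madgwick-style filters, is deliberately omitted, and all names and input values are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Quaternion = Tuple[float, float, float, float]  # (w, x, y, z)

def q_mul(a: Quaternion, b: Quaternion) -> Quaternion:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def integrate_gyro(gyro: Sequence[Tuple[float, float, float]], dt: float,
                   q0: Quaternion = (1.0, 0.0, 0.0, 0.0)) -> List[Quaternion]:
    """Dead-reckon orientation from body-frame angular velocity samples (rad/s)."""
    q = q0
    trajectory = [q]
    for wx, wy, wz in gyro:
        rate = math.sqrt(wx * wx + wy * wy + wz * wz)
        if rate > 0.0:
            half_angle = rate * dt / 2.0
            s = math.sin(half_angle) / rate  # scales omega to axis * sin(angle / 2)
            dq = (math.cos(half_angle), wx * s, wy * s, wz * s)
            q = q_mul(q, dq)  # body-frame increment: right-multiply
            norm = math.sqrt(sum(c * c for c in q))
            q = (q[0] / norm, q[1] / norm, q[2] / norm, q[3] / norm)  # limit numerical drift
        trajectory.append(q)
    return trajectory

# Hypothetical input: constant rotation of pi/2 rad/s about z for 1 s at 100 Hz.
gyro = [(0.0, 0.0, math.pi / 2)] * 100
traj = integrate_gyro(gyro, dt=0.01)
print(len(traj), [round(c, 4) for c in traj[-1]])  # 101 [0.7071, 0.0, 0.0, 0.7071]
```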

This approach is based on analysing high-quality datasets gathered while executing human psychomotor activities. The DM analysis of these data will follow the iELA approaches and may include the use of CNNs and the examination of features extracted from the TS.
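For the CNN-based approach, the core operation that lets TS be treated like images is a convolution sliding along the time axis. The minimal sketch below computes the output of a single convolutional filter (a "valid" cross-correlation across all channels followed by a ReLU) on a multichannel TS. The function name `conv1d_relu` and the kernel weights are hypothetical and hand-picked for illustration only; in a real CNN the weights are learned, and a deep learning framework would normally be used instead of plain Python.

```python
from typing import List, Sequence

def conv1d_relu(signal: Sequence[Sequence[float]],
                kernel: Sequence[Sequence[float]],
                bias: float = 0.0) -> List[float]:
    """Apply one convolutional filter to a multichannel TS.

    signal: [channels][time]; kernel: [channels][width].
    Returns the ReLU-activated 'valid' cross-correlation (no padding, stride 1).
    """
    if len(signal) != len(kernel):
        raise ValueError("signal and kernel must have the same number of channels")
    width = len(kernel[0])
    length = len(signal[0])
    output: List[float] = []
    for t in range(length - width + 1):
        acc = bias
        for channel, weights in zip(signal, kernel):
            acc += sum(channel[t + k] * w for k, w in enumerate(weights))
        output.append(max(0.0, acc))  # ReLU activation
    return output

# Hypothetical 2-channel TS (e.g. two quaternion components over 5 samples).
ts = [[0.0, 1.0, 2.0, 3.0, 4.0],
      [0.5, 0.5, 0.5, 0.5, 0.5]]
# Hand-picked "rising edge" filter on channel 0; channel 1 is ignored.
kernel = [[-1.0, 0.0, 1.0],
          [0.0, 0.0, 0.0]]
print(conv1d_relu(ts, kernel))  # [2.0, 2.0, 2.0]
```

A CNN stacks many such filters with learned weights; approaches such as ROCKET [14] instead use large numbers of random convolutional kernels whose outputs feed a linear classifier.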

6. CONCLUSIONS

Research in the analysis of psychomotor skills represents an important challenge, especially when going far beyond the distinction of mutually-exclusive movements discussed in this article. At this stage we are looking for feedback to support our research, with the aim of demonstrating how our iELA method provides an effective approach to the treatment and analysis of different psychomotor behaviours in educational, learning or even health scenarios.

The DM techniques developed in this research may have several fields of application in education, and can also be useful for achieving the benefits of active ageing.

7. ACKNOWLEDGMENTS

The work reported is framed in the project "INTelligent INTra-subject development approach to improve actions in AFFect-aware adaptive educational systems" (INT2AFF) led by Dr. Santos at UNED and funded by the Spanish Ministry of Science, Innovation and Universities, the Spanish Agency of Research and the European Regional Development Fund (ERDF) under Grant PGC2018-102279-B-I00, specifically within the scenario "SC4-motor skills", which focuses on analysing the influence of emotions in motor skills learning.

8. REFERENCES

- T. Ahmed, K. Thopalli, T. Rikakis, P. Turaga, A. Kelliher, J.-B. Huang, and S. L. Wolf. Automated movement assessment in stroke rehabilitation. Frontiers in Neurology, 12:720650, August 2021.

- D. Anguita, A. Ghio, L. Oneto, X. Parra, and J. L. Reyes-Ortiz. Human activity recognition on smartphones using a multiclass hardware-friendly support vector machine. In J. Bravo, R. Hervás, and M. Rodríguez, editors, Ambient Assisted Living and Home Care, pages 216–223, Berlin, Heidelberg, 2012. Springer Berlin Heidelberg.

- A. Avci, S. Bosch, M. Marin-Perianu, R. Marin-Perianu, and P. Havinga. Activity recognition using inertial sensing for healthcare, wellbeing and sports applications: A survey. page 11, 2010.

- M. Barandas, D. Folgado, L. Fernandes, S. Santos, M. Abreu, P. Bota, H. Liu, T. Schultz, and H. Gamboa. TSFEL: Time series feature extraction library. SoftwareX, 11:100456, 2020.

- A. Bayat, M. Pomplun, and D. A. Tran. A study on human activity recognition using accelerometer data from smartphones. Procedia Computer Science, 34:450–457, 2014.

- Y. Bengio, A. Courville, and P. Vincent. Representation learning: A review and new perspectives. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(8):1798–1828, 2013.

- V. Camomilla, E. Bergamini, S. Fantozzi, and G. Vannozzi. Trends supporting the in-field use of wearable inertial sensors for sport performance evaluation: A systematic review. Sensors, 18(3):873, 2018.

- B. Caramiaux, F. Bevilacqua, C. Palmer, and M. Wanderley. Individuality in piano performance depends on skill learning. In Proceedings of the 4th International Conference on Movement Computing, pages 1–7. ACM, 2017.

- A. Casas-Ortiz. Capturing, modelling, analyzing and providing feedback in martial arts with artificial intelligence to support psychomotor learning activities. 2020. Retrieved June 15, 2021, from http://www.ia.uned.es/docencia/posgrado/master-tfm-archivo.html.

- Y. Cedervall, A. M. Stenberg, H. B. Åhman, V. Giedraitis, F. Tinmark, L. Berglund, K. Halvorsen, M. Ingelsson, E. Rosendahl, and A. C. Åberg. Timed up-and-go dual-task testing in the assessment of cognitive function: A mixed methods observational study for development of the UDDGait protocol. International Journal of Environmental Research and Public Health, 17(5):1715, 2020.

- A. Choi, H. Jung, and J. H. Mun. Single inertial sensor-based neural networks to estimate COM-COP inclination angle during walking. Sensors, 19(13):2974, 2019.

- A. Corbí and O. C. Santos. MyShikko: Modelling knee walking in aikido practice. In Adjunct Publication of the 26th Conference on User Modeling, Adaptation and Personalization, pages 217–218. ACM, 2018.

- O. Dehzangi and V. Sahu. IMU-based robust human activity recognition using feature analysis, extraction, and reduction. In 2018 24th International Conference on Pattern Recognition (ICPR), pages 1402–1407. IEEE, 2018.

- A. Dempster, F. Petitjean, and G. I. Webb. ROCKET: exceptionally fast and accurate time series classification using random convolutional kernels. Data Mining and Knowledge Discovery, 34(5):1454–1495, 2020.

- F. Demrozi, G. Pravadelli, A. Bihorac, and P. Rashidi. Human activity recognition using inertial, physiological and environmental sensors: a comprehensive survey. IEEE Access, 8:210816–210836, 2020.

- A. Dias Pereira dos Santos, K. Yacef, and R. Martinez-Maldonado. Let’s dance: How to build a user model for dance students using wearable technology. In Proceedings of the 25th Conference on User Modeling, Adaptation and Personalization, UMAP ’17, page 183–191, New York, NY, USA, 2017. Association for Computing Machinery.

- J. Donahue, L. A. Hendricks, S. Guadarrama, M. Rohrbach, S. Venugopalan, T. Darrell, and K. Saenko. Long-term recurrent convolutional networks for visual recognition and description. In 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 2625–2634, 2015.

- J. Echeverria and O. C. Santos. Kumitron: A multimodal psychomotor intelligent learning system to provide personalized support when training karate combats. 2021. First International Workshop on Multimodal Artificial Intelligence in Education (MAIEd 2021) held in conjunction with the AIED 2021. To be published in CEUR.

- H. I. Fawaz, B. Lucas, G. Forestier, C. Pelletier, D. F. Schmidt, J. Weber, G. I. Webb, L. Idoumghar, P.-A. Muller, and F. Petitjean. InceptionTime: Finding AlexNet for time series classification. Data Mining and Knowledge Discovery, 34(6):1936–1962, 2020.

- M. Fürst, G. Shriya T. P., R. Schuster, O. Wasenmüller, and D. Stricker. HPERL: 3D human pose estimation from RGB and LiDAR. 2020.

- N. Golestani and M. Moghaddam. Human activity recognition using magnetic induction-based motion signals and deep recurrent neural networks. Nature Communications, 11(1):1551, March 2020.

- E. A. Heinz, K. S. Kunze, M. Gruber, D. Bannach, and P. Lukowicz. Using wearable sensors for real-time recognition tasks in games of martial arts - an initial experiment. In 2006 IEEE Symposium on Computational Intelligence and Games, pages 98–102. IEEE, 2006.

- C. Jobanputra, J. Bavishi, and N. Doshi. Human activity recognition: A survey. Procedia Computer Science, 155:698–703, 2019.

- J. W. Kim, C. Dancy, and R. A. Sottilare. Towards using a physio-cognitive model in tutoring for psychomotor tasks. page 14, 2018. Retrieved May 10, 2021, from https://digitalcommons.bucknell.edu/cgi/viewcontent.cgi?article=1055&context=fac_conf.

- J. Lee and H. Jung. TUHAD: Taekwondo unit technique human action dataset with key frame-based CNN action recognition. Sensors, 20(17):4871, 2020.

- A. Mannini and A. M. Sabatini. Machine learning methods for classifying human physical activity from on-body accelerometers. Sensors, 10(2):1154–1175, 2010.

- T. v. Marcard, G. Pons-Moll, and B. Rosenhahn. Human pose estimation from video and IMUs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 38(8):1533–1547, 2016.

- J. McGann, J. Issartel, L. Hederman, and O. Conlan. Hop.skip.jump.games: The effect of “principled” exergameplay on children’s locomotor skill acquisition. British Journal of Educational Technology, 51, 11 2019.

- S. Obdrzalek, G. Kurillo, F. Ofli, R. Bajcsy, E. Seto, H. Jimison, and M. Pavel. Accuracy and robustness of kinect pose estimation in the context of coaching of elderly population. Conference proceedings : ... Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Conference, 2012:1188–93, 08 2012.

- Y. Okugawa, M. Kubo, H. Sato, and V. Bui. Evaluation for the synchronization of the parade with OpenPose. Proceedings of International Conference on Artificial Life and Robotics, 24:443–446, 01 2019.

- M. Portaz, A. Corbi, A. Casas-Ortiz, and O. C. Santos. Intelligent expertise level assessment using wearables for psychomotor learning in martial arts (master’s thesis). National Distance Education University (UNED), 06 2021. Retrieved Apr 15, 2022, from http://www.ia.uned.es/docencia/posgrado/master-tfm-archivo.html.

- S. M. Rispens, M. Pijnappels, K. S. van Schooten, P. J. Beek, A. Daffertshofer, and J. H. van Dieën. Consistency of gait characteristics as determined from acceleration data collected at different trunk locations. Gait Posture, 40(1):187–192, 2014.

- O. C. Santos. Towards personalized vibrotactile support for learning aikido. In É. Lavoué, H. Drachsler, K. Verbert, J. Broisin, and M. Pérez-Sanagustín, editors, Data Driven Approaches in Digital Education, volume 10474 of Lecture Notes in Computer Science, pages 593–597. Springer International Publishing, 2017.

- O. C. Santos. Training the body: The potential of AIED to support personalized motor skills learning. International Journal of Artificial Intelligence in Education, 26(2):730–755, 2016.

- O. C. Santos and M. H. Eddy. Modeling psychomotor activity: Current approaches and open issues. In Adjunct Publication of the 25th Conference on User Modeling, Adaptation and Personalization, UMAP ’17, page 305–310, New York, NY, USA, 2017. Association for Computing Machinery.

- K. Sun, B. Xiao, D. Liu, and J. Wang. Deep high-resolution representation learning for human pose estimation. In CVPR, page 11, 2019.

- A. Toshev and C. Szegedy. DeepPose: Human pose estimation via deep neural networks. In 2014 IEEE Conference on Computer Vision and Pattern Recognition, pages 1653–1660. IEEE, 2014.

- T. von Marcard, B. Rosenhahn, M. J. Black, and G. Pons-Moll. Sparse inertial poser: Automatic 3d human pose estimation from sparse IMUs. 2017.

- S. Wan, L. Qi, X. Xu, C. Tong, and Z. Gu. Deep learning models for real-time human activity recognition with smartphones. Mobile Networks and Applications, 25(2):743–755, 2020.

- H. Zhou, H. Hu, and Y. Tao. Inertial measurements of upper limb motion. Medical & Biological Engineering & Computing, 44(6):479–487, 2006.

1Acronym of LIght Detection And Ranging.

2Acronym of Inertial Measurement Unit.

3https://ec.europa.eu/social/main.jsp?langId=en&catId=1062

© 2022 Copyright is held by the author(s). This work is distributed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.