ABSTRACT

Typical data science instruction uses generic datasets, such as survival rates on the Titanic, that may not be motivating for students. Will introducing real-life data science problems fill this motivational deficit? To analyze this question, we contrasted learning with generic datasets and artificial problems (Phase 1) with learning with a community-sourced dataset and authentic problems (Phase 2) in the context of an 8-week virtual internship. Retrospective survey questions indicated that interns experienced increased motivation in Phase 2. Additionally, analysis of intern discourse using Linguistic Inquiry and Word Count (LIWC) indicated significant differences (before correction for multiple comparisons) in several linguistic measures between the two phases. Phase 1 had significantly greater measures of pronouns (small-medium effect size), 2nd person words (medium-large), positive emotion (medium), interrogatives (medium-large), question marks (medium-large), risk (medium-large), and causal words (medium). Together with the retrospective survey, these results suggest that in Phase 1 interns asked more questions, defined more causal relationships, and used more language associated with success and failure. Results from Phase 2 suggest that community-sourced data and problems may increase motivation for learning data science.

Keywords

INTRODUCTION

Data science curriculum development is challenging due to prerequisites in statistics, programming, and machine learning [36]. Dataset complexity is another challenge: while educators acknowledge that data science embraces messy data, typical practice is to use sanitized or “canned” datasets to demonstrate a particular approach [3][10]. For example, the UCI Machine Learning Repository, a popular source of datasets, lists datasets on irises, adult income in 1996, and the geographic origin of wines as its top three downloaded datasets [9].

The practice of using canned datasets illustrates the pedagogical tension between keeping intrinsic cognitive load low [34] and preserving opportunities to develop key data science skills for working with messy data. Cognitive load theorists have proposed that motivation is particularly important for learning complex skills over time because motivation leads learners to invest in germane cognitive load [20].

In the context of learning data science, dataset manipulation is a potential avenue for increasing motivation. Personalization has been used in previous research to increase motivation for learning [8]. For example, personalization might entail allowing the learner to choose the dataset or matching a dataset based on the learner’s preference profile. However, this type of data personalization can be challenging because the data in question may not be accessible or suitable for advancing a learning goal.

The alternative explored in the present study is to use datasets and problems sourced from community partners. In the framework of self-determination theory [27], this approach should build intrinsic motivation through the constructs of relatedness (by working on a problem of concern in their community), autonomy (by deciding how to address the problem of concern rather than being told to perform a specific analysis), and potentially competence (by making progress on the problem and so increasing self-efficacy in data science).

Within our research context of an 8-week data science virtual internship, we hypothesized that interns would experience increased motivation during the final phase of the internship in which they worked on community-based problems. To evaluate this hypothesis, we conducted retrospective surveys and analyzed the communications between interns for linguistic indicators of increased motivation, effort, confidence, competence, and emotion.

Background

Internship & motivation

Traditional internships offer a markedly different context for learning compared to formal education. While formal education often relies on prescriptive or rote learning, internships are grounded in real-world tasks that have material consequences for interns in terms of compensation and future employment opportunities. As such, internships have substantial potential to enhance motivation around learning in a way that parallels, and perhaps even surpasses, project-based learning in formal education [2].

The motivational impacts of internships have been documented across the literature. [15] worked with IT interns to examine the roles of tasks, learners, and mentors in a project-based internship program and found that mentors increased learners’ success expectancies and therefore their self-efficacy. On this basis, [15] hypothesized that mentoring increases learners’ self-determination and, in turn, their motivation. In a study of 167 college interns working in retail, [19] found that emotional sharing was positively related to learning and mentoring. Research in cognitive neuroscience suggests that emotions activate neural circuits that engage sensory systems, increasing attention and motivating perceptual processing [17]. Positive emotion was therefore hypothesized to relate to motivation: by strengthening these perceptual factors, it should make learners more compelled to learn.

Similar results have been found in virtual internships [13]. Virtual internships have become a popular alternative to in-person internships due to the COVID-19 pandemic, and they have the potential to offer a unique educational strategy. Research has shown that learning efforts outside of traditional classrooms are needed to address systemic disparities within education [30]. [26] found that virtual internship programs provide a quality opportunity for non-traditional students to gain practical experience regardless of their physical location and other obstacles.

The virtual internship discussed in this paper follows through on goals to help students during the pandemic. This includes paying learners, offering them loaner laptops, and creating a pedagogy that motivates students when working with a local community partner in need.

[18] showed that service-learning increased civic skills, problem-solving, and motivation. This suggests that service-learning internships have the potential to enhance motivation, especially if the service is aligned with the intern’s beliefs and values. Service-learning gained attention in the 1970s [30] and has become increasingly popular in engineering [4] as well as data science, as evidenced by programs such as Data Science for the Social Good.

Research Context

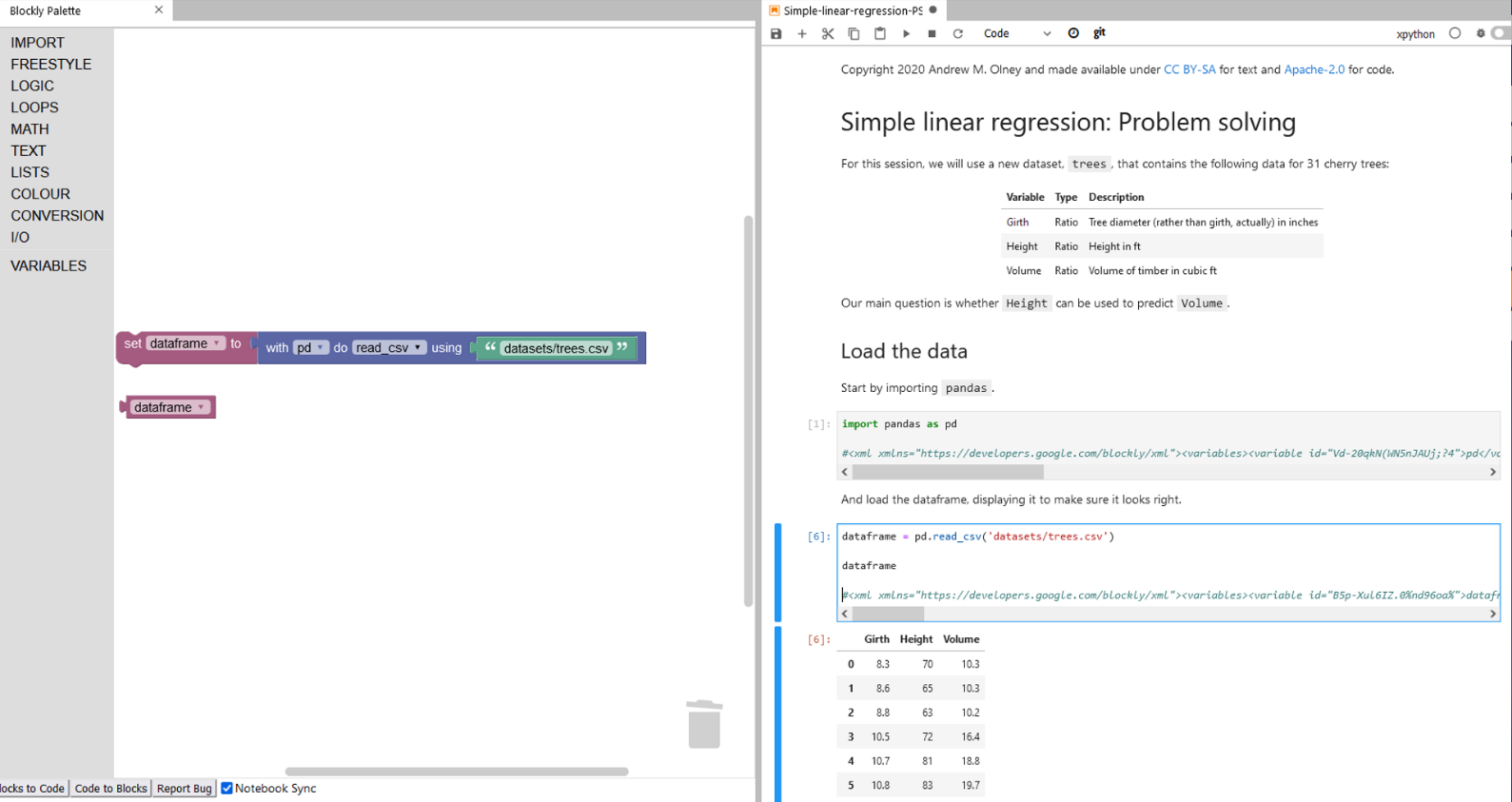

We designed an 8-week data science internship with two phases. Phase 1 of the internship was an educational data science boot camp built on JupyterLab Python notebooks[1] with a Blockly plugin [23], so that students could solve data science problems with a block-based programming language. Learners were split into pods, distributed teams of 3-4 people that each included a student mentor. The notebooks, as seen in Figure 1, covered data science topics such as cleaning data, random forests, regression trees, and cross-validation. To cover these topics on a fixed schedule, Phase 1 used common generic datasets with artificial problems (i.e., problems prescribed by the learning materials). Each topic was introduced as a worked example in the morning, followed by problem-solving in the afternoon. At the end of the day, interns engaged in peer grading and review, which culminated in a group discussion led by a faculty member. In addition to the notebooks, students were provided a reference manual that abstracted key steps from the notebooks, based on an observation that interns sometimes struggled with learning transfer [24]. Phase 1 thus implemented a problem-based learning environment, with mentors to help interns work through the problems. This style of learning has been found to increase intrinsic motivation [5] [16]. It also follows the instructional design suggested by [31], in which students learn from failure: because they have time to rework, fix, and learn from their mistakes, they cannot ultimately fail in this environment. The resulting lack of pressure to be correct is expected to be motivating.

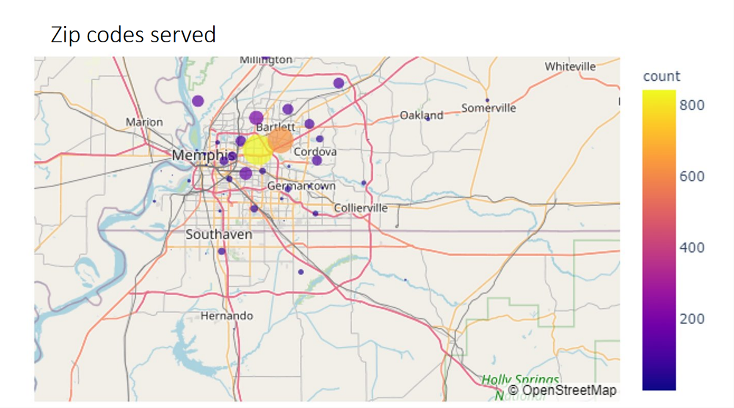

In Phase 2 of the internship, students were regrouped into two teams, each working on its own community-partner project. The projects involved harm-reduction data from a clean needle and Narcan mutual aid group (A Betor Way) and event data from a group that helps battered women get into safe homes and provides resources (Restoration Time Family Youth Services). Both community partners are locally based. Before Phase 2, each partner gave a 10-minute presentation to the interns and faculty and took questions about their work and data. After the presentations, each intern rank-ordered their project preferences, and interns were assigned to teams based on these preferences and the number of students in each group. Each team worked with 1-2 faculty members whom they met regularly (e.g., 2-3 times a day). The faculty members provided guidance and helped set goals and schedules. Each team also had a captain who was nominally responsible for coordinating among team members. An important feature of Phase 2 is its emphasis on reinvention and reconfiguration during a learning task, which has been found to add meaning to the learning experience [6]. Experiential activities, such as authentic real-world problems, cultivate motivation to learn [24]. For example, a study of five forms of experiential learning reported high self-perceived learning in a service-learning setting [7].

LIWC

Linguistic Inquiry and Word Count (LIWC) calculates linguistic measures using a dictionary-based approach that counts the words in a text according to the categories to which they belong [25]. For example, the word “sad” matches a dictionary entry in the negative emotion category and is counted toward that category. The dictionary comprises 94 categories and almost 6,400 dictionary words. The full dictionary and its categories are described in [25]. The 2015 version of the software, which was used in this study, supports Spanish, German, Dutch, Norwegian, Italian, and Portuguese dictionaries in addition to English.
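To make the dictionary-based approach concrete, the following Python sketch counts category matches in a text and reports each category as a percentage of total words, which is how LIWC reports most of its categories. The three-category dictionary, its entries, and the function names are hypothetical illustrations, not the proprietary LIWC2015 dictionary; the trailing `*` mimics LIWC’s convention for word stems.

```python
import re

# Hypothetical toy dictionary for illustration only; it is NOT the LIWC2015
# dictionary. An entry ending in "*" matches any word starting with that stem.
TOY_DICTIONARY = {
    "negemo": ["sad", "hurt*", "worr*"],
    "posemo": ["love", "nice", "sweet"],
    "interrog": ["how", "when", "what"],
}

WORD_RE = re.compile(r"[a-z']+")


def matches(word: str, entry: str) -> bool:
    """True if `word` matches a dictionary entry (exact word or stem*)."""
    if entry.endswith("*"):
        return word.startswith(entry[:-1])
    return word == entry


def category_percentages(text: str, dictionary=TOY_DICTIONARY) -> dict:
    """Percentage of the words in `text` that fall into each category.

    A word may count toward several categories, and words matching no
    entry still count toward the total (the denominator).
    """
    words = WORD_RE.findall(text.lower())
    if not words:
        return {category: 0.0 for category in dictionary}
    return {
        category: 100 * sum(any(matches(w, e) for e in entries) for w in words) / len(words)
        for category, entries in dictionary.items()
    }


scores = category_percentages("I was so worried, but what a nice, sweet fix")
print(scores)  # {'negemo': 10.0, 'posemo': 20.0, 'interrog': 10.0}
```

Because every word enters the denominator, category scores depend on text length, which is one reason LIWC normalizes to percentages rather than raw counts.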

LIWC has been applied to discourse across many topics in education, such as understanding learners’ difficulties, discovering motivational insights into learning styles, and analyzing the sentiment of experts and non-experts over time. [11] showed that language modeling techniques, including LIWC, allowed researchers to interpret learners’ needs and difficulties, and this insight was used to revise course materials and settings to improve the learner experience. LIWC was also used in [36] to study learning styles and classify the motives behind them; this study found that LIWC could identify the motives behind different students’ needs and the learning styles that could be used to address them. Further showing the application of LIWC in educational settings, [1] studied the emotional states of learners in the online Stack Overflow learning community. They found that learners’ posts became more analytical and less authentic over time, meaning that learners progressed in their learning capabilities but became less authentic in their posts. They also found that the clout (i.e., confidence) of non-experts decreased over time in both their question and answer posts, while experts showed a decrease in clout only in their answer posts, not their question posts. This suggests that learners became less confident over time, while experts became less confident in their answers but remained confident in their questions. Across these processes, tasks, and materials, LIWC has been used to understand the social reality of learner discourse.

In the present study, LIWC2015 was used to analyze the change in discourse between Phase 1 and Phase 2 as an indicator of motivation, emotional resonance, confidence, competence, and effort. These constructs were analyzed using LIWC with data extracted from Discord, an online messaging and communication platform that learners used to communicate with each other, their mentors, and faculty. From a psychological perspective, style words such as pronouns and 2nd person words reflect how people are communicating, while content words, such as those in the positive and negative emotion categories, convey what people are saying [35]. Other variables, such as word count, can be used to infer characteristics of a speaker, such as effort. In this way, each construct can be operationalized in terms of LIWC variables. For example, the affective indicators of emotional tone, affect, positive emotion, and negative emotion can represent emotional tendencies. We examined this construct because we expected that when students feel a moral responsibility to help a community partner in need, they will have greater emotional resonance with the task and therefore be more motivated.

Methods

Participants

There were 10 participants in this study, 5 men and 5 women. For the A Betor Way project, there were 3 men and 2 women. For the Restoration Time Family Youth Services project, there were 2 men and 3 women. All but one intern was from a Historically Black College and University (HBCU) in the southern U.S. The HBCU participants came from a variety of majors, and the remaining participant was an incoming data science graduate student. Three participants were mentors. One was an incoming graduate student, and the other two were former interns.

Materials and Procedure

Data for the LIWC analysis were collected by mining the conversations students had on the messaging platform Discord. We created a channel for each pod, a help channel, a general chat channel, and two channels for each community-partner project. The text logs from Phase 1 were collected from the pod channels, and the text logs from Phase 2 were collected from the community-partner channels. Voice channels for general discussion, help, each team, and each project were also created, but they were excluded from analysis because voice conversations were not recorded.

Discord chat logs were exported as JSON files using the Discord Chat Exporter[2]. Then, we used a Python script to collect word counts and word lengths for each student’s posts, grouped by morning and afternoon notebook. We aggregated the posts from each student into a single text for Phase 1 and another for Phase 2. The resulting texts were stored in an Excel file for LIWC analysis, with each student’s combined text for each phase serving as one data point.
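As a rough sketch (not the script used in this study), per-student aggregation for one phase might look like the following. It assumes the DiscordChatExporter JSON layout of a top-level `messages` list whose entries carry a `content` string and an `author` object with a `name`; these field names should be checked against an actual export. The function names and the inline sample data are hypothetical.

```python
import json
import re
from collections import defaultdict

# Assumption: each exported channel is a JSON object with a "messages" list;
# every message has a "content" string and an "author" object with a "name".
WORD_RE = re.compile(r"[A-Za-z']+")


def load_messages(raw_json: str) -> list:
    """Parse one exported channel and return its list of messages."""
    return json.loads(raw_json).get("messages", [])


def aggregate_by_author(channel_exports: list) -> dict:
    """Concatenate each author's posts across channels (one phase) and
    compute simple per-author statistics."""
    posts_by_author = defaultdict(list)
    for raw in channel_exports:
        for msg in load_messages(raw):
            content = msg.get("content", "").strip()
            if content:  # skip messages with no text content
                posts_by_author[msg["author"]["name"]].append(content)

    stats = {}
    for author, posts in posts_by_author.items():
        words = [w for post in posts for w in WORD_RE.findall(post)]
        stats[author] = {
            "text": " ".join(posts),  # one combined text per student per phase
            "posts": len(posts),
            "word_count": len(words),
            "mean_word_length": sum(map(len, words)) / len(words) if words else 0.0,
        }
    return stats


# Hypothetical inline export standing in for a Phase 1 pod channel.
sample = json.dumps({"messages": [
    {"author": {"name": "intern_a"}, "content": "What kind of error are you getting?"},
    {"author": {"name": "intern_b"}, "content": "It says pd is not defined"},
    {"author": {"name": "intern_a"}, "content": "Import pandas as pd first"},
]})
phase1 = aggregate_by_author([sample])
print(phase1["intern_a"]["word_count"], phase1["intern_b"]["posts"])  # 12 1
```

Running the function once over the pod channels and once over the community-partner channels would yield one combined text per student per phase, which could then be written to a spreadsheet for LIWC.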

Survey items were distributed to students retrospectively (i.e., at the end of Phase 2) via Google Forms. The items were constructed around the constructs of motivation and affiliation. Questions used a 5-point Likert scale ranging from no influence to a very strong influence. Two of the questions, shown in the last two rows of Table 2, instead used the midpoint (3) to designate no influence whatsoever.

Results

Table 1. LIWC measures with significant differences between phases (Wilcoxon signed-rank test; p-values uncorrected)

| Measure | Phase 1 M | Phase 1 SD | Phase 2 M | Phase 2 SD | p | d |
|---|---|---|---|---|---|---|
| pronoun | 59.00 | 48.85 | 40.90 | 55.86 | .048 | .34 |
| you | 13.20 | 16.22 | 4.75 | 7.42 | .027 | .67 |
| interrog | 8.50 | 5.70 | 4.84 | 5.44 | .048 | .66 |
| posemo | 16.10 | 13.24 | 9.40 | 13.61 | .037 | .50 |
| cause | 9.81 | 7.91 | 5.55 | 8.30 | .009 | .53 |
| risk | 3.58 | 3.36 | 1.75 | 2.69 | .027 | .60 |
| Qmark | 8.68 | 8.16 | 4.44 | 6.63 | .019 | .57 |

LIWC

All measures reported by LIWC were analyzed using the Wilcoxon signed-rank test, but due to space constraints, we report only significant results in Table 1. The reported p-values are not corrected for multiple comparisons; with 88 tests performed at once, some significant results are expected by chance alone (Type I error). When we corrected the p-values using the strict Holm-Bonferroni method and the less strict Benjamini-Hochberg method, no significant differences remained. A post-hoc power analysis using G*Power 3 revealed that the design was underpowered, with power of .28 to detect a medium effect at α = .05.
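The testing and correction steps described above can be sketched in plain Python. This is not the software used in the study: it implements an exact two-sided Wilcoxon signed-rank test (dropping zero differences and averaging tied ranks) by enumerating every sign pattern, which is feasible for ten paired observations, together with Holm and Benjamini-Hochberg adjusted p-values. Exact p-values can differ slightly from software that uses a normal approximation. The function names and the `m` parameter, which lets the family size exceed the number of p-values supplied, are our own illustrative choices.

```python
from itertools import product


def wilcoxon_exact(x, y):
    """Exact two-sided Wilcoxon signed-rank test for paired samples.

    Returns (W+, p). Zero differences are dropped, tied |differences| get
    average ranks, and the null distribution is built by enumerating all
    2**n sign patterns (fine for small n such as ten interns).
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # assign average ranks to runs of tied |differences|
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    center = sum(ranks) / 2  # the null distribution is symmetric about this
    observed = abs(w_plus - center)
    extreme = sum(
        abs(sum(r for r, s in zip(ranks, signs) if s) - center) >= observed - 1e-9
        for signs in product((0, 1), repeat=n)
    )
    return w_plus, extreme / 2 ** n


def holm(pvals, m=None):
    """Holm step-down adjusted p-values, in input order.

    `m` is the family size; pass it when only the smallest p-values of a
    larger family are available (e.g., the 7 reported out of 88 tests).
    """
    m = len(pvals) if m is None else m
    order = sorted(range(len(pvals)), key=lambda i: pvals[i])
    adjusted, running = [0.0] * len(pvals), 0.0
    for rank, i in enumerate(order):
        running = max(running, (m - rank) * pvals[i])
        adjusted[i] = min(1.0, running)
    return adjusted


def benjamini_hochberg(pvals):
    """Benjamini-Hochberg step-up adjusted p-values; needs the full family."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i], reverse=True)
    adjusted, running = [0.0] * m, 1.0
    for idx, i in enumerate(order):
        rank = m - idx  # 1-based rank of pvals[i] in ascending order
        running = min(running, pvals[i] * m / rank)
        adjusted[i] = running
    return adjusted


# Uncorrected p-values from Table 1; the other 81 LIWC tests had p >= .05,
# so these seven are the smallest in the family of 88.
reported = {"pronoun": .048, "you": .027, "interrog": .048, "posemo": .037,
            "cause": .009, "risk": .027, "Qmark": .019}
holm_adjusted = dict(zip(reported, holm(list(reported.values()), m=88)))
print(min(holm_adjusted.values()) > .05)  # True: nothing survives Holm
```

Holm can be applied to the reported subset because it only needs the smallest p-values and the family size, whereas Benjamini-Hochberg depends on every p-value in the family, so it would require all 88 results.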

All measures in Table 1 significantly decreased from Phase 1 to Phase 2. Pronoun counts total pronouns, such as “I, them, her, itself,” including ‘you’. This measure had a small-medium effect size, whereas the other measures had medium or medium-large effect sizes. You counts 2nd person words such as “you, your, yourself”. Interrog counts interrogatives such as “how, when, what”. Posemo counts positive emotion words such as “love, nice, sweet”. Cause counts causation words such as “before, effect”. Risk counts words associated with risk such as “danger, doubt”. Finally, Qmark counts the number of question marks used.

Survey Results

Only 5 interns responded to the survey. Students self-reported higher motivation when working on the community projects in Phase 2. Table 2 shows the survey questions and their respective scores. The scores across all questions indicate that the interns perceived an increase in motivation during Phase 2. Interns also reported a greater understanding that data science can affect their local communities and an increased sense of connection to those communities. Overall, the survey responses were very positive, but with such a small N the survey has little statistical power.

Table 2. Retrospective survey items and responses (5-point scale)

| Question | M | SD |
|---|---|---|
| How did working with a community partner increase your motivation? | 5.00 | .00 |
| How did working on your team’s project increase your connection to the community? | 4.00 | .49 |
| How did knowing that your project was with community-based data increase your efforts? | 5.00 | .00 |
| To what degree did working with a community partner influence your perspective on data science’s power to have a local impact? | 4.43 | .19 |
| To what degree did your interest in social justice work change since the beginning of the community project? | 4.20 | .44 |

Discussion

Interpretation

Students’ posts indicate that in Phase 1 they asked more questions, explained processes more, took more risks, and expressed a more positive attitude. No LIWC measure increased in Phase 2, which was contrary to our predictions. We anticipated an increase in motivation and, secondarily, increases in effort, competence, confidence, and emotion. The only anticipated construct that showed a difference in the LIWC analysis was emotion (positive emotion), but it changed in the opposite direction. However, our primary hypothesis of increased motivation is supported by the retrospective survey results.

Pronoun means total pronouns used, represented by words such as I, them, her, and itself. The use of pronouns dropped in Phase 2, which could be because there was more direct mentoring in Phase 1. This is exemplified in the sentence “You’re gonna set one of them to Import: ‘pandas’ – as – ‘pd’.” This sentence uses several kinds of pronouns directed at another person in need of assistance. Compare this to the sentence: “Sorry, I got super dugged dow with a dumb error on the scatter matrix, but I’m done now. I’m trying to add a new/return-dependent color to the Narcan Given histogram.” This sentence uses primarily one type of pronoun, the first-person singular. It is possible that in Phase 1 many different pronouns were used in acts of mentoring and calls for assistance, while in Phase 2 a narrower set of pronouns was used to update the chat on project progress. Along with the questions being asked, we can interpret the causal words, 2nd person pronoun usage, and overall pronoun usage. Together, these categories suggest that explanations were more prevalent in Phase 1, because explaining requires stating causal relationships, directing others on what to do, and referring to objects and people. Additionally, no other pronoun subcategory changed significantly. Because you is nested within pronoun, the decrease in you likely contributed substantially to the decrease in total pronouns, although the mean decrease in you (13.20 - 4.75 = 8.45) accounts for less than half of the mean decrease in pronoun (59.00 - 40.90 = 18.10), so non-significant decreases in other subcategories also contributed. [32] found that pronoun use was negatively related to relationship quality, so the decrease in you may indicate that relationship quality improved from Phase 1 to Phase 2. However, this change could simply reflect the passage of time rather than the Phase 2 activity.

You means 2nd person words used, represented by words such as you, your, and yourself. The use of you dropped in Phase 2, which could indicate that there was more instruction in Phase 1. This parallels the reasoning behind the pronoun decrease: there was more variability in pronouns, especially 2nd person words, in Phase 1, whereas instruction of this type was less common in Phase 2. However, we did not observe a significant increase in 1st person words in Phase 2, so any shift toward first-person status updates was too small to detect.

Interrog means interrogatives, and Qmark means question marks; these are represented by words such as how, when, and what, and by the ? character, respectively. We discuss them together because both categories represent questions. There were more interrogatives and question marks in Phase 1 than in Phase 2, and the two measures had similar means within each phase (8.50 and 8.68 in Phase 1; 4.84 and 4.44 in Phase 2). It makes sense for questions to be more prominent in Phase 1 than in Phase 2 given the direct problem-solving nature of the Phase 1 tasks. In Phase 2, there seemed to be a culture of adding question marks to statements that interns were trying to verify, such as: “I guess we have to get dummies for all the non-numerical stuff, right?” In Phase 1, by contrast, both interns and mentors asked more questions, such as: “What kind of error are you getting?” Mentors needed to ask questions to assist, and interns asked questions to get answers. This two-way need for questions could account for the nearly doubled frequency of question marks and interrogatives in Phase 1. Because interrog and Qmark both reflect the construct of questions, we can say that more questions were asked in Phase 1. This makes sense because of the back-and-forth dialogue between learners and mentors, in which both must ask each other questions. In Phase 2, questions seemed to be one-directional: learners and mentors asked questions that a faculty member could answer, with or without a follow-up question. It is important to note that faculty posts were not measured, so any questions faculty asked in reply are not reflected in these results.
Additionally, [28] found that lower-status language is more self-focused and tentative, while higher-status speakers speak more often and make statements more freely. First-person plural usage correlated with higher rank, whereas question marks showed the opposite pattern, being used more by lower-ranked members of a crew. A reduction in question marks could therefore indicate an increased sense of status and, in turn, self-efficacy.

Cause means causation words, such as before and effect. These words were more frequent in Phase 1 than in Phase 2, which could reflect a need to understand the problems in Phase 1 and mentors giving answers in a causal form. Take the example sentence “Ok, it’s because the last 3 freestyle blocks go outside the main block, like this: [screenshot]”, in which a mentor answers a question in a causal style. Examples like this are plentiful in Phase 1 but scarce in Phase 2, perhaps because Phase 2 discussion focused less on understanding what was happening and more on updating the channel on progress. Explanations of how or why something was being done may have been unnecessary because team members already understood the underlying mechanisms. Because causal words decreased, the language arguably became less complex over time, as the interns had more knowledge and did not have to explain themselves as much when talking to each other. This relates to [14], which argued that such discussions are the most complex part of an article because results must be integrated with and differentiated from past findings. It also relates to [12], which showed that prepositions signal that a speaker is providing more complex and concrete information about a topic, and that words longer than six letters also indicate more complex language. These causal relationships suggest a complexity in the Phase 1 conversation that does not occur in Phase 2, possibly due to the nuanced form of problem-based learning in Phase 1. Overall, these results can be interpreted as more questions being asked, and more causal relationships being defined, in Phase 1.

Risk refers to the LIWC risk category, which includes words such as danger and doubt. Risk words were more frequent in Phase 1, which could reflect learners’ hesitancy about the material they were learning: a tentativeness in answering problems rooted in an underlying fear of getting questions wrong. Posemo means positive emotion, represented by words such as love, nice, and sweet. These words were more prominent in Phase 1 than in Phase 2, contrary to our hypothesis. This could be because learners were more likely to express gratitude for answers in Phase 1, and there were fewer questions in Phase 2. Positive emotion could also represent the happiness of getting an answer correct or finding a needed solution, or learners’ gratitude to their mentors for helping them. In this sense, positive emotion is the counterpart of risk, and the two categories might be related in the problem-based learning context. The results for risk and positive emotion are less clear-cut, but they seem to reflect a dichotomy of failure and success. Risk words include a language of doubt and tentativeness. This may seem surprising in Phase 1, but it makes sense in the context of the failure literature [31]. When students are in an environment where they have the option to fail, doubt occurs, and a sense of risk is invoked by the fear of getting problems wrong. Positive emotion reflects the opposite: the joy of getting answers correct. Since there were no problem sets in Phase 2, only a project to complete, there were fewer iterations of the failure-and-success cycle, which would result in fewer positive emotion and risk words. Additionally, the decrease in positive emotion words could indicate less agreement in Phase 2 than in Phase 1, since the Phase 2 task was more difficult and had multiple solutions.
Since risk also decreased, concerns may have decreased as well, which would make sense if learners had gained ability by this point. Lower concern could indicate increased self-efficacy.

Limitations

The study has several limitations. The study was nonexperimental with a low sample size, reducing our power to find an effect or claim causality. By necessity, the order of the phases was not counterbalanced, so some changes in discourse may be due to maturation rather than the community project in Phase 2. LIWC uses a dictionary approach: words are matched to predefined categories rather than analyzed through word relationships and contextual clues [22]. Finally, the survey questions were asked retrospectively, so there was no baseline to measure change, and participants’ responses may have been biased by the retrospective phrasing of the questions.

Future Research

In the future, more participants should be studied because the power of this research is very low for both the LIWC and survey measures. Motivational surveys should be distributed before and after the internship, instead of only after. Also, questions should be reworded and put on the same scale.

This study could also serve as a baseline for comparison with the same internship next year. Comparing years could show whether language patterns change as the program develops.

Another direction for future research would be to include the 4 summary variables in the LIWC dictionary (i.e., analytic, clout, tone, and authentic) to see whether their percentile scores changed across phases. Word count and words per sentence could also be analyzed.
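Word count and words per sentence are simple to approximate outside LIWC. The sketch below computes word count (WC), words per sentence (WPS), and the percentage of words longer than six letters (Sixltr, the long-word measure discussed in connection with [12]), splitting sentences on `.`, `!`, and `?`. The tokenization and sentence rules are simplifying assumptions, so the values will not exactly match LIWC’s output; the function name is hypothetical.

```python
import re

WORD_RE = re.compile(r"[A-Za-z']+")
SENTENCE_END_RE = re.compile(r"[.!?]+")


def surface_measures(text: str) -> dict:
    """Approximate WC, WPS, and Sixltr for one text.

    Assumption: a sentence is any stretch of text between runs of ., !, or ?
    that contains at least one word; real LIWC tokenization differs.
    """
    words = WORD_RE.findall(text)
    sentences = [s for s in SENTENCE_END_RE.split(text) if WORD_RE.search(s)]
    wc = len(words)
    return {
        "WC": wc,
        "WPS": wc / len(sentences) if sentences else 0.0,
        # Percentage of words with more than six letters.
        "Sixltr": 100 * sum(len(w) > 6 for w in words) / wc if wc else 0.0,
    }


print(surface_measures("Import pandas first. Then plot the histogram!"))
```

Chat messages often omit final punctuation, so in practice each post might be treated as ending a sentence before computing WPS.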

Finally, it would be useful to measure language use as a daily time series over the four weeks to see how it changes from day to day. This would allow us to see the movement and variability of sentiment change.
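As a sketch of such a time series, the function below computes the daily rate of question marks per 100 words from timestamped messages. It assumes each timestamp begins with an ISO-8601 date (as we expect in a DiscordChatExporter JSON export) and uses only that date part; the same grouping could be applied to any LIWC-style category. The function name and sample messages are hypothetical.

```python
import re
from collections import defaultdict
from datetime import date

WORD_RE = re.compile(r"[A-Za-z']+")


def daily_question_rate(messages) -> dict:
    """Question marks per 100 words for each calendar day.

    `messages` is an iterable of (timestamp, text) pairs where the timestamp
    starts with an ISO-8601 date (YYYY-MM-DD); time of day is ignored.
    """
    words = defaultdict(int)
    qmarks = defaultdict(int)
    for timestamp, text in messages:
        day = date.fromisoformat(timestamp[:10])
        words[day] += len(WORD_RE.findall(text))
        qmarks[day] += text.count("?")
    # Days with no words are skipped to avoid dividing by zero.
    return {day: 100 * qmarks[day] / words[day] for day in sorted(words) if words[day]}


rates = daily_question_rate([
    ("2021-06-01T10:00:00+00:00", "What kind of error are you getting?"),
    ("2021-06-01T10:05:00+00:00", "Import pandas as pd first"),
    ("2021-06-02T09:00:00+00:00", "Done with the histogram"),
])
for day, rate in rates.items():
    print(day, round(rate, 2))
```

Normalizing by words per day, rather than counting raw question marks, keeps busy and quiet days comparable.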

Conclusions

This study suggests that learners asked more questions, described more relationships, and were more positive in Phase 1. Students also reported being more motivated in Phase 2.

It is important to look at the LIWC analysis between phases because it highlights the psychological underpinnings of learners. There is potential to discover constructs lying within the text. In this study, those constructs were the questions asked, the discussion of causal relationships, and the influences of success and failure.

Even though we did not detect the hypothesized changes in confidence, competence, and effort, we did find higher pronoun usage, 2nd person words, questions, positive emotion, causal words, and risk in Phase 1, and increased motivation in Phase 2. This is valuable because it suggests that in a problem-based learning environment with mentors, more questions may be asked, more relationships may be discussed, learners may be willing to take more risks, and they may emotionally reap the rewards of getting things correct by taking those risks.

This study also found a change in emotion, our secondary hypothesis, but in the opposite direction than we expected. We attribute this result to the joy of success when solving problems rather than the joy of working with a community partner. Although we did not see a linguistic increase in positivity, learners reported increased interest in social justice and greater motivation to complete the project. This suggests that, despite the LIWC results, Phase 2 had an impact on learners.

The significance of this research lies in educational design: it suggests that project-based service-learning programs can increase motivation and that a problem-based environment elicits the discussed constructs.

ACKNOWLEDGMENTS

This material is based upon work supported by the National Science Foundation under Grant No. 1918751. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. Additionally, we would like to thank Dr. Andrew Tawfik and Linda Payne for helpful and engaging conversations.

REFERENCES

- Adaji, I. and Olakanmi, O. 2019. Evolution of Emotions and Sentiments in an Online Learning Community. International Workshop on Supporting Lifelong Learning. (2019).

- Barron, B., Schwartz, D., Vye, N., Moore, A., Petrosino, A., Zech, L. and Bransford, J. 2011. Doing with Understanding: Lessons From Research on Problem- and Project-Based Learning. The Journal of the Learning Sciences. 7, 3–4 (2011), 271–311. DOI:https://doi.org/10.1080/10508406.1998.9672056.

- Baumer, B. 2014. A Data Science Course for Undergraduates: Thinking With Data. The American Statistician. 69, 4 (2014), 334–342. DOI:https://doi.org/10.48550/arXiv.1503.05570.

- Bielefeldt, A.R. and Swan, C.W. 2010. Measuring the Value Added from Service Learning in Project-Based Engineering Education. International Journal of Engineering Education. 26, 3 (2010), 535–546.

- Blumenfeld, P., Soloway, E., Marx, R.W., Krajcik, J.S., Guzdial, M. and Palincsar, A. 1991. Motivating Project-Based Learning: Sustaining the Doing, Supporting the Learning. Educational Psychologist. 26, 3–4 (1991), 369–398. DOI:https://doi.org/10.1080/00461520.1991.9653139.

- Cam, P. Dewey’s Continuing Relevance to Thinking in Education. Philosophical Reflections for Educators.

- Coker, J.S. and Porter, D.J. 2016. Student Motivations and Perception Across and Within Five Forms of Experiential Learning. The Journal of General Education. 65, 2 (2016), 138–156. DOI:https://doi.org/10.5325/jgeneeduc.65.2.0138.

- Cordova, D.I. and Lepper, M.R. 1996. Intrinsic motivation and the process of learning: Beneficial effects of contextualization, personalization, and choice. Journal of Educational Psychology. 88, 4 (1996), 715–730. DOI:https://doi.org/10.1037/0022-0663.88.4.715.

- Dua, D. and Graff, C. 2019. UCI Machine Learning Repository [http://archive.ics.uci.edu/ml]. Irvine, CA: University of California, School of Information and Computer Science.

- Erickson, T., Finzer, B., Reichsman, F. and Wilkerson, M. 2018. Data Moves: One Key To Data Science At The School Level. Proceedings of the International Conference on Teaching Statistics. 10, (2018), 6.

- Geng, S., Niu, B., Feng, Y. and Huang, M. 2020. Understanding the focal points and sentiment of learners in MOOC reviews: A machine learning and SC-LIWC-based approach. British Journal of Educational Technology. 51, 5 (2020), 1785–1803. DOI:https://doi.org/10.1111/bjet.12999.

- Graesser, A.C., McNamara, D.S., Louwerse, M.M. and Cai, Z. 2004. Coh-Metrix: Analysis of text on cohesion and language. Behavior Research Methods, Instruments, & Computers. 36, 2 (2004), 193–202. DOI:https://doi.org/10.3758/BF03195564.

- Grangeia, T. de A.G., Jorge, B. de, Franci, D., Santos, T.M., Setubal, M.S.V., Schweller, M. and Carvalho-Filho, M.A. de 2016. Cognitive Load and Self-Determination Theories Applied to E-Learning: Impact on Students’ Participation and Academic Performance. Plos One. (2016). DOI:https://doi.org/10.1371/journal.pone.0152462.

- Hartley, J., Pennebaker, J.W. and Fox, C. 2003. Abstracts, introductions and discussions: How far do they differ in style? Scientometrics. 57, 3 (2003), 389–398. DOI:https://doi.org/10.1023/A:1025008802657.

- Johari, A. and Bradshaw, A. 2008. Project-based learning in an internship program: A qualitative study of related roles and their motivational attributes. Educational Technology Research and Development. 56, 3 (2008), 329–359. DOI:https://doi.org/10.1007/s11423-006-9009-2.

- Land, S. and Hannafin, M. 1996. A conceptual framework for the development of theories-in-action with open learning environments. Educational Technology Research and Development. 44, 3 (1996), 37–53. DOI:https://doi.org/10.1007/BF02300424.

- Lang, P. and Bradley, M. 2010. Emotion and the motivational brain. Biological Psychology. 84, 3 (2010), 437–450. DOI:https://doi.org/10.1016/j.biopsycho.2009.10.007.

- Levesque-Bristol, C., Knapp, T.D. and Fisher, B.J. 2011. The Effectiveness of Service-Learning: It’s Not Always what you Think. The Journal of Experiential Learning. 33, 3 (2011), 208–224. DOI:https://doi.org/10.1177/105382590113300302.

- Liu, Y., Xu, J. and Weitz, B.A. 2011. The Role of Emotional Expression and Mentoring in Internship Learning. Academy of Management Learning & Education. 10, 1 (2011). DOI:https://doi.org/10.5465/amle.10.1.zqr94.

- van Merriënboer, J.J.G. and Sweller, J. 2005. Cognitive Load Theory and Complex Learning: Recent Developments and Future Directions. Educational Psychology Review. 17, 2 (2005), 147–177. DOI:https://doi.org/10.1007/s10648-005-3951-0.

- Molock, S. and Parchem, B. 2021. The impact of COVID-19 on college students from communities of color. Journal of American College Health. (2021). DOI:https://doi.org/10.1080/07448481.2020.1865380.

- Moore, R.L., Yen, C.J. and Powers, F.E. 2020. Exploring the relationship between clout and cognitive processing in MOOC discussion forums. British Journal of Educational Technology. 52, 1 (2020), 482–497. DOI:https://doi.org/10.1111/bjet.13033.

- Olney, A.M. and Fleming, S.D. 2021. JupyterLab Extensions for Blocks Programming, Self-Explanations, and HTML Injection. Joint Proceedings of the Workshops at the 14th International Conference on Educational Data Mining (T.W. Price and S. San Pedro, Eds.). CEUR-WS.org. 3051, (2021), CSEDM–8.

- Payne, L., Tawfik, A. and Olney, A. 2021. Datawhys Phase 1: Problem Solving to Facilitate Data Science & STEM Learning Among Summer Interns. International Journal of Designs for Learning. 12, 3 (2021), 102–117. DOI:https://doi.org/10.14434/ijdl.v12i3.31555.

- Pennebaker, J.W., Boyd, R.L., Jordan, K. and Blackburn, K. 2015. The Development and Psychometric Properties of LIWC2015. The University of Texas at Austin.

- Ruggiero, D. and Boehm, J. 2016. Design and Development of a Learning Design Virtual Internship. The International Review of Research in Open and Distributed Learning. 17, 4 (2016). DOI:https://doi.org/10.19173/irrodl.v17i4.2385.

- Ryan, R.M. and Deci, E. 2000. Self-Determination Theory and the Facilitation of Intrinsic Motivation, Social Development, and Well-being. American Psychologist. 55, 1 (2000), 68–78. DOI:https://doi.org/10.1037/0003-066X.55.1.68.

- Sexton, J.B. and Helmreich, R.L. 2000. Analyzing cockpit communications: The links between language, performance, error, and workload. Human Performance in Extreme Environments. 5, 1 (2000), 63–68.

- Sibthorp, J., Furman, N., Paisley, K., Gookin, J. and Schumann, S. 2011. Mechanisms of Learning Transfer in Adventure Education: Qualitative Results From the NOLS Survey. Journal of Experiential Education. 34, 2 (2011), 109–126. DOI:https://doi.org/10.5193/JEE34.2.109.

- Sigmon, R. 1979. Service-Learning: Three Principles. Synergist. (1979).

- Simpson, A. and Maltese, A. 2017. “Failure is a major component of learning anything”: The role of failure in the development of STEM professionals. Journal of Science Education and Technology. 26, 2 (2017), 223–237. DOI:https://doi.org/10.1007/s10956-016-9674-9.

- Simmons, R.A., Chambless, D.L. and Gordon, P.C. 2008. How do hostile and emotionally overinvolved relatives view relationships? What relatives’ pronoun use tells us. Family Process. 47, 3 (2008), 405–419. DOI:https://doi.org/10.1111/j.1545-5300.2008.00261.x.

- Stansbie, P. and Nash, R. 2013. Internship Design and Its Impact on Student Satisfaction and Intrinsic Motivation. Journal of Hospitality & Tourism Education. 25, 4 (2013), 157–168. DOI:https://doi.org/10.1080/10963758.2013.850293.

- Sweller, J. 1994. Cognitive Load Theory, Learning Difficulty, and Instructional Design. Learning and Instruction. 4, 4 (1994), 295–312. DOI:https://doi.org/10.1016/0959-4752(94)90003-5.

- Tausczik, Y.R. and Pennebaker, J.W. 2010. The psychological meaning of words: LIWC and computerized text analysis methods. Journal of Language and Social Psychology. 29, 1 (2010), 24–54. DOI:https://doi.org/10.1177/0261927X09351676.

- National Academies of Sciences, Engineering, and Medicine. 2018. Data Science for Undergraduates: Opportunities and Options. The National Academies Press.

- Tran, X., Williams, J., Mitre, B., Walker, V. and Carter, K. 2017. Learning Styles, Motivation, and Career Choice: Insights for International Business Students From Linguistic Inquiry. Journal of Teaching International Business. 28, 3–4 (2017), 154–167. DOI:https://doi.org/10.1080/08975930.2017.1384949.

by GitHub user Tyrrrz (https://github.com/Tyrrrz/DiscordChatExporter).

© 2022 Copyright is held by the author(s). This work is distributed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.