ABSTRACT

Online learning has been spreading with the increasing availability and diversity of digital resources. Understanding how students’ cognitive load and affect change when they use learning technologies can help us decipher the learning process and understand student needs. In this research, we focus on modeling learners’ cognitive load and affect using real-time physiological reactions. We explore what affect modeling contributes to the modeling of cognitive load and how real-time cognitive load changes alongside learning activities. We further investigate whether cognitive load modeling helps diagnose learner knowledge and facilitates improvement. We have designed two case studies: one in which students learn Python with an e-learning system and another in which they practice literacy skills with a web-based learning game. To collect learner data, we have implemented a sensing prototype consisting of an eye tracker and a wireless wristband.

Keywords

INTRODUCTION

The cognitive processes that underpin information processing and knowledge construction in learning have gained increasing attention from educational researchers. Prior studies have found that cognitive load has a complex relationship with learning performance [22]. Models such as cognitive load theory (CLT) [24, 33] have been used to explain learners’ cognitive processes. How a student’s cognitive load responds to an abundance of information is a key factor in learning performance. While previous research has shown the impact of cognitive load on learning outcomes, how cognitive load changes in real time while learners use online educational technologies has not yet been fully explored. Moreover, the diversification of how learning materials are presented, and the corresponding changes in learning methods, suggest a need to explore their impact on learner cognitive load.

Although CLT effectively represents learners’ cognitive processing, some have argued that it fails to fully explain learning performance [21, 24]. Given the role that affective states appear to play in learning [10], recent research has suggested that people’s affective reactions might contribute to changes in their cognitive load during learning. Neuroscience research has suggested that the brain mechanisms underlying affect and cognition are not fundamentally different [18]. The potential interactivity between affect and cognitive load [9, 11, 16] suggests that there may be value in jointly modelling these constructs.

Previous learner modeling studies using cognitive load mostly focused on supporting adaptation (e.g., feedback [6], problem selection [17]) to improve student performance. We argue that by including learner affect alongside cognitive load, learner modeling has the potential to improve learners’ experiences while supporting effective learning.

In this thesis project, we explore the potential of modeling students’ real-time cognitive load when using online educational tools to support adaptive learning experiences. We highlight learners’ affective reactions and their contributions to cognitive load as an area that has not been sufficiently considered in previous student modeling studies. Based on recent research on cognitive processes in learning, we expect that a learner’s cognitive load is affected by task difficulty and by the sequence of learning units, and can therefore change over the course of learning.

To achieve our goal, we will conduct user studies in different educational domains. The online learning environments include one that supports learning to program in Python and an educational game that supports literacy development. A sensing platform has been built to collect and synchronize different types of learner physiological data. Multimodal analysis will be conducted to detect learner cognitive load and affect. The resulting cognitive load models will be used to predict learners’ knowledge in each domain.

Related Work

Cognitive Load and Affect

CLT is widely used in educational design. Its application to enhance instruction has supported the learning of children, teens [35], and older adults [23, 38] in a wide variety of domains. CLT is grounded in an understanding of the human cognitive architecture that is supported by previous research on working memory models [2] and mental effort [31].

CLT distinguishes three types of cognitive load. Intrinsic load (IL) reflects the inherent difficulty of what needs to be learned and should be managed so that it does not exceed the capacity of working memory. Extraneous load (EL) is unnecessary load that does not support learning [20, 34]; it is usually caused by the design of specific learning activities or systems. The third type, germane load (GL), refers to the mental resources devoted to acquiring and automating schemata.

CLT can help us understand students’ learning patterns and provide guidance for adaptive learning design. However, we expect that it will not be sufficient for modeling students’ cognitive load, because recent neuroscience research has shown the interconnectedness of affect and cognition, despite earlier theories that posited the two processes were separate [26]. Studies suggest that cognitive and affective behaviors interact richly, and some argue that emotions arise from the same general cortical system that processes cognition [18]. These findings support the joint inclusion of affect and cognitive load within learner models.

While the connection between affect and learning has long been studied [37], many studies have explored affect without considering its implications for cognitive load. For example, previous research on negative emotions reported that students who experienced longer periods of boredom tended to score lower than those who experienced positive emotions [7]. However, the type of negative affect matters: students might achieve better learning performance when given erroneous examples, even though those examples produced confusion and frustration [5].

While some argue for the interrelatedness of affect and cognition, there is no consensus on how to incorporate affect into cognitive load modeling. Some argue that affect could increase extraneous cognitive load because emotion regulation may add non-task-related processing that consumes extra cognitive resources [12]. Others have suggested that affect could be beneficial, as it can foster motivation so that learners invest more cognitive effort [14]. Even negative emotions could motivate learners to turn to learning as a way to shift their attention away from their negative emotional states [4]. In this thesis project, we aim to recognize students’ affective reactions in computer-supported online learning and to investigate how affect contributes to learner cognitive load in these settings.

Measurement of Cognitive Load & Affect

To achieve our goal, appropriate data collection and modeling approaches must be selected. Commonly used methods include subjective rating scales as well as task- and performance-based methods. According to Paas and van Merriënboer, cognitive load can be assessed through the aspects of mental load, mental effort, and performance [25]. Subjective rating scales have been widely used and are grounded in the belief that people can reflect on their cognitive processes and report the amount of mental effort they expended. The NASA Task Load Index (NASA-TLX) is one instrument that attempts to capture this type of information [13]. However, such scales may not capture unconscious and automatic processes. Task- and performance-based methods have also been commonly used to estimate cognitive load from reaction time or accuracy on a secondary task. Although monitoring such tasks itself requires few cognitive resources, their use may interfere with the primary task when a reaction is necessary [8].

As the nature of questionnaires and performance evaluation implies, the above two methods rely on data collected after an experiment and therefore do not support continuous monitoring. Brain-activity-based methods such as electroencephalography (EEG) have been used to identify changes in cognitive load [1], but they require participants to wear complex, obtrusive instruments. More recently, advances in sensing technologies have made physiological-data-based methods a promising way to address this issue. Eye trackers are one such sensor and can now be worn like a pair of glasses. They provide information about pupillary responses, which are considered a reliable source for investigating cognitive processes. One project found that pupil diameter changes identified visual presentations that induced lower cognitive load in a math-education context [15]. More generally, increased cognitive load has been associated with increases in pupil diameter due to decreasing parasympathetic activity in the peripheral nervous system [3].

In addition to estimating cognitive load from dynamic pupillary information, research has shown that users’ gaze trajectory data can reliably quantify reading behavior. For example, eye-gaze data has been used to infer users’ cognitive styles in reading activities [28] and to assess the impact of distractions on surgeons’ intraoperative performance [32]. Such visual information is key to investigating learners’ attention patterns and strategic processes.

In the context of affect measurement, three groups of tools are commonly used: psychological (i.e., self-reported), physiological, and behavioral [39]. While psychological methods often depend on respondents’ ability to consciously process their affective responses, physiological methods allow researchers to capture non-conscious aspects. Physiological arousal data, such as electrodermal activity (EDA) and skin temperature, are considered to provide robust signals for measuring affect [29].

In this thesis project, we incorporate questionnaires and physiological tracking including eye-tracking data, cardiovascular responses, and EDA data for cognitive load and affect measurement.

RESEARCH QUESTION & APPROACH

Considering the research problem mentioned above, the overall goal of this thesis is to model students’ cognitive load and affect across instructional domains. To address this goal, case studies will be conducted to collect learner data, including their performance and reactions to learning activities. Models will then be developed using the collected data. Across this work we will answer the following questions.

Q1 What does affect modeling contribute to the modeling of cognitive load in online learning settings?

Q2 How do learner affect and cognitive load change alongside their learning when interacting with educational systems?

Q3 Can affect and cognitive load help in identifying a learner's knowledge state and trajectories?

To answer these questions, we have designed and will conduct lab-based case studies. Two case studies will be performed with different educational technologies: one is used to teach undergraduate students how to program and the other helps students improve their reading comprehension.

What does affect modeling contribute to the modeling of cognitive load in online learning settings?

We would like to investigate the added benefit, if any, of jointly modeling affect and cognitive load so we can determine what affect contributes to learner cognitive load modeling.

Considering the dynamic, sequential nature of cognitive load, we will use hidden Markov models (HMMs) to model learners’ cognitive load. An HMM requires observable evidence from which the value of a hidden state can be inferred. We will use physiological reactions as the observable signals for estimating the hidden cognitive load state.
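To make the HMM formulation concrete, the following is a minimal sketch of filtering with the forward algorithm. It assumes two hypothetical hidden states ("low" and "high" cognitive load) and observations that have already been discretized into "small" or "large" pupil-dilation changes; all probabilities are illustrative placeholders, not values estimated from our data, and the models we train may use continuous emissions and more states.

```python
# Hypothetical two-state HMM: hidden cognitive load is "low" or "high";
# each observation is a discretized pupil-dilation change ("small" or "large").
# All probabilities are illustrative placeholders, not fitted values.
STATES = ("low", "high")
START = {"low": 0.6, "high": 0.4}
TRANSITION = {
    "low": {"low": 0.8, "high": 0.2},
    "high": {"low": 0.3, "high": 0.7},
}
EMISSION = {
    "low": {"small": 0.7, "large": 0.3},
    "high": {"small": 0.2, "large": 0.8},
}


def filter_load(observations):
    """Forward algorithm: return P(state_t | obs_1..t) for every time step."""
    posteriors = []
    belief = None
    for obs in observations:
        if belief is None:
            # First step: prior times the likelihood of the first observation.
            unnorm = {s: START[s] * EMISSION[s][obs] for s in STATES}
        else:
            # Propagate the previous belief through the transition model,
            # then weight by how likely each state is to emit `obs`.
            unnorm = {
                s: EMISSION[s][obs] * sum(belief[p] * TRANSITION[p][s] for p in STATES)
                for s in STATES
            }
        total = sum(unnorm.values())
        belief = {s: v / total for s, v in unnorm.items()}  # normalize each step
        posteriors.append(belief)
    return posteriors


for t, post in enumerate(filter_load(["small", "large", "large", "small"])):
    print(t, round(post["high"], 3))
```

In this toy run, the probability of the "high" state rises over consecutive "large" dilation observations and falls again after a "small" one, which is the kind of sequential evidence accumulation that motivates an HMM over per-window classification.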

We will compare the performance of estimating intrinsic and extraneous cognitive load from physiological features (i.e., pupil dilation (PD) and heart rate) against the performance obtained when EDA data are also included. Participants’ responses to the affect and cognitive load scales will serve as the reference.
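The with/without-EDA comparison can be illustrated with a deliberately simple sketch. Here we swap in a leave-one-out nearest-centroid classifier (not the HMM described above) purely to show how the same self-report labels can score two feature sets. The feature names `pd`, `hr`, and `eda`, the assumption that features are already z-scored per participant, and the toy windows are all hypothetical.

```python
import math


def nearest_centroid_loo(samples, features):
    """Leave-one-out accuracy of a nearest-centroid classifier.

    `samples` is a list of (feature_dict, label) pairs. Labels stand in for
    self-reported load levels; features are assumed to be z-scored already.
    """
    correct = 0
    for i, (x, y) in enumerate(samples):
        train = [s for j, s in enumerate(samples) if j != i]
        # Mean feature vector per label, computed on the training fold only.
        centroids = {}
        for label in {lab for _, lab in train}:
            rows = [f for f, lab in train if lab == label]
            centroids[label] = [sum(r[k] for r in rows) / len(rows) for k in features]
        point = [x[k] for k in features]
        pred = min(centroids, key=lambda lab: math.dist(point, centroids[lab]))
        correct += int(pred == y)
    return correct / len(samples)


# Hypothetical toy windows: (z-scored features, self-reported load level).
toy_windows = [
    ({"pd": 0.1, "hr": 0.0, "eda": -1.0}, "low"),
    ({"pd": -0.1, "hr": 0.2, "eda": -1.2}, "low"),
    ({"pd": 0.2, "hr": -0.1, "eda": -0.8}, "low"),
    ({"pd": 0.0, "hr": 0.1, "eda": 1.0}, "high"),
    ({"pd": 0.1, "hr": -0.2, "eda": 1.1}, "high"),
    ({"pd": -0.2, "hr": 0.0, "eda": 0.9}, "high"),
]

print("PD + HR:      ", nearest_centroid_loo(toy_windows, ["pd", "hr"]))
print("PD + HR + EDA:", nearest_centroid_loo(toy_windows, ["pd", "hr", "eda"]))
```

Holding the labels and evaluation protocol fixed while varying only the feature list isolates what the additional channel contributes; in a real analysis the split would more likely be by participant than by window.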

For the pupil data, we will use changes in PD rather than its absolute value as a feature, to reduce the influence of individual differences on model training. In a preprocessing step, we will express PD values as proportional change by dividing each pupil-size sample by the grand mean PD during the baseline period, averaged across all attempts.
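The baseline normalization step can be sketched as follows. This assumes one reading of "grand mean": each attempt’s baseline samples are averaged first, and those per-attempt means are then averaged. It also assumes blinks and missing samples have already been removed. Values above 1 indicate dilation relative to baseline.

```python
from statistics import fmean


def baseline_grand_mean(baseline_periods):
    """Grand mean pupil diameter: average each attempt's baseline, then average those means."""
    return fmean(fmean(period) for period in baseline_periods)


def proportional_pd(samples, baseline_periods):
    """Express each pupil-diameter sample as a proportion of the baseline grand mean.

    Assumes blinks and missing samples were removed beforehand.
    """
    grand_mean = baseline_grand_mean(baseline_periods)
    return [pd / grand_mean for pd in samples]


# Hypothetical baseline recordings (mm) from two attempts, and task-period samples.
baselines = [[3.0, 3.2], [3.1, 3.1]]
print(proportional_pd([3.1, 3.41, 2.79], baselines))  # approximately [1.0, 1.1, 0.9]
```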

How do learner affect and cognitive load change alongside their learning when interacting with educational systems?

We will investigate the dynamics of cognitive load and affect across learning activities with a focus on what contributes to changes in cognitive load and affect. We are especially interested in understanding how the learning activity sequence and user interface design contribute to these latent states.

We would like to compare the continuous changes in cognitive load and affect with information about learner behavior and performance in the educational technologies. Learner interaction and learning performance information (e.g., score, task completion) will be extracted from the learning systems. Learner behavior data will come from two sources: the learning system’s logs (e.g., keyboard input, buttons clicked, questions answered) and eye tracking (e.g., gaze points and trajectories).
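Raw gaze points are usually grouped into fixations before trajectories are analyzed. The sketch below is a hand-rolled version of the dispersion-threshold (I-DT) algorithm, not the fixation detector shipped with Pupil Labs’ software. It assumes gaze is given as `(timestamp_s, x, y)` tuples in normalized screen coordinates; the `max_dispersion` and `min_duration` thresholds are hypothetical and would need tuning.

```python
def _dispersion(points):
    """I-DT dispersion: horizontal range plus vertical range of the points."""
    xs = [p[1] for p in points]
    ys = [p[2] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(gaze, max_dispersion=0.02, min_duration=0.1):
    """Dispersion-threshold (I-DT) fixation detection.

    `gaze` is a time-ordered list of (timestamp_s, x, y) tuples in normalized
    coordinates. Thresholds are hypothetical defaults. Returns a list of
    (start_s, end_s, centroid_x, centroid_y) tuples.
    """
    fixations = []
    i, n = 0, len(gaze)
    while i < n:
        # Grow an initial window that spans at least `min_duration` seconds.
        j = i
        while j < n and gaze[j][0] - gaze[i][0] < min_duration:
            j += 1
        if j >= n:
            break  # not enough remaining data for another fixation
        if _dispersion(gaze[i : j + 1]) <= max_dispersion:
            # Extend the window while the points stay within the dispersion limit.
            while j + 1 < n and _dispersion(gaze[i : j + 2]) <= max_dispersion:
                j += 1
            window = gaze[i : j + 1]
            cx = sum(p[1] for p in window) / len(window)
            cy = sum(p[2] for p in window) / len(window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            i = j + 1
        else:
            i += 1  # slide past a saccade sample
    return fixations


# Hypothetical 200 Hz gaze: 0.2 s on one spot, then 0.2 s on another.
gaze = [(k * 0.005, 0.5, 0.5) for k in range(40)] + [
    (k * 0.005, 0.8, 0.2) for k in range(40, 80)
]
print(detect_fixations(gaze))
```

Recomputing the dispersion on every extension keeps the sketch short but is quadratic in window length; a production version would track running minima and maxima.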

We will investigate what kinds of information contribute to increases in cognitive load or changes in affect by comparing students’ eye-tracking data and system actions at critical moments (i.e., moments when cognitive load or affect changes).
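One way to operationalize "critical moments" is sketched below, under the assumption that a model produces a time-stamped sequence of discrete state estimates. A critical moment is taken to be any change in the estimated state, and log or gaze events are then collected in a window around it. The 10-second window widths and the event labels are hypothetical.

```python
def critical_moments(timestamps, states):
    """Return (time, from_state, to_state) for every change in the estimated state."""
    return [
        (timestamps[k], states[k - 1], states[k])
        for k in range(1, len(states))
        if states[k] != states[k - 1]
    ]


def events_near(events, moment_time, before=10.0, after=10.0):
    """Select (timestamp, description) events within a window around a moment.

    The default window widths are hypothetical choices.
    """
    return [e for e in events if moment_time - before <= e[0] <= moment_time + after]


# Hypothetical estimated load states every 5 s and system log events.
times = [0, 5, 10, 15, 20]
states = ["low", "low", "high", "high", "low"]
log = [(3, "run code"), (9, "submit answer"), (14, "open hint"), (35, "next question")]

for t, old, new in critical_moments(times, states):
    print(t, old, "->", new, events_near(log, t))
```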

Ideally, we will compare the cognitive load and affect levels of students with similar achievement. This analysis will be performed across their learning session(s) to explore whether shared patterns emerge.

Can affect and cognitive load help in identifying a learner's knowledge state and trajectories?

We will use the models developed in Sections 3.1 and 3.2 to help predict learners’ knowledge and how it changes during learning. To answer this question, initial experiments will be conducted to predict a learner’s knowledge level. Such predictions can be important for providing suitable learning materials and learning sequences. As an initial step, we will evaluate two approaches: a heuristic approach based on collaborative filtering (CF) and a machine learning (ML) approach using engineered features. Based on the initial results, further experiments will be performed to identify changes in learner knowledge or mastery.
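The CF heuristic can be illustrated with a minimal user-based sketch: a learner’s score on an unattempted item is predicted as a similarity-weighted average of peers’ scores on that item. The similarity used here (one minus the mean absolute score difference on shared items) is one simple choice assumed for illustration; cosine or Pearson similarity are common alternatives. The learner names, topic names, and scores are hypothetical, and this sketch does not yet include the cognitive load or affect features.

```python
def similarity(a, b):
    """1 - mean absolute score difference over items both learners attempted.

    Scores are assumed to lie in [0, 1], so the similarity also lies in [0, 1].
    """
    shared = a.keys() & b.keys()
    if not shared:
        return 0.0
    return 1.0 - sum(abs(a[k] - b[k]) for k in shared) / len(shared)


def predict_score(matrix, learner, item):
    """User-based CF: similarity-weighted mean of peers' scores on `item`.

    Returns None when no similar peer has attempted the item.
    """
    num = den = 0.0
    for peer, peer_scores in matrix.items():
        if peer == learner or item not in peer_scores:
            continue
        sim = similarity(matrix[learner], peer_scores)
        if sim <= 0:
            continue
        num += sim * peer_scores[item]
        den += sim
    return num / den if den else None


# Hypothetical learner x topic score matrix (missing key = not attempted).
scores = {
    "ana": {"loops": 1.0, "lists": 1.0},
    "ben": {"loops": 1.0, "lists": 1.0, "functions": 0.9},
    "cai": {"loops": 0.0, "lists": 0.2, "functions": 0.1},
}
print(predict_score(scores, "ana", "functions"))
```

Because "ana" matches "ben" closely and "cai" poorly, the prediction lands near ben’s score on "functions" rather than at the unweighted peer average.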

PROGRESS TO DATE & PLAN

So far, we have implemented our sensing platform and designed two case studies. The proposed case studies have been approved by our institutional Research Ethics Board (REB), and we have begun piloting the first.

Sensing System

In our literature search, we found no integrated sensing system that would allow us to easily collect multiple types of learner physiological data. In this project, we use multimodal analysis to support the recognition of cognitive load and affect. We therefore need a sensing platform that can collect and synchronize learners’ physiological features automatically. The resulting databases of learners’ physiological reactions can then be used to extract their cognitive load and affect characteristics. Our approach focuses on modeling two dimensions of real-time physiological information: pupil dynamics collected by an eye tracker, and cardiovascular and EDA data from non-invasive wearable sensors. As mentioned in Section 2, previous studies have shown the feasibility of using pupil dynamics and electrodermal activity for the continuous monitoring of cognitive load. These types of data have also been used to recognize state-based affect.

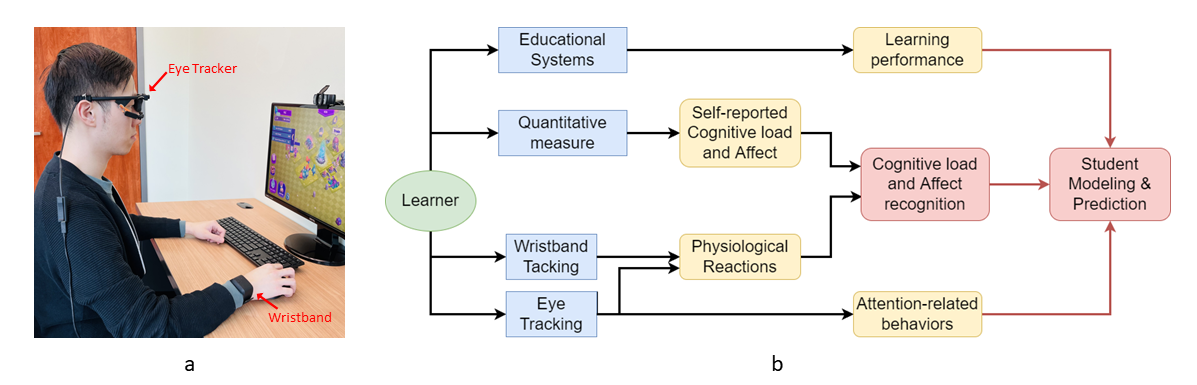

We developed a sensing platform that allows participants to remain relatively comfortable (Figure 1-a). The platform consists of two non-invasive sensors and a software system that synchronizes data from the different channels. The first sensor is an open-source eye tracker (Pupil Core, Pupil Labs), which records the movements of both eyes at 200 Hz and supports gaze tracking and pupillometry. It is worn like ordinary glasses and includes a scene camera that records what the user sees. The second sensor is a wireless wristband (E4, Empatica Inc.), which is worn like a wristwatch and connects via Bluetooth. The wristband includes photoplethysmography (PPG), electrodermal activity (EDA), skin temperature, and accelerometer sensors. The PPG sensor measures blood volume pulse (BVP), from which heart rate can be calculated.
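The BVP-to-heart-rate step can be sketched as peak detection followed by averaging inter-beat intervals. This is a simplified illustration, not Empatica’s own algorithm: real BVP signals are noisy and are normally band-pass filtered and artifact-checked first, which is omitted here. The 64 Hz sampling rate in the usage example and the 0.33 s refractory period (which caps detection at roughly 180 beats per minute) are assumptions.

```python
import math


def detect_peaks(signal, fs, min_interval_s=0.33):
    """Indices of local maxima above the signal mean, at least `min_interval_s` apart.

    `min_interval_s` is a hypothetical refractory period between beats.
    """
    mean = sum(signal) / len(signal)
    min_gap = int(min_interval_s * fs)
    peaks = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i - 1] < signal[i] >= signal[i + 1]
        if is_local_max and signal[i] > mean:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    return peaks


def heart_rate_bpm(signal, fs):
    """Mean heart rate (beats per minute) from the average inter-beat interval."""
    peaks = detect_peaks(signal, fs)
    if len(peaks) < 2:
        return None
    intervals = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    return 60.0 / (sum(intervals) / len(intervals))


# Synthetic 10 s "BVP" at an assumed 64 Hz: a clean 1.25 Hz (75 bpm) sine wave.
fs = 64
bvp = [math.sin(2 * math.pi * 1.25 * n / fs) for n in range(fs * 10)]
print(heart_rate_bpm(bvp, fs))  # close to 75
```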

Because these time series come from different sources, they need to be synchronized before analysis. For this purpose, we have developed a system based on an open multimodal recording framework, the Lab Streaming Layer (LSL). This system will support real-time data streaming, synchronization, and recording on a laptop.
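LSL timestamps every sample against a common clock, so after recording, streams sampled at different rates still have to be aligned for analysis. The sketch below shows one hypothetical post-hoc step, not part of LSL itself: each sample in a lower-rate stream (e.g., EDA at an assumed 4 Hz) is matched to the timestamps of a reference stream (e.g., pupil data) by nearest timestamp. It assumes both streams are time-sorted and already on the shared clock. The `max_lag` tolerance is a hypothetical choice.

```python
from bisect import bisect_left


def align_nearest(ref_times, times, values, max_lag=0.5):
    """For each reference timestamp, take the value of the closest sample in another stream.

    Both streams must be time-sorted and on a shared clock. Returns None where
    the nearest sample is more than `max_lag` seconds away (hypothetical tolerance).
    """
    if not times:
        return [None] * len(ref_times)
    aligned = []
    for t in ref_times:
        k = bisect_left(times, t)
        # Candidates: the sample just before t and the sample at or after t.
        candidates = [c for c in (k - 1, k) if 0 <= c < len(times)]
        best = min(candidates, key=lambda c: abs(times[c] - t))
        aligned.append(values[best] if abs(times[best] - t) <= max_lag else None)
    return aligned


# Hypothetical EDA samples (microsiemens) at 4 Hz, aligned to four pupil timestamps.
eda_times = [0.0, 0.25, 0.5, 0.75]
eda_values = [0.41, 0.42, 0.44, 0.43]
print(align_nearest([0.1, 0.3, 0.62, 2.0], eda_times, eda_values))
```

Nearest-sample matching keeps the original values intact; interpolation or windowed averaging are reasonable alternatives when the slower signal changes smoothly.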

Case Studies

As discussed in Section 3, our case studies will collect three types of learner data (Figure 1-b): (a) physiological data from the sensing system, (b) self-report measures and demographic information from questionnaires, and (c) learner interaction and performance data from the learning system.

Experiment procedure

The experiment will take approximately 90 minutes. After participants give consent, the sensors will be calibrated. Participants will then interact with the study’s e-learning system for approximately 60 minutes while the sensors collect data as they perform learning tasks. Self-report instruments measuring affect and cognitive load will be administered every 5-10 minutes, and demographic information will be collected at the end.

Learning environments (LE)

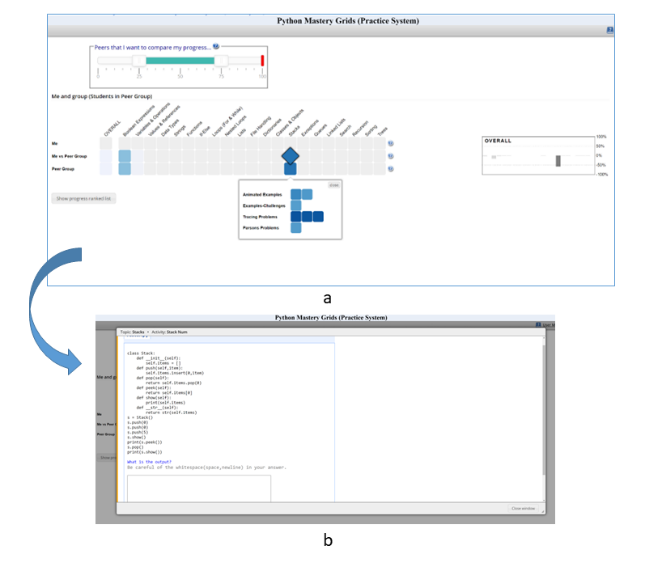

The first study (S1) will take place in our lab while students learn programming with the Mastery Grids e-learning system (Figure 2). This system visualizes learner progress and provides multiple types of interactive learning activities. An explicit, visually rich representation with social comparison helps students track their activities alongside those of their peers. Interactive learning content with feedback helps students practice and makes them aware of the knowledge they are expected to learn.

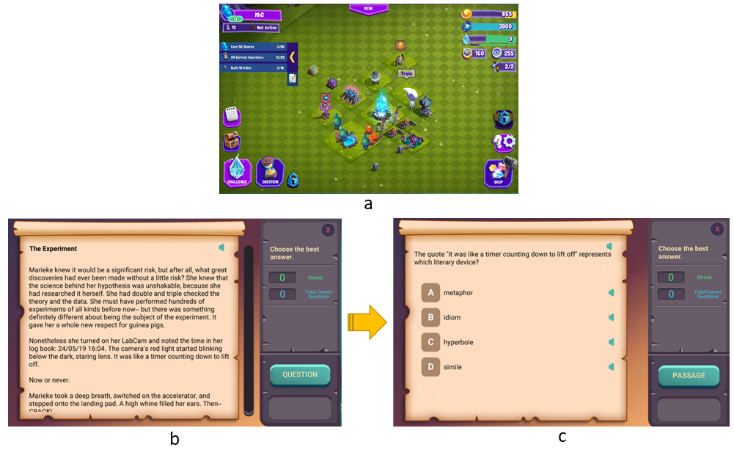

The second study (S2) will be conducted while learners use a web-based online learning game (Figure 3). The game engages players in building English literacy skills through a popular base-building game design. Players take on a role in the realm of dreams and are tasked with defending their virtual home from invading “reveries” (in-game creatures). The game combines strategic, engaging game elements with passage-reading and comprehension tasks to create an interactive learning experience.

Participants

Different participants will be recruited for each case study. The target participants in S1 (n = 35) will be undergraduate students registered in an introductory computer science course that teaches programming in Python. The target participants in S2 (n = 35) will be students registered in an English as an additional language course.

Self-reported measures

In this project, self-report information will serve as a reference for validating our sensor-based measurements. We follow the CLT definition [33], dividing cognitive load into three types: intrinsic, extraneous, and germane. Based on prior work [19], we developed Likert-scale items to measure all three types of cognitive load.
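Using self-reports to validate sensor-based estimates can be as simple as correlating the two at each questionnaire probe. The sketch below computes a Pearson correlation between hypothetical model estimates (e.g., the probability of a high-load state at each probe) and hypothetical self-reported load ratings. Because Likert ratings are ordinal and probes are nested within participants, a rank-based or repeated-measures correlation may be more appropriate in practice; this pooled version is only illustrative.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between paired sensor estimates and self-report ratings.

    Raises ZeroDivisionError if either sequence is constant.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical values at four questionnaire probes for one participant.
estimated_high_load = [0.2, 0.5, 0.4, 0.9]  # model output
self_reported_load = [2, 5, 4, 8]           # Likert-style rating
print(round(pearson_r(estimated_high_load, self_reported_load), 3))
```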

Affect has been defined in many different ways, and no generally agreed-upon definition has emerged [26, 27]. We follow Russell’s model [30], which describes affect along two dimensions: arousal and valence. We adopt an established scale, the International Positive and Negative Affect Schedule Short Form (I-PANAS-SF) [36], to measure learners’ positive and negative affect.
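Scoring the I-PANAS-SF is straightforward. The scale has ten items, five positive and five negative, each rated from 1 to 5. The sketch below sums each subscale, giving scores from 5 to 25; some studies average the items instead. The item keys mirror the published item wording, but the dictionary-based response format is an assumption about how our questionnaire data will be stored.

```python
POSITIVE_ITEMS = ("alert", "inspired", "determined", "attentive", "active")
NEGATIVE_ITEMS = ("upset", "hostile", "ashamed", "nervous", "afraid")


def score_i_panas_sf(responses):
    """Return (positive_affect, negative_affect) sums from 1-5 ratings keyed by item.

    `responses` is an assumed dict format, e.g. {"alert": 4, ...}; a missing
    item raises KeyError and an out-of-range rating raises ValueError.
    """
    for item in POSITIVE_ITEMS + NEGATIVE_ITEMS:
        rating = responses[item]
        if not 1 <= rating <= 5:
            raise ValueError(f"{item}: rating {rating} outside 1-5")
    positive = sum(responses[item] for item in POSITIVE_ITEMS)
    negative = sum(responses[item] for item in NEGATIVE_ITEMS)
    return positive, negative


# Hypothetical responses from one probe.
probe = {
    "alert": 4, "inspired": 3, "determined": 4, "attentive": 5, "active": 3,
    "upset": 1, "hostile": 1, "ashamed": 2, "nervous": 3, "afraid": 1,
}
print(score_i_panas_sf(probe))  # (19, 8)
```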

We will collect participants’ demographic information using a questionnaire at the end of the study session. In addition to basic information (e.g., age, gender) we will collect information about participants’ programming background for S1 and language-learning background (e.g., mother tongue, IELTS/TOEFL scores) for S2.

Future Work

In conclusion, we have implemented the sensing prototype and designed the case studies that will collect learner data for student modeling. We will conduct the case studies and perform the modeling work.

If modelling cognitive load and affect better explains student learning trajectories, we will incorporate these models to inform system adaptation, with the aim of improving students’ learning experiences and performance.

ACKNOWLEDGEMENTS

This work is supported in part by funding from the Social Sciences and Humanities Research Council of Canada and the Natural Sciences and Engineering Research Council of Canada (NSERC), [RGPIN-2018-03834].

References

- Antonenko, P., Paas, F., Grabner, R. and van Gog, T. 2010. Using Electroencephalography to Measure Cognitive Load. Educational Psychology Review. 22, 4 (Dec. 2010), 425–438. DOI:https://doi.org/10.1007/s10648-010-9130-y.

- Baddeley, A., Logie, R., Bressi, S., Sala, S.D. and Spinnler, H. 1986. Dementia and Working Memory. The Quarterly Journal of Experimental Psychology Section A. 38, 4 (Nov. 1986), 603–618. DOI:https://doi.org/10.1080/14640748608401616.

- Beatty, J. 1982. Task-evoked pupillary responses, processing load, and the structure of processing resources. Psychological Bulletin. 91, 2 (1982), 276–292. DOI:https://doi.org/10.1037/0033-2909.91.2.276.

- Bless, H. and Fiedler, K. 2006. Mood and the regulation of information processing and behavior. Affect in social thinking and behavior. Psychology Press. 65–84.

- Booth, J.L., Lange, K.E., Koedinger, K.R. and Newton, K.J. 2013. Using example problems to improve student learning in algebra: Differentiating between correct and incorrect examples. Learning and Instruction. 25, (Jun. 2013), 24–34. DOI:https://doi.org/10.1016/j.learninstruc.2012.11.002.

- Bounajim, D., Rachmatullah, A., Hinckle, M., Mott, B., Lester, J., Smith, A., Emerson, A., Morshed Fahid, F., Tian, X., Wiggins, J.B., Elizabeth Boyer, K. and Wiebe, E. 2021. Applying Cognitive Load Theory to Examine STEM Undergraduate Students’ Experiences in An Adaptive Learning Environment: A Mixed-Methods Study. Proceedings of the Human Factors and Ergonomics Society Annual Meeting. 65, 1 (Sep. 2021), 556–560. DOI:https://doi.org/10.1177/1071181321651249.

- Brand, S., Reimer, T. and Opwis, K. 2007. How do we learn in a negative mood? Effects of a negative mood on transfer and learning. Learning and Instruction. 17, 1 (Feb. 2007), 1–16. DOI:https://doi.org/10.1016/j.learninstruc.2006.11.002.

- Brunken, R., Plass, J.L. and Leutner, D. 2003. Direct Measurement of Cognitive Load in Multimedia Learning. Educational Psychologist. 38, 1 (Mar. 2003), 53–61. DOI:https://doi.org/10.1207/S15326985EP3801_7.

- Brünken, R., Plass, J.L. and Moreno, R. eds. 2010. Current issues and open questions in cognitive load research. Cognitive load theory. Cambridge University Press. 253–272.

- Craig, S., Graesser, A., Sullins, J. and Gholson, B. 2004. Affect and learning: An exploratory look into the role of affect in learning with AutoTutor. Journal of Educational Media. 29, 3 (Oct. 2004), 241–250. DOI:https://doi.org/10.1080/1358165042000283101.

- Fraser, K., Huffman, J., Ma, I., Sobczak, M., McIlwrick, J., Wright, B. and McLaughlin, K. 2014. The emotional and cognitive impact of unexpected simulated patient death: a randomized controlled trial. Chest. 145, 5 (May 2014), 958–963. DOI:https://doi.org/10.1378/chest.13-0987.

- Fraser, K.L., Ayres, P. and Sweller, J. 2015. Cognitive Load Theory for the Design of Medical Simulations. Simulation in Healthcare. 10, 5 (Oct. 2015), 295–307. DOI:https://doi.org/10.1097/SIH.0000000000000097.

- Hart, S.G. and Staveland, L.E. 1988. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Advances in Psychology. P.A. Hancock and N. Meshkati, eds. North-Holland. 139–183.

- Kalyuga, S. and Singh, A.-M. 2016. Rethinking the Boundaries of Cognitive Load Theory in Complex Learning. Educational Psychology Review. 28, 4 (Dec. 2016), 831–852. DOI:https://doi.org/10.1007/s10648-015-9352-0.

- Klingner, J., Tversky, B. and Hanrahan, P. 2011. Effects of visual and verbal presentation on cognitive load in vigilance, memory, and arithmetic tasks. Psychophysiology. 48, 3 (2011), 323–332. DOI:https://doi.org/10.1111/j.1469-8986.2010.01069.x.

- Knörzer, L., Brünken, R. and Park, B. 2016. Facilitators or suppressors: Effects of experimentally induced emotions on multimedia learning. Learning and Instruction. 44, (Aug. 2016), 97–107. DOI:https://doi.org/10.1016/j.learninstruc.2016.04.002.

- Koedinger, K.R. and Aleven, V. 2007. Exploring the Assistance Dilemma in Experiments with Cognitive Tutors. Educational Psychology Review. 19, 3 (Sep. 2007), 239–264. DOI:https://doi.org/10.1007/s10648-007-9049-0.

- LeDoux, J.E. and Brown, R. 2017. A higher-order theory of emotional consciousness. Proceedings of the National Academy of Sciences. 114, 10 (Mar. 2017), E2016–E2025. DOI:https://doi.org/10.1073/pnas.1619316114.

- Leppink, J., Paas, F., van Gog, T., van der Vleuten, C.P.M. and van Merriënboer, J.J.G. 2014. Effects of pairs of problems and examples on task performance and different types of cognitive load. Learning and Instruction. 30, (Apr. 2014), 32–42. DOI:https://doi.org/10.1016/j.learninstruc.2013.12.001.

- Mayer, R.E. and Moreno, R. 2003. Nine Ways to Reduce Cognitive Load in Multimedia Learning. Educational Psychologist. 38, 1 (Mar. 2003), 43–52. DOI:https://doi.org/10.1207/S15326985EP3801_6.

- Moreno, R. 2010. Cognitive load theory: more food for thought. Instructional Science. 38, 2 (Mar. 2010), 135–141. DOI:https://doi.org/10.1007/s11251-009-9122-9.

- Paas, F. and Ayres, P. 2014. Cognitive Load Theory: A Broader View on the Role of Memory in Learning and Education. Educational Psychology Review. 26, 2 (Jun. 2014), 191–195. DOI:https://doi.org/10.1007/s10648-014-9263-5.

- Paas, F., Camp, G. and Rikers, R. 2001. Instructional compensation for age-related cognitive declines: Effects of goal specificity in maze learning. Journal of Educational Psychology. 93, 1 (2001), 181–186. DOI:https://doi.org/10.1037/0022-0663.93.1.181.

- Paas, F., Tuovinen, J.E., Tabbers, H. and Van Gerven, P.W.M. 2003. Cognitive Load Measurement as a Means to Advance Cognitive Load Theory. Educational Psychologist. 38, 1 (Mar. 2003), 63–71. DOI:https://doi.org/10.1207/S15326985EP3801_8.

- Paas, F.G.W.C. and Van Merriënboer, J.J.G. 1994. Instructional control of cognitive load in the training of complex cognitive tasks. Educational Psychology Review. 6, 4 (Dec. 1994), 351–371. DOI:https://doi.org/10.1007/BF02213420.

- Pessoa, L. 2008. On the relationship between emotion and cognition. Nature Reviews Neuroscience. 9, 2 (Feb. 2008), 148–158. DOI:https://doi.org/10.1038/nrn2317.

- Plass, J.L. and Kaplan, U. 2016. Emotional Design in Digital Media for Learning. Emotions, Technology, Design, and Learning. S.Y. Tettegah and M. Gartmeier, eds. Academic Press. 131–161.

- Raptis, G.E., Katsini, C., Belk, M., Fidas, C., Samaras, G. and Avouris, N. 2017. Using Eye Gaze Data and Visual Activities to Infer Human Cognitive Styles: Method and Feasibility Studies. Proceedings of the 25th Conference on User Modeling, Adaptation and Personalization (New York, NY, USA, Jul. 2017), 164–173.

- Rogers, K.B. and Robinson, D.T. 2014. Measuring Affect and Emotions. Handbook of the Sociology of Emotions: Volume II. J.E. Stets and J.H. Turner, eds. Springer Netherlands. 283–303.

- Russell, J.A. 2003. Core affect and the psychological construction of emotion. Psychological Review. 110, 1 (2003), 145–172. DOI:https://doi.org/10.1037/0033-295X.110.1.145.

- Salomon, G. 1984. Television is “easy” and print is “tough”: The differential investment of mental effort in learning as a function of perceptions and attributions. Journal of Educational Psychology. 76, 4 (1984), 647–658. DOI:https://doi.org/10.1037/0022-0663.76.4.647.

- Sutton, E., Youssef, Y., Meenaghan, N., Godinez, C., Xiao, Y., Lee, T., Dexter, D. and Park, A. 2010. Gaze disruptions experienced by the laparoscopic operating surgeon. Surgical Endoscopy. 24, 6 (Jun. 2010), 1240–1244. DOI:https://doi.org/10.1007/s00464-009-0753-3.

- Sweller, J. 1988. Cognitive load during problem solving: Effects on learning. Cognitive Science. 12, 2 (Apr. 1988), 257–285. DOI:https://doi.org/10.1016/0364-0213(88)90023-7.

- Sweller, J. 2010. Element Interactivity and Intrinsic, Extraneous, and Germane Cognitive Load. Educational Psychology Review. 22, 2 (Jun. 2010), 123–138. DOI:https://doi.org/10.1007/s10648-010-9128-5.

- Sweller, J., van Merrienboer, J.J.G. and Paas, F.G.W.C. 1998. Cognitive Architecture and Instructional Design. Educational Psychology Review. 10, 3 (Sep. 1998), 251–296. DOI:https://doi.org/10.1023/A:1022193728205.

- Thompson, E.R. 2007. Development and Validation of an Internationally Reliable Short-Form of the Positive and Negative Affect Schedule (PANAS). Journal of Cross-Cultural Psychology. 38, 2 (Mar. 2007), 227–242. DOI:https://doi.org/10.1177/0022022106297301.

- Tyng, C.M., Amin, H.U., Saad, M.N.M. and Malik, A.S. 2017. The Influences of Emotion on Learning and Memory. Frontiers in Psychology. 8, (2017), 1454.

- Van Gerven, P.W.M., Paas, F.G.W.C., Van Merriënboer, J.J.G. and Schmidt, H.G. 2002. Cognitive load theory and aging: effects of worked examples on training efficiency. Learning and Instruction. 12, 1 (Feb. 2002), 87–105. DOI:https://doi.org/10.1016/S0959-4752(01)00017-2.

- Zimmermann, P. 2008. Beyond usability: measuring aspects of user experience. (2008).

© 2022 Copyright is held by the author(s). This work is distributed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.