ABSTRACT

This study investigates the heterogeneity in the effects of a Learning Analytics Dashboard (LAD) intervention, which provides personalized feedback messages, across a diverse population of learners. Specifically, it evaluates the impact of the LAD on learners’ total material usage and final grades, considering variables such as age, sex, prior material access level, prior academic performance, and first-year status. The intervention was implemented through a controlled experimental design with 26,753 learners over 17 weeks in a fully online course. Participants were randomly assigned to either the experimental (n = 13,377) or control (n = 13,376) groups. Results indicate significant heterogeneity in the effects of the LAD intervention within the online learning environment. The LAD improved learning material usage and academic performance, with factors such as sex, age, prior course material access, and prior academic performance moderating these effects. Female learners, older learners, and those with low prior academic performance benefited more from the intervention. This suggests that personalized learning analytics tools can enhance engagement and outcomes for specific learner subgroups, reinforcing the need for targeted interventions in online education.

1. INTRODUCTION

Educational institutions are increasingly adopting virtual learning environments to enhance students’ learning experiences. With the rise of online learning platforms, the volume of learner activity data has expanded significantly. Learning analytics enables a deeper understanding of these learning processes and facilitates targeted interventions. Learning Analytics Dashboards (LADs) serve as a crucial tool in this context, offering learners real-time feedback and insights into their educational progress [21, 28]. Schwendimann et al. [21] define a learning analytics dashboard (LAD) as "a single display that aggregates different indicators about learner(s), learning process(es) and/or learning context(s) into one or multiple visualizations" (p.37). LADs not only provide graphical representations of data but also facilitate actionable insights through personalized, real-time feedback, empowering learners to make data-driven decisions about their educational journey [9, 7, 22]. While LADs can be adapted to meet the needs of a variety of stakeholders within educational settings, this study focuses on learner-facing LADs, which are designed to engage learners directly and provide them with insights into their own learning processes.

Despite the growing use of LADs, their impact on learners remains underexplored, particularly concerning heterogeneous treatment effects (HTE) [27, 10, 23, 8, 21]. Most studies focus on average treatment effects (ATE), which may obscure important differences among diverse learner groups. Understanding how learners with different characteristics respond to LAD interventions is essential for designing equitable and effective educational tools. In particular, large-scale and empirical research is needed to determine the extent to which these tools provide benefits to different subgroups of learners [4, 10, 21]. This study addresses this gap by investigating whether LAD effects vary significantly across different learner profiles in a large-scale online learning environment. Using HTE analysis, we explore which learner characteristics are most strongly associated with treatment effect variations. By identifying subgroups that benefit most from LADs, this research contributes to the development of personalized learning analytics strategies.

This study aims to investigate the effect heterogeneity of a LAD intervention within an online learning environment. The LAD developed in this study consists of several components, including learning behaviors, performance indicators, exam-related metrics, and feedback messages. We employed a controlled experimental design with 26,753 participants. The intervention was implemented over 17 weeks in a fully online course. This study is significant for examining the effects of LADs in a large-scale learning environment and their impact on different learner groups. In addition, the results are expected to contribute to the more personalized and effective use of learning analytics tools, while also informing strategies to improve learning outcomes in online environments.

Research Questions: This study investigates whether the effects of an LAD that provides personalized feedback messages vary significantly across different learner profiles. It also seeks to characterize these differential effects and thereby contribute to the development of more personalized and effective learning analytics tools. To this end, the study addresses the following research questions:

- RQ1: Do the effects of learning analytics dashboard (LAD) interventions vary substantially across different groups of online learners (e.g., low- and high-performers)?

- RQ2: Which learner characteristics are most strongly associated with such treatment effect variations?

2. RELATED WORK

2.1 Learner-Facing Analytics Dashboards

Learner-facing Learning Analytics Dashboards (LADs) are capable of visualizing the various components of learning processes, monitoring temporal changes, and presenting data related to learning behaviors in online learning environments in a holistic manner [19]. These dashboards support learners’ self-regulated learning, self-reflection, and self-awareness skills through the information they provide about online learning experiences, contributing to enhanced learning outcomes, academic performance, engagement, and motivation [16, 29, 23]. However, the existing literature indicates a limited number of studies examining the influence of LADs on learning outcomes [10]. Furthermore, the results of these studies are not sufficiently generalizable to be applicable more widely [10, 21].

Despite these limitations, several studies have highlighted associations between learner characteristics (academic achievement level, prior knowledge, motivation, self-regulation skills, and demographic variables) and learners’ responses to and usage patterns of LADs [20, 7]. Alam et al. found that learners with access to the LAD performed better than those without, with the greatest benefits observed among frequent LAD users [1]. Similarly, Chen et al. reported that high-performers used LADs more frequently during the preview and review phases and employed more monitoring and reflection strategies compared to low-performers [5]. In another study, Wang et al. revealed that the extent and impact of dashboards on learning outcomes are determined by learners’ prior knowledge and learning strategies [31]. Interestingly, Kim et al. reported that high-achieving learners who frequently used the LAD expressed lower levels of satisfaction with the dashboard [12]. In contrast, Kia et al. observed that low-achieving learners tended to use the LAD more frequently and monitored their status within the class more often [11].

While the empirical validation of LADs’ effects on academic achievement is a topic of ongoing research, the literature indicates that LADs primarily influence learners’ learning behaviors, engagement, and motivation levels, potentially enhancing interaction in online learning environments, rather than directly impacting academic achievement [19, 10]. Future research can contribute to the development of personalized and adaptive learning tools by examining the effectiveness of LADs in meeting the heterogeneous needs of diverse learner profiles.

2.2 Effect Heterogeneity in Online Learning

Heterogeneous treatment effects (HTEs) are defined as variations in causal effects of an intervention (treatment) across individuals or subgroups within a population [6, 3]. This reflects the phenomenon whereby the same intervention produces different outcomes depending on individual characteristics or contextual factors [32]. There is growing interest in predicting and analyzing HTEs in experimental and observational studies [13, 25, 32]. However, the application of this approach within educational contexts is still limited [14]. Understanding how a particular educational intervention affects different groups of learners is important for personalizing and targeting educational strategies. Such analyses can help develop customized learning tools to meet diverse needs. HTE analysis has the potential to enhance learning outcomes within diverse learner populations, facilitating data-driven decision-making and policy implementation in educational institutions.

Studies examining the effects of educational interventions on different learner groups focus on explaining how various learner groups experience these interventions and to what extent they benefit from them. Leite et al. [14] investigated the impact of a video recommendation system on diverse learner populations. Their results revealed that specific groups derived more benefit from the intervention, including those with advanced prior algebraic knowledge, who demonstrated a higher rate of engagement with the recommendations, distance learners, Hispanic learners, and those who received free or reduced-price lunch [14]. In their experimental study investigating the effects of LA-based feedback, Lim et al. [15] found that learners in the experimental group exhibited significantly different learning patterns and achieved higher final grades, while the impact of feedback on final grades showed no difference among learners with varying levels of prior academic achievement.

A review of the existing literature reveals a significant need for more experimental investigations of how different subgroups experience and benefit from educational technologies [14, 4, 21]. This study addresses this gap through an examination of the effects of a LAD on a diverse population of learners in a large-scale online learning environment.

3. METHODOLOGY

3.1 Background on LAD Intervention

This study was conducted within an online undergraduate course (BIT102) at a large online university in Türkiye. The university provides fully online degree programs to approximately one million learners across Türkiye [26]. The university’s learning management system (LMS) offers a wide range of learning resources, including textual, visual, auditory, and video content, as well as live lecture sessions [2].

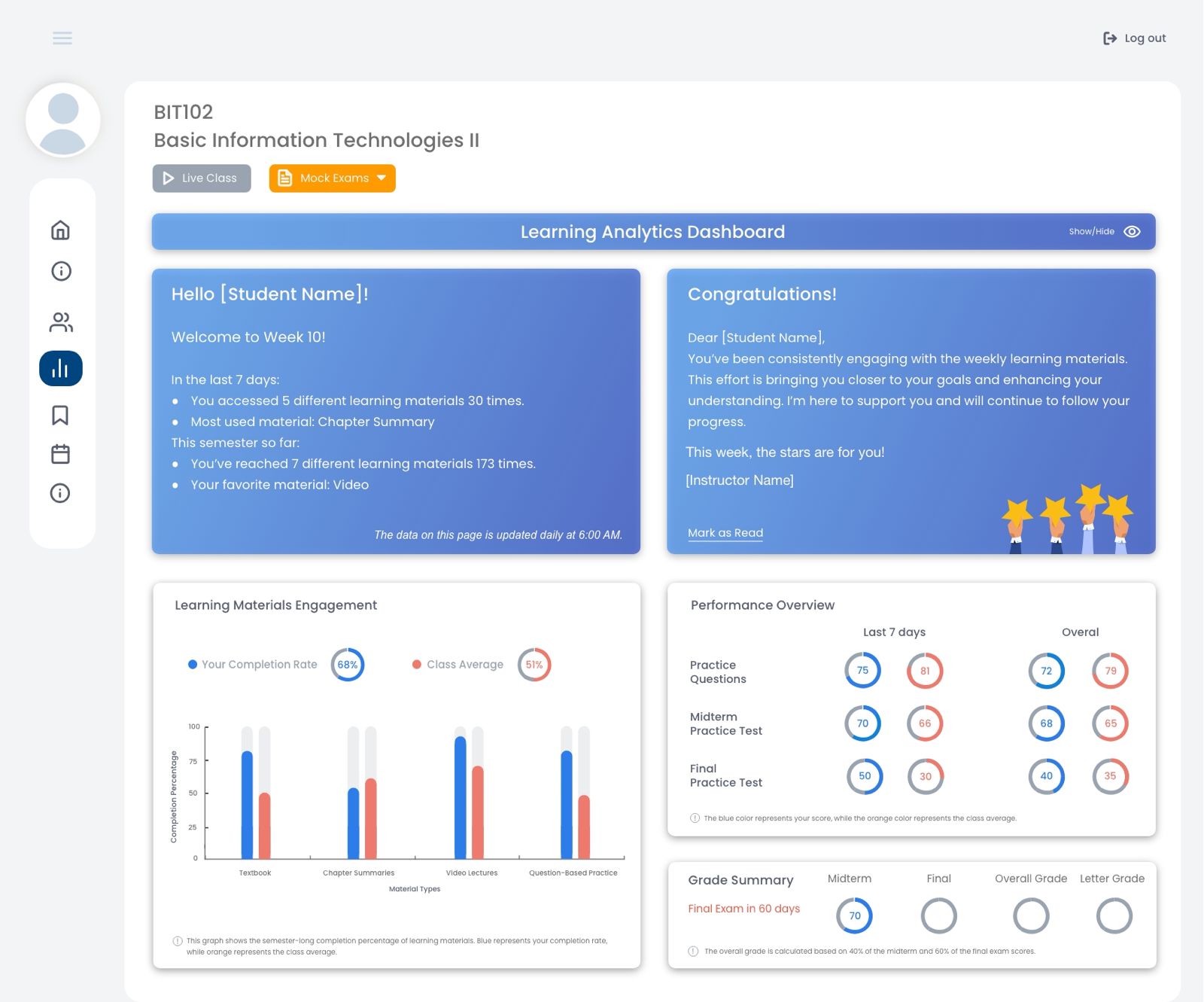

The LAD was integrated into the learning management system (LMS) and included five key components: (1) Welcome Greeting and Activity Synopsis, (2) Learning Materials Engagement, (3) Feedback Messages, (4) Performance Overview, and (5) Grade Summary. The LAD was prominently displayed at the top of the course interface to encourage interaction [17].

Using a quantitative, control-group experimental design, the LAD application was implemented over one academic semester [18]. Participants (N = 26,753) were randomly assigned to either the experimental group (n = 13,377), which received the LAD intervention, or the control group (n = 13,376), which had no access [18]. To ensure group equivalence, the assignment was stratified based on learners’ previous semester performance in the Basic Information Technologies I (BIT101) course, considering learning material usage and final scores. This stratification aimed to balance material usage and academic performance across both groups. An independent samples t-test assessed the difference in learning material usage and final scores between the control and experimental groups. The results indicated no statistically significant difference between the two groups (p > .05) regarding learning material usage and final scores [17].
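The assignment procedure is described here only at a high level. As a minimal sketch of how stratified random assignment with a balance check could work, the Python snippet below groups learners into strata and randomizes within each stratum. It is illustrative only: the field names, the stratum definition in `demo_key` (including the cut-offs of 50 and 30), and the simulated learners are hypothetical and not taken from the study. The balance check uses a Welch t statistic, which is one variant of the independent samples t-test; the study does not state which variant was used.

```python
import math
import random
from collections import defaultdict
from statistics import mean, variance


def stratified_assign(learners, strata_key, seed=0):
    """Randomly split learners into two arms within each stratum.

    learners: list of dicts with an "id" field.
    strata_key: function mapping a learner to a hashable, sortable stratum label.
    Returns a dict mapping learner id -> "experimental" or "control".
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for learner in learners:
        strata[strata_key(learner)].append(learner["id"])

    assignment = {}
    for label in sorted(strata):
        ids = strata[label]
        rng.shuffle(ids)
        # Randomize which arm receives the extra learner in odd-sized strata.
        offset = rng.randrange(2)
        for i, learner_id in enumerate(ids):
            arm = "experimental" if (i + offset) % 2 == 0 else "control"
            assignment[learner_id] = arm
    return assignment


def welch_t(a, b):
    """Welch t statistic for the difference in means of two samples."""
    return (mean(a) - mean(b)) / math.sqrt(variance(a) / len(a) + variance(b) / len(b))


# Hypothetical learners with simulated prior-term grade and material usage.
rng = random.Random(1)
learners = [
    {"id": i, "prior_grade": rng.uniform(0, 100), "prior_usage": rng.randint(0, 60)}
    for i in range(1000)
]


def demo_key(learner):
    # Hypothetical strata: above/below arbitrary cut-offs on both prior measures.
    return (learner["prior_grade"] >= 50, learner["prior_usage"] >= 30)


assignment = stratified_assign(learners, demo_key)

experimental = [row["prior_grade"] for row in learners if assignment[row["id"]] == "experimental"]
control = [row["prior_grade"] for row in learners if assignment[row["id"]] == "control"]
print(len(experimental), len(control), round(welch_t(experimental, control), 3))
```

Because randomization happens inside each stratum, the two arms can differ in size by at most one learner per stratum, which keeps the prior measures used for stratification closely balanced by construction.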

Table 1: Age and sex distributions of participants by group.

| Group | Age: 25 and under | Age: 26-33 | Age: 34-41 | Age: 42 and above | Sex: Female | Sex: Male |
|---|---|---|---|---|---|---|
| Experimental Group (n = 13,377) | 6,548 (48.95%) | 3,808 (28.47%) | 1,914 (14.31%) | 1,106 (8.27%) | 7,241 (54.13%) | 6,135 (45.86%) |
| Control Group (n = 13,376) | 6,441 (48.15%) | 3,868 (28.92%) | 1,875 (14.02%) | 1,189 (8.89%) | 7,222 (53.99%) | 6,151 (45.99%) |

Table 2: Covariates and outcomes used in the statistical analyses.

| Covariate/Outcome | Type | Description |
|---|---|---|
| Covariates | | |
| High Grade | Binary | Indicates whether the learner’s grade was above the mean in the previous term’s BIT101 course (1 = High-grade, 0 = Low-grade) |
| High Access | Binary | Indicates whether the learner’s access to learning materials was above average in the previous term’s BIT101 course (1 = High-access, 0 = Low-access) |
| Sex | Binary | Reported sex of the learner (1 = Female, 0 = Male) |
| Age | Continuous | Age of the learner in completed years at the start of the semester |
| First-Year | Binary | Indicates the student’s academic year status (1 = First-year student, 0 = Upper-year student) |
| Outcomes | | |
| Final Grade | Continuous | Final academic grade of the learner in the BIT102 course on a 0-100 scale |
| Materials Usage | Continuous | Number of learning materials completed by the learner in the BIT102 course |

Following the LAD intervention in the BIT102 course over one semester, an independent samples t-test was conducted to evaluate statistically significant differences between the experimental and control groups in terms of login frequency, number of days logged in, learning material usage, and final scores [17]. The t-test results revealed statistically significant differences in all variables (p < .001), with higher values consistently observed in the experimental group. However, despite these significant differences, the effect sizes for each variable indicated minimal practical significance [17]. The ATE analysis conducted in this research revealed positive effects of the intervention on learning outcomes. These results motivated this follow-up analysis aimed at investigating heterogeneous treatment effects.

3.2 Data and Measures

This study was conducted for one semester in an online undergraduate course. A control-group experimental design was employed. Participants (N = 26,753) were randomly assigned to either the experimental group (n = 13,377), which received the LAD intervention, or the control group (n = 13,376). Table 1 shows participant age and sex distributions.

In the present study, data from learners with invalid exam scores were excluded from the analysis. The final dataset comprised 26,686 learners. Table 2 summarizes the covariates and outcomes utilized in the statistical analyses. In addition to the covariates presented in Table 2, we examined the effects of departmental affiliation and occupational groups. However, no significant heterogeneity was detected across these categories.

3.3 Heterogeneous Treatment Effect Analyses

We employed HTE analysis to examine how the LAD intervention affected different student subgroups [6, 3]. Unlike methods that assume uniform average treatment effects, HTE analysis captures differential impacts based on learner characteristics, such as prior academic performance or engagement levels. This approach enabled us to identify which subgroups benefited most from the intervention, informing targeted designs for learning analytics tools. In this section, we outline the algorithms and statistical techniques employed, including regression-based interaction term modeling, robust statistical corrections, and exploratory steps toward implementing causal forest models.

Algorithms and Techniques: We implemented HTE analyses using the following methods:

- Interaction Term Modeling with OLS Regression: To assess treatment effects across subgroups, we used Ordinary Least Squares (OLS) regression models with interaction terms. Specifically, we estimated a separate model for each covariate of interest, where each model included an interaction between the treatment indicator and the respective covariate, to identify subgroup-specific heterogeneity. The regression model is specified as:

\begin{align} Y = \beta _0 + \beta _1 T + \beta _2 X + \beta _3 (T \times X) + \epsilon \label {eq:linear_hte} \end{align}

where \(T\) is the treatment indicator (LAD intervention), \(X\) is the student covariate (e.g., high grade status or high access to learning materials, as defined in Table 2), \(Y\) is the outcome variable (learning material usage or final grade), and \(\epsilon \) is the error term. We refer to \(\beta _0\) as the intercept, \(\beta _1\) as the treatment effect (the effect of the LAD when \(X = 0\)), \(\beta _2\) as the covariate main effect (the effect of \(X\) in the control group), and \(\beta _3\) as the treatment/covariate interaction effect.

- Model Validation and Statistical Corrections: We used robust standard errors (HC2) to account for heteroskedasticity. To adjust for multiple hypothesis testing, we applied the Romano-Wolf correction to the p-values of the interaction terms, ensuring statistical validity. Cross-validation and bootstrapping techniques were used to validate the models and generate null distributions for the Romano-Wolf correction, further enhancing the robustness of our results.

- Exploratory Use of Causal Forest (CRF): We employed Causal Forests (CRF) [30] as a complementary non-parametric method to explore complex, nonlinear patterns of treatment effect heterogeneity beyond those captured by linear interaction models. Using the grf package [24], we estimated individual-level treatment effects and computed Augmented Inverse Probability Weighting (AIPW) scores to derive group-level heterogeneity estimates. This approach allowed us to validate and triangulate findings from traditional OLS models while leveraging the robustness and flexibility of CRF. Although full CRF results are not reported, the method served as a sensitivity check and supports the reliability of our subgroup analyses.
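For a binary covariate, the interaction model listed above is saturated, so its OLS coefficients can be recovered directly from the four treatment-by-covariate cell means, and the HC2 variance of each cell mean reduces to the sample variance (with an n - 1 denominator) divided by the cell size. The Python sketch below uses this equivalence on simulated data. It is an illustration under stated assumptions, not the study's code: the function name is hypothetical, and the coefficient values, sample size, and noise level are arbitrary choices. It does not cover continuous covariates such as age, which need a general regression routine.

```python
import math
import random
from statistics import mean, variance


def interaction_ols_binary(y, t, x):
    """Fit Y = b0 + b1*T + b2*X + b3*(T*X) for binary T and X via cell means.

    Returns a dict mapping coefficient name -> (estimate, HC2 standard error).
    """
    cells = {(a, b): [] for a in (0, 1) for b in (0, 1)}
    for yi, ti, xi in zip(y, t, x):
        cells[(ti, xi)].append(yi)

    m = {key: mean(vals) for key, vals in cells.items()}
    # In a saturated model, the HC2 variance of a cell mean is s^2 / n.
    v = {key: variance(vals) / len(vals) for key, vals in cells.items()}

    estimates = {
        "intercept": m[(0, 0)],
        "treatment": m[(1, 0)] - m[(0, 0)],
        "covariate": m[(0, 1)] - m[(0, 0)],
        "interaction": (m[(1, 1)] - m[(0, 1)]) - (m[(1, 0)] - m[(0, 0)]),
    }
    variances = {
        "intercept": v[(0, 0)],
        "treatment": v[(1, 0)] + v[(0, 0)],
        "covariate": v[(0, 1)] + v[(0, 0)],
        "interaction": sum(v.values()),
    }
    return {name: (estimates[name], math.sqrt(variances[name])) for name in estimates}


# Simulated data with assumed (hypothetical) coefficients.
rng = random.Random(42)
n = 4000
t = [rng.randrange(2) for _ in range(n)]
x = [rng.randrange(2) for _ in range(n)]
y = [20.0 + 1.5 * ti + 7.5 * xi + 2.5 * ti * xi + rng.gauss(0, 2) for ti, xi in zip(t, x)]

fit = interaction_ols_binary(y, t, x)
for name, (estimate, se) in fit.items():
    print(f"{name:12s} estimate={estimate:8.4f}  HC2 SE={se:.4f}  t={estimate / se:8.3f}")
```

The interaction row is a difference-in-differences of cell means: the treatment effect among learners with X = 1 minus the treatment effect among learners with X = 0.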

Implementation: We conducted all analyses in R. The outcomes assessed include students’ usage of learning materials and their final grades. By combining traditional statistical methods (OLS with interaction terms) with exploratory machine learning approaches (CRF), our approach lays the groundwork for a comprehensive understanding of how the LAD intervention differentially affects student subgroups.
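As a rough illustration of how AIPW scores yield group-level effect estimates, the following Python sketch computes one doubly robust score per learner and averages the scores within subgroups. It is not the grf implementation and was not part of the analyses, which were run in R. Several pieces are assumptions made for the example: the treatment probability is fixed at 0.5 to reflect balanced random assignment, the forest-based outcome predictions are replaced by simple arm-by-subgroup means, and the data are simulated with hypothetical effect sizes.

```python
import random
from statistics import mean


def aipw_scores(y, t, mu0, mu1, e=0.5):
    """Per-learner AIPW scores.

    mu0[i], mu1[i]: predicted outcome for learner i under control / treatment.
    e: probability of treatment (0.5 assumed for a balanced randomized design).
    """
    return [
        m1 - m0 + ti * (yi - m1) / e - (1 - ti) * (yi - m0) / (1 - e)
        for yi, ti, m0, m1 in zip(y, t, mu0, mu1)
    ]


def group_average(values, groups):
    """Average the values within each group label."""
    return {
        label: mean(v for v, gi in zip(values, groups) if gi == label)
        for label in sorted(set(groups))
    }


# Simulated, hypothetical data: binary subgroup g and randomized treatment t.
rng = random.Random(7)
n = 2000
g = [rng.randrange(2) for _ in range(n)]
t = [rng.randrange(2) for _ in range(n)]
# Assumed effects: 3.0 in subgroup 0 and 1.0 in subgroup 1.
y = [50 + 10 * gi + (3.0 - 2.0 * gi) * ti + rng.gauss(0, 5) for gi, ti in zip(g, t)]

# Stand-in outcome model: mean outcome per (arm, subgroup) cell.
cell_mean = {
    (a, b): mean(yi for yi, ti, gi in zip(y, t, g) if ti == a and gi == b)
    for a in (0, 1)
    for b in (0, 1)
}
mu0 = [cell_mean[(0, gi)] for gi in g]
mu1 = [cell_mean[(1, gi)] for gi in g]

scores = aipw_scores(y, t, mu0, mu1)
effects = group_average(scores, g)
print({label: round(value, 2) for label, value in effects.items()})
```

With cell means as the outcome model, the group average of the scores coincides with the within-subgroup difference in means; a more flexible outcome model that uses additional covariates would generally give a different, typically more precise, estimate.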

4. RESULTS

We present results of the heterogeneous treatment effects analysis of the LAD with respect to learners’ total learning material usage and final grades based on covariates: sex, age, first-year status, prior material access level, and prior academic achievement level. This section presents a detailed examination of the results in the context of the research questions, focusing on the HTEs of the LAD intervention and the learner characteristics associated with these effects.

4.1 HTEs on Learning Material Usage

Table 3 shows the treatment (\(\hat {\beta }_1\)), main (\(\hat {\beta }_2\)), and interaction effects (\(\hat {\beta }_3\)) on learning material usage for the covariates sex, high access, high grade, age, and first-year status.

Table 3: Treatment, main, and interaction effects on learning material usage.

| Covariate | Effect | Estimate | SE | t-value | p-value | Adj. p |
|---|---|---|---|---|---|---|
| Sex (Female) | Treatment (\(\hat {\beta }_1\)) | 1.9400 | 0.2380 | 8.1525 | <0.001*** | - |
| | Main Effect (\(\hat {\beta }_2\)) | 0.5246 | 0.1829 | 2.8681 | 0.004** | - |
| | Interaction (\(\hat {\beta }_3\)) | 0.7733 | 0.3342 | 2.3142 | 0.021* | 0.024 |
| Age | Treatment (\(\hat {\beta }_1\)) | 0.6791 | 0.7828 | 0.8675 | 0.3857 | - |
| | Main Effect (\(\hat {\beta }_2\)) | 0.1940 | 0.0153 | 12.7129 | <0.001*** | - |
| | Interaction (\(\hat {\beta }_3\)) | 0.0607 | 0.0294 | 2.0672 | 0.0387* | 0.0025 |
| High Access | Treatment (\(\hat {\beta }_1\)) | 1.3667 | 0.0954 | 14.3189 | <0.001*** | - |
| | Main Effect (\(\hat {\beta }_2\)) | 7.6550 | 0.2177 | 35.1563 | <0.001*** | - |
| | Interaction (\(\hat {\beta }_3\)) | 2.5855 | 0.3955 | 6.5365 | <0.001*** | <0.001 |
| High Grade | Treatment (\(\hat {\beta }_1\)) | 2.3199 | 0.3370 | 6.8829 | <0.001*** | - |
| | Main Effect (\(\hat {\beta }_2\)) | -0.4617 | 0.2219 | -2.0807 | 0.037* | - |
| | Interaction (\(\hat {\beta }_3\)) | 0.0528 | 0.3884 | 0.1360 | 0.8918 | 0.894 |
| First-Year | Treatment (\(\hat {\beta }_1\)) | 2.3984 | 0.5071 | 4.7293 | <0.001*** | - |
| | Main Effect (\(\hat {\beta }_2\)) | -1.2156 | 0.2632 | -4.6187 | <0.001*** | - |
| | Interaction (\(\hat {\beta }_3\)) | -0.0375 | 0.5357 | -0.0699 | 0.9443 | 0.9308 |

Note. *p < .05, **p < .01, ***p < .001.

SE = Standard Error. Adjusted p-values (Adj. p) were calculated using the Romano-Wolf correction.
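The step-down logic behind Romano-Wolf adjusted p-values can be sketched as follows. This Python snippet is a simplified illustration, not the study's R implementation: it assumes that bootstrap draws of the t-statistics under the null hypothesis are already available, and the observed statistics and null draws shown are toy, hypothetical values rather than results from this study.

```python
def romano_wolf_adjust(t_obs, t_null):
    """Step-down max-|t| adjusted p-values.

    t_obs:  list of S observed t-statistics, one per hypothesis.
    t_null: list of B bootstrap draws, each a list of S null t-statistics.
    Returns adjusted p-values in the original hypothesis order.
    """
    n_draws = len(t_null)
    # Step through hypotheses from the largest to the smallest |t|.
    order = sorted(range(len(t_obs)), key=lambda s: abs(t_obs[s]), reverse=True)
    adjusted = [0.0] * len(t_obs)
    running_max = 0.0
    for rank, s in enumerate(order):
        remaining = order[rank:]  # hypotheses not yet stepped past
        exceed = sum(
            1
            for draw in t_null
            if max(abs(draw[r]) for r in remaining) >= abs(t_obs[s])
        )
        p_value = (exceed + 1) / (n_draws + 1)
        running_max = max(running_max, p_value)  # keep p-values monotone
        adjusted[s] = running_max
    return adjusted


# Toy, hypothetical inputs: three hypotheses and four bootstrap draws.
t_obs = [3.0, 1.0, 0.1]
t_null = [
    [0.5, 0.2, 0.3],
    [2.0, 0.1, 0.05],
    [3.5, 1.5, 0.2],
    [0.1, 0.9, 1.2],
]
adjusted = romano_wolf_adjust(t_obs, t_null)
print(adjusted)  # [0.4, 0.6, 0.8]
```

Comparing each statistic with the maximum over the hypotheses still under consideration is what controls the family-wise error rate while remaining less conservative than a Bonferroni correction when the test statistics are correlated.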

Across most models, the treatment coefficient (\(\hat {\beta }_1\)), which captures the LAD effect for the reference group of each covariate (learners with \(X = 0\)), was positive and statistically significant, indicating a consistent positive association with learning material usage. Significant effects were observed in the models with sex, high access, high grade, and first-year status (\(p < .001\) in all cases). In the age model, the treatment coefficient was not significant (\(p = 0.3857\)); because age is continuous, this coefficient corresponds to the extrapolated effect at age zero and should be read together with the age interaction rather than as an overall effect.

The results show a statistically significant main effect of sex (Estimate = 0.5246, SE = 0.1829, \(p < .01\)), indicating that female learners tend to engage with the learning materials more than male learners. The interaction between treatment and sex is also statistically significant (Estimate = 0.7733, SE = 0.3342, \(p < .05\), \(Adj. p < .05\)). This interaction suggests that the LAD intervention had a heterogeneous effect on total material usage. Specifically, female learners responded more positively to the intervention than male learners.

Age also showed a statistically significant main effect (Estimate = 0.1940, SE = 0.0153, \(p < .001\)) and interaction effect (Estimate = 0.0607, SE = 0.0294, \(p < .05\), \(Adj. p < .01\)). These findings suggest that as age increases, learners not only engage more with learning materials but also show relatively stronger responses to the LAD intervention, as indicated by the significant interaction effect.

Similarly, high access to learning materials, which indicates whether the learner’s access to materials was above average in the previous term’s BIT101 course, was associated with a strong and significant main effect (Estimate = 7.6550, SE = 0.2177, \(p < .001\)). The interaction between treatment and high access (Estimate = 2.5855, SE = 0.3955, \(p < .001\), Adj. \(p < .001\)) further demonstrates that the LAD intervention was particularly effective for learners who had high access in the previous term. These results suggest that high-level access to course materials in the prior term significantly moderated the treatment effect, enhancing the impact of the LAD intervention on total material usage.

The high-grade group indicated a statistically significant main effect (Estimate = -0.4617, SE = 0.2219, \(p < .05\)), indicating that learners with higher prior academic performance tended to engage less with the materials compared to those with lower performance. The interaction effect for the high-grade group (Estimate = 0.0528, SE = 0.3884, \(p > .05\), \(Adj. p > .05\)) indicates that there was no statistically significant difference in the effect of the treatment based on whether learners had high previous grades. This suggests that the LAD intervention did not affect learners with high grades differently from others.

For the first-year status covariate, there was a statistically significant main effect (Estimate = -1.2156, SE = 0.2632, \(p < .001\)), meaning that first-year students used the materials less than upper-year students. However, the non-significant interaction effect (Estimate = -0.0375, SE = 0.5357, \(p > .05\), \(Adj. p > .05\)) indicates that the LAD intervention’s effectiveness did not differ based on students’ academic year.

In summary, the findings suggest that the LAD intervention was generally more effective for learners who were female or had higher prior engagement with course materials. Additionally, age showed a significant moderating effect, suggesting that the intervention’s impact varied with age. These results highlight the moderating role of individual characteristics and established engagement patterns in shaping the effectiveness of learning analytics interventions.
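To make the size of these interactions concrete, the implied treatment effect for a learner with covariate value \(x\) can be computed from the point estimates in Table 3 as \(\hat {\beta }_1 + \hat {\beta }_3 x\). The worked values below are an illustration only: the notation \(\hat {\tau }(x)\) is introduced here for convenience, the ages 25 and 42 are arbitrary example values, and the calculation ignores sampling uncertainty. Effects are expressed in number of learning materials.

\begin{align}
\hat {\tau }(x) &= \hat {\beta }_1 + \hat {\beta }_3 x \\
\text {Sex:}\quad \hat {\tau }(\text {male}) &= 1.9400, \qquad \hat {\tau }(\text {female}) = 1.9400 + 0.7733 = 2.7133 \\
\text {High access:}\quad \hat {\tau }(\text {low}) &= 1.3667, \qquad \hat {\tau }(\text {high}) = 1.3667 + 2.5855 = 3.9522 \\
\text {Age:}\quad \hat {\tau }(25) &= 0.6791 + 0.0607 \times 25 = 2.1966, \qquad \hat {\tau }(42) = 0.6791 + 0.0607 \times 42 = 3.2285
\end{align}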

4.2 HTEs on Final Grades

Table 4 presents the treatment, main, and interaction effects on final grades for covariates including sex, high access, high grade, age, and first-year status.

Table 4: Treatment, main, and interaction effects on final grades.

| Covariate | Effect | Estimate | SE | t-value | p-value | Adj. p |
|---|---|---|---|---|---|---|
| Sex (Female) | Treatment (\(\hat {\beta }_1\)) | 1.0497 | 0.4269 | 2.5490 | 0.0139* | - |
| | Main Effect (\(\hat {\beta }_2\)) | 0.1901 | 0.3902 | 0.4873 | 0.6260 | - |
| | Interaction (\(\hat {\beta }_3\)) | -0.0769 | 0.5400 | -0.1425 | 0.8867 | 0.8799 |
| Age | Treatment (\(\hat {\beta }_1\)) | 1.6514 | 0.9449 | 1.7478 | 0.0805 | - |
| | Main Effect (\(\hat {\beta }_2\)) | 0.1085 | 0.0224 | 4.8404 | <0.001*** | - |
| | Interaction (\(\hat {\beta }_3\)) | -0.0218 | 0.0318 | -0.6853 | 0.4931 | 0.4933 |
| High Access | Treatment (\(\hat {\beta }_1\)) | 1.0712 | 0.3585 | 2.9881 | 0.0028** | - |
| | Main Effect (\(\hat {\beta }_2\)) | 5.6363 | 0.3726 | 15.1286 | <0.001*** | - |
| | Interaction (\(\hat {\beta }_3\)) | -0.1641 | 0.5175 | -0.3170 | 0.7512 | 0.7618 |
| High Grade | Treatment (\(\hat {\beta }_1\)) | 2.1774 | 0.6390 | 3.4076 | 0.0007*** | - |
| | Main Effect (\(\hat {\beta }_2\)) | 24.3270 | 0.4873 | 49.9253 | <0.001*** | - |
| | Interaction (\(\hat {\beta }_3\)) | -1.5633 | 0.6780 | -2.3056 | 0.0211* | 0.0042 |
| First-Year | Treatment (\(\hat {\beta }_1\)) | 0.3985 | 0.7253 | 0.5494 | 0.5827 | - |
| | Main Effect (\(\hat {\beta }_2\)) | 4.2218 | 0.5446 | 7.7518 | <0.001*** | - |
| | Interaction (\(\hat {\beta }_3\)) | 0.7019 | 0.7780 | 0.9022 | 0.3669 | 0.3177 |

Note. *p < .05, **p < .01, ***p < .001.

SE = Standard Error. Adjusted p-values (Adj. p) were calculated using the Romano-Wolf correction.

The LAD intervention was associated with statistically significant treatment effects on final grades in several models, namely those including sex (Estimate = 1.0497, SE = 0.4269, \(p = .0139\)), high access (Estimate = 1.0712, SE = 0.3585, \(p = .0028\)), and high grade (Estimate = 2.1774, SE = 0.6390, \(p = .0007\)). In each case, the treatment coefficient refers to the reference group of the covariate (learners with \(X = 0\)). No statistically significant treatment effect was observed in the age or first-year models.

The results indicate that the main effect of sex was not statistically significant (Estimate = 0.1901, SE = 0.3902, \(p > .05\)), indicating no significant difference in final grades between female and male learners. The interaction effect between treatment and sex was also not statistically significant (Estimate = -0.0769, SE = 0.5400, \(p > .05\), \(Adj. p > .05\)). This suggests that the LAD intervention did not have a heterogeneous effect on final grades based on sex.

Age demonstrated a statistically significant main effect (Estimate = 0.1085, SE = 0.0224, \(p < .001\)), suggesting that as age increases, learners tend to achieve higher final grades. However, the interaction between treatment and age did not reach statistical significance (Estimate = -0.0218, SE = 0.0318, \(p > .05\), \(Adj. p > .05\)), indicating that the effect of the LAD intervention on final grades did not differ significantly by age.

For the high-access group, there was a statistically significant main effect (Estimate = 5.6363, SE = 0.3726, \(p < .001\)), suggesting that learners who had higher access to learning materials in the previous term performed better in terms of final grades. However, the interaction effect between treatment and high access was not statistically significant (Estimate = -0.1641, SE = 0.5175, \(p > .05\), \(Adj. p > .05\)), indicating that the LAD intervention did not have a differential effect on final grades based on prior access to materials.

The high-grade covariate showed a statistically significant main effect (Estimate = 24.3270, SE = 0.4873, \(p < .001\)), indicating that learners with higher grades in the previous term’s BIT101 course tended to achieve significantly higher final grades in the current term. Notably, a significant interaction effect was observed for the high-grade covariate (Estimate = -1.5633, SE = 0.6780, \(p < .05\), \(Adj. p < .01\)). This finding suggests that learners with lower prior academic performance benefited more from the LAD intervention, underscoring its potential to support underperforming students.
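As a worked illustration of this interaction, the implied treatment effects on final grades for the two prior-performance groups follow directly from the point estimates in Table 4. The notation \(\hat {\tau }\) is introduced here only for convenience, and the calculation ignores sampling uncertainty. Effects are in points on the 0-100 grade scale.

\begin{align}
\hat {\tau }(\text {low prior grade}) &= \hat {\beta }_1 = 2.1774 \\
\hat {\tau }(\text {high prior grade}) &= \hat {\beta }_1 + \hat {\beta }_3 = 2.1774 - 1.5633 = 0.6141
\end{align}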

Lastly, the first-year status covariate demonstrated a statistically significant main effect (Estimate = 4.2218, SE = 0.5446, \(p < .001\)), indicating that first-year learners tended to achieve higher final grades than upper-year learners. However, the interaction effect was not statistically significant (Estimate = 0.7019, SE = 0.7780, \(p > .05\), \(Adj. p > .05\)), indicating that the LAD intervention’s effect on final grades did not significantly differ based on first-year status.

Overall, the LAD intervention showed a broadly positive effect on final grades, particularly for students with lower prior academic performance. However, it did not produce statistically significant differential effects by sex, age, prior access, or academic year, suggesting relatively uniform academic impact across these subgroups.

5. DISCUSSION

The results of this study reveal the HTEs of LAD interventions on different learner profiles in an online learning environment. In response to Research Question 1, the results indicate significant heterogeneity in the effects of the LAD intervention on learning material usage and final grades, based on learner characteristics. This aligns with the argument by Leite et al. [14] and Lim et al. [15] that the effects of educational interventions can vary significantly across different learner populations. The observed heterogeneity underscores the importance of considering individual learner characteristics in the design, implementation, and evaluation of LAD interventions.

Regarding Research Question 2, the results show that HTEs are associated with several learner characteristics, including sex, age, prior access to course materials, and prior academic performance, whereas first-year status showed no significant interaction for either outcome. For example, female learners and older learners (specifically those aged 42 and above) responded more positively to the LAD intervention in terms of learning material usage. These results support and extend the relationships between learner characteristics and LAD usage patterns reported by Chen et al. [5] and Wang et al. [31]. Additionally, learners with higher previous access to course materials demonstrated a significantly stronger response to the intervention in terms of accessing learning materials. This result may be related to their established study habits and self-regulation skills. However, prior academic performance and first-year status did not show significant interaction effects on learning material usage.

When examining final grades, the intervention did not produce a statistically significant interaction effect for sex, age, prior access to learning materials, or first-year status, suggesting that its effects on grades were not heterogeneous across these groups. These findings align with the perspective of Park and Jo [19] and Kaliisa et al. [10], who suggest that LADs have the potential to enhance engagement with online learning environments rather than directly influencing academic achievement. Taken together, the effects of LAD interventions appear more pronounced on behavioral outcomes (e.g., learning material usage) than on academic performance. This underscores the potential value of optimizing LAD designs to better support diverse learner profiles and address variability in learning behaviors. However, a significant interaction was observed for prior academic performance, indicating that learners with lower past performance benefited more from the intervention. This heterogeneous treatment effect suggests that the LAD intervention may help narrow performance gaps in online learning environments. While this result contrasts with some previous studies, such as Kim et al. [12], it emphasizes the need for a deeper exploration of the complex relationships between learner profiles, LAD interventions, and academic outcomes. This highlights the challenges of developing universal solutions for all learners, especially in online learning environments. Future research should focus on developing more adaptive and personalized interventions to enhance learning outcomes across diverse learner populations.

Limitations: This study’s analyses are based on data from a single course (Information Technologies) and a single academic year. Future research is needed to assess the robustness of the findings across different subjects and geographic regions. Additionally, students were not required to use the LMS and could download materials for offline study. Because data on offline study are unavailable, our measures of learning material usage rely solely on LMS log data and may therefore underestimate intervention effects. Future research will benefit from collecting and analyzing more comprehensive data that capture both online and offline learning behaviors. Such data will provide deeper insights into students’ learning processes over time (e.g., learning duration and motivation) and contribute to a more holistic understanding of the effects of educational technology interventions in distance education.

6. CONCLUSION

This study examined how the effects of an LAD intervention within an online learning platform can vary significantly across a diverse population of learners. The findings indicate that while LADs can improve overall learner engagement and academic performance, their effectiveness is moderated by individual characteristics such as sex, age, prior access to course materials, and previous academic performance. The intervention was found to be especially beneficial for female learners, older learners, and those with lower prior academic achievement.

These results highlight the importance of considering learner heterogeneity in the design and implementation of LADs. Personalized learning analytics tools hold promise for fostering engagement and supporting academically at-risk learners. Future research should explore how integrating motivational and self-regulatory components into LAD design can enhance their effectiveness and promote equitable learning outcomes.

7. ACKNOWLEDGMENTS

This study was supported in part by the Scientific and Technological Research Council of Türkiye (TÜBİTAK) under the 1001 - Scientific and Technological Research Projects Funding Program, grant number 118K100. The findings and opinions expressed in this article are those of the authors and do not necessarily reflect the views of TÜBİTAK. The authors would also like to express their gratitude to the Anadolu University Open Education System, particularly its Learning Management System team, academic personnel, and Learning Technologies Research and Development unit, for their valuable support and for providing access to the learning environment and data used in this study.

References

- M. I. Alam, L. Malone, L. Nadolny, M. Brown, and C. Cervato. Investigating the impact of a gamified learning analytics dashboard: Student experiences and academic achievement. Journal of Computer Assisted Learning, 39(5):1436–1449, 2023.

- Anadolu University. Anadolum ecampus learning management system [anadolum ekampüs Öğrenme yönetim sistemi]. https://ekampus.anadolu.edu.tr/, 2024. Accessed: 2024-09-17.

- S. Athey and G. W. Imbens. The state of applied econometrics: Causality and policy evaluation. Journal of Economic Perspectives, 31(2):3–32, 2017.

- R. Bodily and K. Verbert. Review of research on student-facing learning analytics dashboards and educational recommender systems. IEEE Transactions on Learning Technologies, 10(4):405–418, 2017.

- L. Chen, X. Geng, M. Lu, A. Shimada, and M. Yamada. How students use learning analytics dashboards in higher education: A learning performance perspective. Sage Open, 13(3), 2023. PMID: 37038434.

- G. W. Imbens and D. B. Rubin. Causal Inference for Statistics, Social, and Biomedical Sciences: An Introduction. Cambridge University Press, Cambridge, UK, 2015.

- I. Jivet, M. Scheffel, H. Drachsler, and M. Specht. Awareness is not enough: Pitfalls of learning analytics dashboards in the educational practice. In Proceedings of the 12th European Conference on Technology Enhanced Learning, pages 82–96, Cham, 2017. Springer International Publishing.

- I. Jivet, M. Scheffel, M. Schmitz, S. Robbers, M. Specht, and H. Drachsler. From students with love: An empirical study on learner goals, self-regulated learning and sense-making of learning analytics in higher education. The Internet and Higher Education, 47:100758, 2020.

- I. Jivet, M. Scheffel, M. Specht, and H. Drachsler. License to evaluate: Preparing learning analytics dashboards for educational practice. In Proceedings of the 8th International Conference on Learning Analytics and Knowledge, LAK ’18, pages 31–40, New York, NY, USA, 2018. Association for Computing Machinery.

- R. Kaliisa, K. Misiejuk, S. López-Pernas, M. Khalil, and M. Saqr. Have learning analytics dashboards lived up to the hype? A systematic review of impact on students’ achievement, motivation, participation and attitude. In Proceedings of the 14th Learning Analytics and Knowledge Conference, LAK ’24, pages 1–10, New York, NY, USA, Mar. 2024. Association for Computing Machinery.

- F. S. Kia, S. D. Teasley, M. Hatala, S. A. Karabenick, and M. Kay. How patterns of students dashboard use are related to their achievement and self-regulatory engagement. In Proceedings of the Tenth International Conference on Learning Analytics & Knowledge, LAK ’20, pages 340–349, New York, NY, USA, 2020. Association for Computing Machinery.

- J. Kim, I.-H. Jo, and Y. Park. Effects of learning analytics dashboard: analyzing the relations among dashboard utilization, satisfaction, and learning achievement. Asia Pacific Education Review, 17:13–24, 2016.

- S. R. Künzel, J. S. Sekhon, P. J. Bickel, and B. Yu. Metalearners for estimating heterogeneous treatment effects using machine learning. Proceedings of the National Academy of Sciences, 116(10):4156–4165, 2019.

- W. L. Leite, H. Kuang, Z. Shen, N. Chakraborty, G. Michailidis, S. D’Mello, and W. Xing. Heterogeneity of treatment effects of a video recommendation system for algebra. In Proceedings of the Ninth ACM Conference on Learning @ Scale, L@S ’22, pages 12–23, New York, NY, USA, 2022. Association for Computing Machinery.

- L.-A. Lim, S. Gentili, A. Pardo, V. Kovanović, A. Whitelock-Wainwright, D. Gašević, and S. Dawson. What changes, and for whom? A study of the impact of learning analytics-based process feedback in a large course. Learning and Instruction, 72:101202, 2021.

- W. Matcha, N. A. Uzir, D. Gašević, and A. Pardo. A systematic review of empirical studies on learning analytics dashboards: A self-regulated learning perspective. IEEE Transactions on Learning Technologies, 13(2):226–245, 2020.

- A. Ozturk. Açık ve uzaktan öğrenmede öğrenenlerin davranış örüntülerinin ve profillerinin modellenmesi, akademik performanslarının tahmin edilmesi ve performans değerlendirme panelinin etkilerinin incelenmesi [Modeling learners’ behavioral patterns and profiles, predicting the academic performance and investigating the effects of a dashboard in open and distance learning]. PhD thesis, Anadolu Üniversitesi, 2022.

- A. Ozturk and A. T. Kumtepe. Learning analytics-based dashboard application in open distance learning system [açıköğretim sisteminde öğrenme analitikleri tabanlı performans değerlendirme paneli uygulaması]. In A. Z. Özgür, K. Çekerol, S. Koçdar, and İ. Kayabaş, editors, 40 Years with Open Education: Practices and Research [Açıköğretim ile 40 Yıl: Uygulamalar ve Araştırmalar], pages 405–430. Anadolu University Publications, Eskişehir, 2023.

- Y. Park and I.-H. Jo. Development of the learning analytics dashboard to support students’ learning performance. Journal of Universal Computer Science, 21(1):110–133, 2015.

- I. Rets, C. Herodotou, V. Bayer, M. Hlosta, and B. Rienties. Exploring critical factors of the perceived usefulness of a learning analytics dashboard for distance university students. International Journal of Educational Technology in Higher Education, 18:1–23, 2021.

- B. A. Schwendimann, M. J. Rodriguez-Triana, A. Vozniuk, L. P. Prieto, M. S. Boroujeni, A. Holzer, D. Gillet, and P. Dillenbourg. Perceiving learning at a glance: A systematic literature review of learning dashboard research. IEEE Transactions on Learning Technologies, 10(1):30–41, 2016.

- T. Susnjak, G. S. Ramaswami, and A. Mathrani. Learning analytics dashboard: a tool for providing actionable insights to learners. International Journal of Educational Technology in Higher Education, 19:1–23, 2022.

- J. P.-L. Tan, E. Koh, C. R. Jonathan, and S. Yang. Learner dashboards a double-edged sword? Students’ sense-making of a collaborative critical reading and learning analytics environment for fostering 21st century literacies. Journal of Learning Analytics, 4(1):117–140, 2017.

- J. Tibshirani, S. Athey, E. Sverdrup, and S. Wager. grf: Generalized random forests. R package version 2.0.2, 2021.

- C. Tran and E. Zheleva. Improving data-driven heterogeneous treatment effect estimation under structure uncertainty, 2022.

- Anadolu University. About the open education system. https://www.anadolu.edu.tr/en/open-education/, 2024. Accessed: 2024-09-01.

- N. Valle, P. Antonenko, K. Dawson, and A. C. Huggins-Manley. A systematic literature review on learner-facing learning analytics dashboards. British Journal of Educational Technology, 52:1724–1748, 2021.

- K. Verbert, E. Duval, J. Klerkx, S. Govaerts, and J. L. Santos. Learning analytics dashboard applications. American Behavioral Scientist, 57(10):1500–1509, 2013.

- K. Verbert, S. Govaerts, E. Duval, J. L. Santos, F. Van Assche, G. Parra, and J. Klerkx. Learning dashboards: an overview and future research opportunities. Personal and Ubiquitous Computing, 18:1499–1514, 2014.

- S. Wager and S. Athey. Estimation and inference of heterogeneous treatment effects using random forests. Journal of the American Statistical Association, 113(523):1228–1242, 2018.

- H. Wang, T. Huang, Y. Zhao, and S. Hu. The impact of dashboard feedback type on learning effectiveness, focusing on learner differences. Sustainability, 15(5):4474, 2023.

- Y. Xie, J. E. Brand, and B. Jann. Estimating heterogeneous treatment effects with observational data. Sociological Methodology, 42(1):314–347, 2012.

© 2025 Copyright is held by the author(s). This work is distributed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.