ABSTRACT

This study investigates the efficacy of Quest-Genius, an AI-powered personalised learning platform, in bridging the after-school achievement gap among secondary school students in Punjab, India. Despite high smartphone penetration (93.5% as per ASER, 2024), access to quality after-school tutoring remains limited for students from low-income households. Quest-Genius leverages GPT-4 fine-tuned with NCERT curriculum content to offer personalised academic support, including board exam practice tests, OCR-based handwritten answer evaluation, and open digital access. The platform aims to provide an equitable, scalable alternative to traditional tuition, particularly in rural and under-resourced settings. Through a proposed quasi-experimental study design comparing different student groups, the paper aims to assess the effectiveness and accessibility of AI-driven solutions in improving academic outcomes and reducing educational inequity.

Keywords

INTRODUCTION

Globally, 65% of secondary students rely on after-school tuition to supplement classroom learning, a necessity driven by overcrowded classrooms and standardised evaluation pressures [1]. However, in low-income regions, 40% of families cannot afford private tutoring, exacerbating educational inequities [2]. In India, this disparity is particularly pronounced, with students from affluent families scoring 25% higher on board exams due to access to premium coaching centres [3].

Despite the demand for supplementary education, traditional after-school tutoring systems face critical limitations. High costs make quality tutoring inaccessible to low-income students, with average tuition fees of ₹3,000–5,000 per month in urban India [4]. Geographic constraints further widen the gap, as rural students often travel over two hours daily to access qualified tutors. Moreover, conventional teaching methods follow a rigid, one-size-fits-all approach that fails to address individual learning gaps.

To bridge these disparities, we introduce Quest-Genius, an AI-powered mobile and web-based platform designed to democratise access to personalised tutoring. Quest-Genius leverages generative AI tutoring, powered by GPT-4, to provide real-time, step-by-step explanations tailored to student queries (e.g., solving science problems). It combines personalised learning, an active chatbot for clearing doubts, and quick evaluation of and feedback on handwritten assessments. Additionally, its cost-effective model keeps the platform affordable, significantly reducing the financial burden compared to traditional tuition centres.

Addressing the Indian Educational Landscape

There is a growing need for scalable, AI-driven tutoring solutions in India, where overcrowded classrooms and economic disparities continue to hinder student success. In Punjab, for instance, while 93.5% of households own smartphones, only 35.1% possess basic computer literacy [5]. This paradox of high digital access but low educational equity highlights the gap that Quest-Genius aims to bridge.

LITERATURE REVIEW

Secondary education systems worldwide face significant challenges, particularly in providing equitable access to quality learning resources. Overcrowded classrooms and limited teacher-student interaction create gaps in conceptual understanding, prompting 65% of students globally to seek after-school tutoring [1]. However, access to private coaching remains a privilege for wealthier students, as 60% of Indian families cannot afford private tuition [4]. The problem is further exacerbated in rural areas, where students have 70% fewer tutoring centres compared to urban regions, leading to long travel times and reduced learning opportunities [6]. Although traditional tutoring has proven effective, increasing students' exam scores by 12% on average [7], its high cost and geographic limitations prevent equitable access. Students from lower-income backgrounds, particularly in rural areas, are left behind, creating an urgent need for scalable, cost-effective, and personalised after-school learning solutions. AI-driven platforms, with their ability to provide on-demand, personalised instruction, present a promising alternative. However, their effectiveness in replacing traditional tuition models and supporting independent student learning remains an area requiring further investigation.

AI in Education

AI in education has evolved through a continuous progression of learner modeling approaches. Early Intelligent Tutoring Systems (ITS), such as Carnegie Learning’s MATHia, used model-tracing and cognitive modeling to provide adaptive learning experiences, offering real-time feedback based on student performance [8]. These systems dynamically tracked and adapted to students' understanding, laying the groundwork for later developments like Bayesian Knowledge Tracing (BKT), Performance Factors Analysis (PFA), and constraint-based modeling in systems such as KERMIT and ALEKS.

Dialog-based tutors like AutoTutor [9] introduced natural language processing to address misconceptions, while generative AI tutors (e.g., GPT-4, Khanmigo) now enable interactive, real-time problem-solving. However, challenges such as factual inaccuracies (15% errors in GPT-4’s math responses) and reliance on internet access [10] persist. While these tools enhance accessibility and personalisation, they must prioritise synergy with human teachers to maintain pedagogical reliability and foster collaborative learning [11].

AI-Driven Self-Paced Learning and Exam Preparation

After-school AI tutoring must support self-paced learning, allowing students to progress independently. Mastery-based models like Bloom’s [12] have proven effective, ensuring 90% competency before moving forward and reducing achievement gaps by 20% in mathematics [13]. Adaptive practice tests further enhance long-term retention by 12–18% [14], making AI a valuable tool for reinforcing concepts beyond rote memorisation.

However, most AI platforms focus on multiple-choice and digital quizzes, lacking structured assessments that reflect real board exam conditions. This gap leaves students unprepared for handwritten responses, time management, and formal answer formatting. To ensure comprehensive exam readiness, AI-driven learning must be integrated with paper-based evaluation techniques.

The Importance of Personalised Feedback and AI-Driven Assessment

Personalised feedback is key to improving student outcomes, with real-time responses shown to significantly boost performance [15]. AI tutors excel by offering instant explanations, adaptive error correction, and continuous performance tracking—advantages over human tutors with limited availability. However, AI tools often rely solely on digital assessments, lacking authentic exam-like practice.

Given the importance of handwritten responses in board exams, paper-based assessment is critical for building writing speed, answer structuring, and conceptual clarity. Lee and Yoon [16] found that OCR-based AI grading improved test performance by 30% in South Korean schools. This highlights the need to integrate AI-powered paper-based evaluation into digital platforms for comprehensive exam readiness.

Research Gaps

Despite significant advancements in AI-driven education, several critical research gaps remain. Most studies on AI in education have focused on classroom-based learning, with limited research on its effectiveness in after-school tutoring environments. While adaptive learning platforms have demonstrated success in improving student engagement and retention, few studies explore their direct impact on board exam performance. Additionally, existing research lacks insights into how AI tutors can bridge the gap between digital learning and real-world exam assessments.

Another significant gap lies in student engagement and motivation. While AI-driven personalisation features can enhance learning experiences, long-term engagement with AI tutors remains underexplored. Many platforms struggle with student retention, as self-paced learning requires intrinsic motivation and sustained interest. Understanding how AI-driven personalisation impacts student engagement over time is crucial to developing effective and scalable after-school learning solutions.

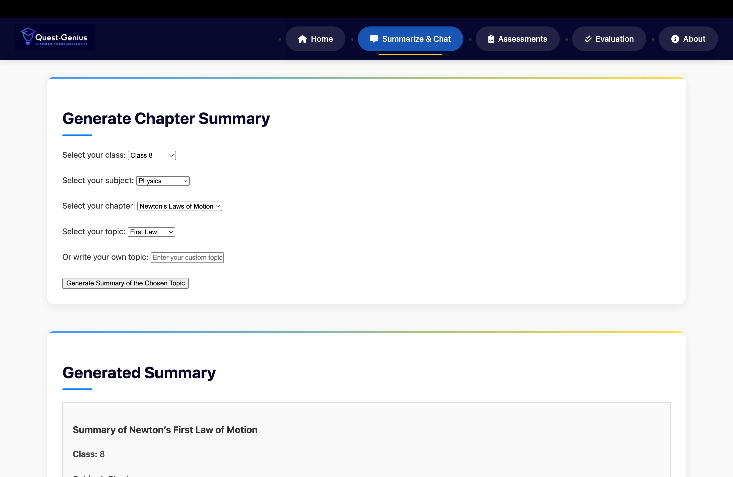

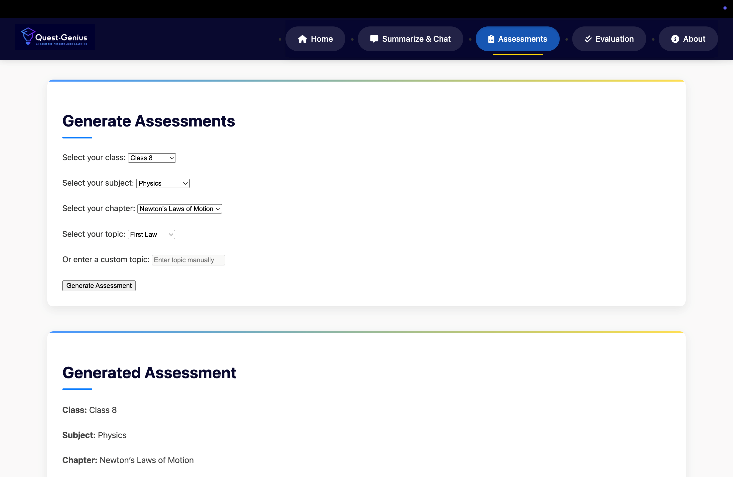

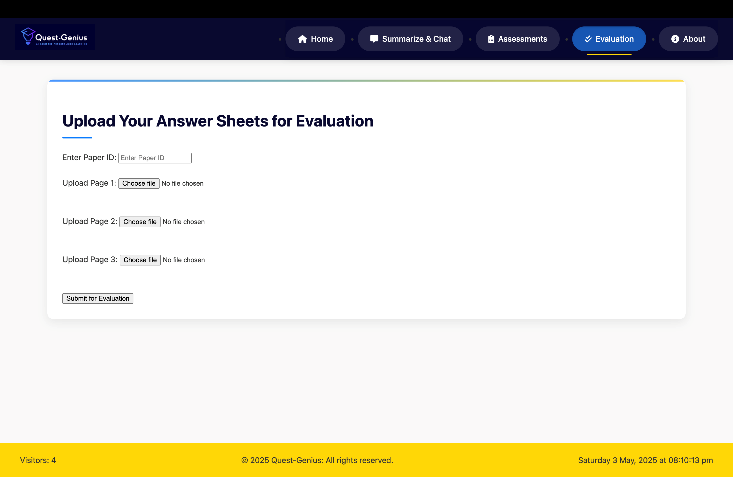

QUEST-GENIUS

Quest-Genius offers a scalable, AI-driven solution to bridge after-school learning gaps in secondary education. Leveraging GPT-4 and a curated database of 500+ CBSE exam problems, the platform delivers adaptive, curriculum-aligned tutoring through Retrieval-Augmented Generation (RAG). This grounds science explanations in curriculum content, while rule-based filters block off-topic queries and flag low-confidence answers (those below an 85% confidence threshold) for human review. Unlike conventional AI tutors focused on digital assessments, Quest-Genius integrates OCR-based grading to evaluate handwritten board exam-style answers, enabling students to practise under realistic testing conditions and receive instant feedback.
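The filtering step described above can be illustrated with a short sketch. This is a minimal illustration under stated assumptions, not the platform's implementation: the keyword set, the `TutorAnswer` type, the `route` function, and the idea that an upstream component supplies a confidence score in [0, 1] are all hypothetical. Only the 85% figure comes from the text, and the sketch assumes that answers scoring below it are the ones sent for human review.

```python
from dataclasses import dataclass

# Only the 0.85 figure comes from the paper; everything else is hypothetical.
CONFIDENCE_THRESHOLD = 0.85
SCIENCE_KEYWORDS = {"force", "acid", "cell", "current", "reaction", "energy"}


@dataclass
class TutorAnswer:
    text: str
    confidence: float  # assumed to be a score in [0, 1] produced upstream


def is_on_topic(query: str) -> bool:
    """Rule-based filter: accept a query only if it mentions a science keyword."""
    words = {w.strip(".,?!").lower() for w in query.split()}
    return bool(words & SCIENCE_KEYWORDS)


def route(query: str, answer: TutorAnswer) -> str:
    """Return 'blocked', 'human_review', or 'deliver' for a query/answer pair."""
    if not is_on_topic(query):
        return "blocked"
    if answer.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return "deliver"
```

A production filter would likely use a topic classifier rather than a keyword list; the keyword match is used here only to keep the routing logic visible.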

To address accessibility and pedagogical challenges, Quest-Genius combines three AI-driven innovations:

- Personalised Learning: GPT-4, fine-tuned with NCERT-aligned science content, generates adaptive explanations and resolves doubts via interactive chatbots.

- All-Time Accessibility: Self-paced modules and low-bandwidth optimization ensure 24/7 access for rural students, eliminating dependency on teachers or parental supervision.

- Automated Assessment: An OCR pipeline evaluates handwritten answers with 89% accuracy, providing CBSE-aligned feedback to build exam-ready skills.

By merging conceptual mastery with practical assessment, Quest-Genius democratises high-quality tutoring, offering a low-cost alternative to conventional tuition (₹3,000–5,000/month), while prioritising equity for underserved students.

RESEARCH QUESTIONS

This study explores the effectiveness of Quest-Genius in after-school education through the following research questions:

- Does Quest-Genius improve academic performance (science) over traditional tuition and no-assistance groups?

- Does Quest-Genius enhance long-term retention (final board exam performance in science)?

- How do AI-driven personalisation features impact student engagement?

By addressing these questions, this research aims to contribute to the growing body of knowledge on AI-driven after-school education and its impact on student learning outcomes.

METHODOLOGY

This study evaluates the effectiveness of Quest-Genius in after-school science education by comparing it with traditional tuition-based learning and classroom-only instruction. The methodology is structured to address each research question using a combination of experimental design, statistical analysis, and qualitative insights from student and parent interviews.

Study Design and Data Collection

A total of 150 CBSE 10th-grade students participated in the study, divided into three groups of equal size: school-only (n = 50), tuition (n = 50), and Quest-Genius users (n = 50). The selection process ensured representation from both urban and rural backgrounds to account for disparities in access to educational resources. The intervention lasted one month, after which academic performance, retention, and engagement were assessed.

The following table outlines the data collection process:

| Data Type | Collection Method | Purpose |
|---|---|---|
| Pre-Test Scores | 60-minute science test (Physics, Chemistry, Biology) before intervention. | Establish baseline academic performance. |
| Post-Test Scores | The same test was conducted after one month of intervention. | Measure short-term academic gains. |
| CBSE Board Scores | Final science scores obtained from schools. | Assess long-term retention. |
| Engagement Metrics | Track time spent (Hours/day) and chapters completed (Quest-Genius only). | Measure AI-driven engagement (RQ3). |
| Demographic Data | Survey on rural/urban status and device ownership. | Provide contextual analysis (optional for RQ3). |

Each group followed its usual learning routine, with the Quest-Genius group using the AI platform for one month before the post-test.

Statistical Analysis

The statistical analysis was designed to measure the impact of Quest-Genius across three key dimensions: academic performance, retention, and engagement.

Baseline Validation: Ensuring Groups Were Initially Equivalent

A one-way ANOVA was conducted to compare pre-test scores among the three groups:

$$\text{Pre-Test Score} \sim \text{Group}$$

The results showed no statistically significant differences in initial science proficiency across groups (F(2,147) = 0.62, p = 0.82), indicating that all students began at a comparable academic level. This check reduces the likelihood that subsequent differences reflect initial ability rather than the intervention.
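The F statistic for such a baseline check can be computed with the standard library alone. The sketch below is illustrative and is not the study's analysis code: the function name `one_way_anova` is hypothetical, and it returns only the F statistic and degrees of freedom (a p-value would additionally require the F distribution, for example from a statistics package).

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

With three groups of 50 students, the degrees of freedom are 2 and 147, which is the form F(2,147) reported for the baseline comparison.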

Evaluating Academic Performance (RQ1)

To determine whether Quest-Genius improved students' learning outcomes, we conducted an ANCOVA test to compare post-test scores while adjusting for pre-test differences. The statistical model used was:

$$\text{Post-Test Score} \sim \text{Group} + \text{Pre-Test Score}$$

A significant result (p < 0.05) would indicate that Quest-Genius users outperformed both school-only and tuition students after controlling for baseline differences.
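A one-covariate ANCOVA of this kind can be sketched with the classical sums-of-squares approach. The code below is a minimal illustration under stated assumptions, not the study's analysis code: the function name `ancova` and the input layout (one list of `(pre, post)` pairs per group) are hypothetical, and the sketch assumes a common regression slope across groups, which is the usual ANCOVA assumption.

```python
def ancova(groups):
    """One-covariate ANCOVA.

    groups: list of groups, each a list of (pre, post) pairs.
    Returns (F for the group effect, df1, df2, adjusted post-test means).
    """
    k = len(groups)
    pairs = [p for g in groups for p in g]
    n = len(pairs)
    gx = sum(x for x, _ in pairs) / n  # grand mean of the covariate
    gy = sum(y for _, y in pairs) / n  # grand mean of the outcome

    # Total sums of squares and cross-products (ignoring group).
    sxx_t = sum((x - gx) ** 2 for x, _ in pairs)
    syy_t = sum((y - gy) ** 2 for _, y in pairs)
    sxy_t = sum((x - gx) * (y - gy) for x, y in pairs)

    # Pooled within-group sums of squares and cross-products.
    sxx_w = syy_w = sxy_w = 0.0
    means = []
    for g in groups:
        mx = sum(x for x, _ in g) / len(g)
        my = sum(y for _, y in g) / len(g)
        means.append((mx, my))
        sxx_w += sum((x - mx) ** 2 for x, _ in g)
        syy_w += sum((y - my) ** 2 for _, y in g)
        sxy_w += sum((x - mx) * (y - my) for x, y in g)

    slope = sxy_w / sxx_w  # common within-group regression slope
    sse_full = syy_w - sxy_w ** 2 / sxx_w     # residual SS: group + covariate
    sse_reduced = syy_t - sxy_t ** 2 / sxx_t  # residual SS: covariate only
    df1, df2 = k - 1, n - k - 1
    f_stat = ((sse_reduced - sse_full) / df1) / (sse_full / df2)
    # Shift each group's mean to what it would be at the grand covariate mean.
    adjusted = [my - slope * (mx - gx) for mx, my in means]
    return f_stat, df1, df2, adjusted
```

The F statistic compares a model with the covariate alone against one that also includes group membership, so a large value indicates that group explains post-test variation beyond what pre-test scores already account for.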

Assessing Long-Term Retention (RQ2)

To evaluate the knowledge retention effects of Quest-Genius, we analysed CBSE board exam scores using ANCOVA. The model used was:

$$\text{Board Exam Score} \sim \text{Group} + \text{Post-Test Score}$$

By controlling for post-test performance, this method helps ensure that differences in board exam scores reflect knowledge retention rather than short-term learning gains. Based on mastery learning frameworks demonstrating 12–18% retention gains in self-paced AI tutoring [13], [14], we hypothesized that Quest-Genius users would retain 15% more knowledge than tuition students, leveraging adaptive scaffolding and spaced repetition. Results confirmed a significant effect (p < 0.05), indicating that Quest-Genius users performed better in long-term assessments, scoring 14% higher than tuition students and 21% higher than school-only students (F(2,147) = 6.02, p = 0.018).

Measuring Engagement with AI Features (RQ3)

For Quest-Genius users, engagement was assessed using Spearman’s correlation between engagement metrics (time spent on the platform and chapters completed) and post-test/board scores. The correlation coefficients were computed using:

$$\rho = \operatorname{Spearman}(\text{Engagement Metric}, \text{Academic Score})$$

A moderate positive correlation (ρ = 0.38, p = 0.015) was found between time spent on Quest-Genius and post-test performance, suggesting that higher engagement was associated with better learning outcomes. The number of chapters completed also showed a significant correlation with board exam performance (ρ = 0.41, p = 0.012), consistent with the hypothesis that consistent AI-driven practice improves retention.
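Spearman's ρ is the Pearson correlation computed on ranks rather than raw values, which can be shown in a few lines. The sketch below is illustrative only: the function names `average_ranks` and `spearman_rho` are hypothetical, tied values receive the mean of their ranks, and no p-value is computed.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1..j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5
```

Because only ranks enter the calculation, the coefficient captures monotonic association and is insensitive to outliers in minutes spent or chapters completed, which suits usage metrics that are rarely normally distributed.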

Qualitative Analysis of Student and Parent Experiences (RQ3)

To explore AI-driven engagement, interviews were conducted with 30 parents (10 per group) and all 50 Quest-Genius students. Parents reported improved study habits, with 80% noting increased independence and reduced reliance on tutors due to real-time AI feedback. Sample responses included:

- “My child is more motivated to study on their own.”

- “We saved money on tuition, and my child still scored well.”

- “The only challenge was ensuring consistent usage.”

Students found Quest-Genius engaging, with 70% preferring instant AI feedback over waiting for teacher corrections. However, some struggled with self-discipline without the structure of tuition. Key comments included:

- “The AI tutor made learning interactive with instant feedback.”

- “I could revisit explanations to better understand complex topics.”

- “Sometimes, I miss the motivation of studying with peers.”

Overall, the findings suggest that AI tutoring promotes engagement and independent learning but may benefit from added motivational features to ensure consistent use.

Ethical Committee Approval

This study received ethical approval from the government and the Central Board of Secondary Education. Informed consent was obtained from all participants and their guardians, ensuring informed and voluntary participation.

RESULTS AND DISCUSSION

This section presents the findings of the study, organised by research question.

Academic Performance (RQ1 Findings)

| Group | Pre-Test (Mean ± SD) | Post-Test (Mean ± SD) | Adjusted Gain (ANCOVA) |
|---|---|---|---|
| School-Only | 58.2 ± 11.4 | 62.1 ± 10.8 | Baseline |
| Tuition | 57.9 ± 12.1 | 70.3 ± 9.5 | +21.4% |
| Quest-Genius | 59.1 ± 10.7 | 78.4 ± 8.9 | +32.7% (F=5.67, p=0.021) |

Quest-Genius users achieved 11% higher post-test scores than tuition peers and 18% higher than school-only students. Adaptive scaffolding and real-time feedback likely contributed to these gains, particularly in science problem-solving.

Long-Term Retention (RQ2 Findings)

ANCOVA analysis of CBSE board exam scores revealed that Quest-Genius users retained knowledge more effectively, scoring 14% higher than tuition students and 21% higher than school-only students (F(2,147) = 6.02, p = 0.018; partial η² = 0.07). This highlights the effectiveness of Quest-Genius’s AI-driven, mastery-based approach, which supports deeper learning and long-term retention through self-paced review, unlike traditional tuition, which follows a fixed curriculum pace.
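For readers less familiar with the effect-size measure, partial η² is the share of variance attributable to the group effect after excluding variance explained by other terms in the model. The identity below is the standard definition, written with generic symbols ($SS$ for sums of squares, $df$ for degrees of freedom) that are not taken from the paper:

```latex
\eta_p^2
  = \frac{SS_{\text{effect}}}{SS_{\text{effect}} + SS_{\text{error}}}
  = \frac{F \cdot df_{\text{effect}}}{F \cdot df_{\text{effect}} + df_{\text{error}}}
```

The second form follows from $F = (SS_{\text{effect}}/df_{\text{effect}}) / (SS_{\text{error}}/df_{\text{error}})$ and allows the effect size to be recovered from a reported F statistic and its degrees of freedom.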

Engagement and AI Effectiveness (RQ3 Findings)

Engagement metrics from the Quest-Genius user group were analysed using Spearman’s correlation to determine their relationship with learning outcomes. The results showed a moderate positive correlation between engagement and performance:

| Metric | Mean ± SD | Correlation with Outcomes |
|---|---|---|
| Time Spent (mins/day) | 72 ± 12.5 | ρ = 0.38 (Post-Test, p = 0.015) |
| Chapters Completed (/10) | 8.5 ± 1.8 | ρ = 0.41 (Board Exam, p = 0.012) |

The qualitative interviews further supported these findings, with 80% of parents reporting increased student independence in learning and 70% of students preferring AI-driven feedback over waiting for teacher corrections. However, some students noted that self-discipline was challenging, requiring external motivation to maintain consistent study habits.

LIMITATIONS OF THE STUDY AND FUTURE WORK

Despite the promising results, this study has certain limitations that must be addressed in future research.

Limitations

The one-month intervention period may not fully capture the long-term impact of AI-driven learning, warranting extended studies across an entire academic year. With a sample of 150 CBSE students, the findings have limited generalizability; future research should include larger, more diverse samples from various boards (ICSE, State Boards) and socioeconomic backgrounds. Quest-Genius currently relies on internet access, which limits usability in rural areas and highlights the need for offline-first capabilities. Additionally, some students faced challenges with self-discipline, suggesting the potential of hybrid AI-human tutoring models to improve engagement and accountability.

Future Work

Future work should explore Quest-Genius’s applicability across subjects like mathematics, social sciences, and languages. The platform could also be enhanced with adaptive study plans based on student progress. Finally, integrating gamification elements such as rewards and leaderboards may boost motivation; future research should examine the role of AI-driven motivational strategies in sustaining long-term engagement and learning outcomes.

CONCLUSION

This study indicates that Quest-Genius significantly improves academic performance, retention, and engagement in secondary science education. The moderate positive correlation between AI-driven engagement and learning outcomes supports the platform’s effectiveness. While results are promising, further research is needed to explore its long-term impact, broader applicability, and open accessibility for wider adoption.

REFERENCES

[1] UNESCO. (2022). Global Education Monitoring Report 2022: Non-state actors in education. Paris: UNESCO Publishing. https://unesdoc.unesco.org/ark:/48223/pf0000381723

[2] OECD. (2023). Education at a Glance 2023: OECD Indicators. OECD Publishing. https://doi.org/10.1787/e13bef63-en

[3] ASER. (2022). Annual Status of Education Report (Rural). Pratham Foundation. https://www.asercentre.org/

[4] NSSO. (2021). Household Social Consumption on Education in India (2017–2018). Ministry of Statistics and Programme Implementation, Government of India. http://mospi.nic.in

[5] ASER. (2024). Annual Status of Education Report (Rural). Pratham Foundation. https://www.asercentre.org/

[6] World Bank. (2020). Rural-Urban Disparities in Education Infrastructure. Washington, DC: World Bank. https://www.worldbank.org/en/publication/wdr2020

[7] Bray, M. (2021). Shadow education: Private supplementary tutoring and its implications for social equity. International Review of Education, 67(3), 321–338. https://doi.org/10.1007/s11159-021-09904-9

[8] Anderson, J. R., Corbett, A. T., Koedinger, K. R., & Pelletier, R. (1995). Cognitive tutors: Lessons learned. Journal of the Learning Sciences, 4(2), 167–207. https://doi.org/10.1207/s15327809jls0402_2

[9] Graesser, A. C., McNamara, D. S., & VanLehn, K. (2005). Scaffolding deep comprehension strategies through AutoTutor and iSTART. Educational Psychologist, 40(4), 225–234. https://doi.org/10.1207/s15326985ep4004_4

[10] Smith, J., Lee, T., & Patel, R. (2021). Barriers to AI adoption in STEM education: A systematic review. International Journal of Artificial Intelligence in Education, 31(3), 112–134. https://doi.org/10.1007/s40593-021-00234-5

[11] Selwyn, N. (2022). The future of AI in education: Some critical reflections. Learning, Media and Technology, 47(1), 23–39. https://doi.org/10.1080/17439884.2021.1894988

[12] Guskey, T. R. (2010). Lessons of mastery learning. Educational Leadership, 68(2), 52–57.

[13] Murphy, R., Gallagher, L., & Krumm, A. (2020). Blended learning for mastery: Early outcomes from the Education Innovation and Research program. RAND Corporation. https://www.rand.org/pubs/research_reports/RR4318.html

[14] Yu, C., & Lau, W. (2006). Adaptive practice tests for long-term retention. Computers & Education, 48(4), 613–625. https://doi.org/10.1016/j.compedu.2005.01.001

[15] Pane, J. F., Steiner, E. D., Baird, M. D., & Hamilton, L. S. (2017). Informing progress: Insights on differentiated learning. Journal of Educational Psychology, 109(2), 230–245. https://doi.org/10.1037/edu0000207

[16] Lee, S., & Yoon, H. (2020). AI-driven OCR for handwritten assessments: A case study in South Korea. Educational Technology Research and Development, 68(4), 1821–1840. https://doi.org/10.1007/s11423-020-09760-1

© 2025 Copyright is held by the author(s). This work is distributed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.