ABSTRACT

Although massive open online courses (MOOCs) have broadened access to education worldwide, they often lack personalized support due to large enrollments and limited instructor capacity. Chatbots present an appealing solution by providing timely, on-demand assistance. However, few empirical studies have examined how different chatbot architectures, specifically intent-based versus large language model (LLM) retrieval-augmented generation (RAG) approaches, affect learner navigation patterns. To address this gap, we analyzed clickstream data from two journalist professional development MOOCs, each embedding a different type of chatbot. The first course, an election reporting MOOC, incorporated an intent-based chatbot; the second, a digital content creation MOOC, employed an LLM-RAG chatbot. We analyzed learners’ clickstream data in two ways: by measuring each event node’s degree centrality and by generating directed graphs to compare how learners moved through each course. Results showed that, while both chatbots ranked relatively high in node centrality, neither dominated learners’ navigation pathways. Certificate-related pages and announcements frequently ranked among the most central nodes in both courses, and quizzes were the main course-content entry points to the chatbot. The LLM-RAG chatbot offered the potential for deeper personalization, yet its usage rates did not dramatically surpass those of the simpler intent-based chatbot. Our findings suggest that implementation strategies, such as integrating chatbots into core assessments and actively promoting their use, may play a greater role in shaping learner navigation than technological sophistication alone. We conclude that course designers aiming to optimize learner engagement with chatbots should focus on strategic deployment and alignment with course objectives rather than relying solely on advanced capabilities.

Keywords

INTRODUCTION

Massive open online courses (MOOCs) have rapidly expanded access to education for learners worldwide, yet they often lack the individualized support found in traditional classroom settings [3]. Chatbots have emerged as a promising intervention to address this challenge by providing timely, context-aware assistance to learners navigating large-scale online environments [1]. Despite growing interest in chatbot technologies, there remains a dearth of empirical research comparing how different chatbot architectures influence learners’ behaviors in MOOCs [2]. To bridge this gap, we investigate whether an advanced large language model (LLM) chatbot with retrieval-augmented generation (RAG) meaningfully alters learners’ navigation patterns compared with a more traditional intent-based chatbot. Specifically, we analyze node centrality and transition flows in clickstream data gathered from two journalist professional development MOOCs, each employing a distinct type of chatbot.

Our guiding research question is: How does the type of chatbot (LLM-RAG vs. intent-based) embedded in massive open online courses influence learners’ course navigation patterns?

By examining differences in how learners move through course content, this study seeks to shed light on whether chatbot sophistication alone leads to greater engagement or whether implementation strategies are equally, if not more, critical in shaping learner navigation.

METHOD

Study Context and Participants

This study examined how learners in two journalist professional development MOOCs navigated course content when supported by either an intent-based chatbot [4] or an LLM-RAG chatbot. Both chatbots were labeled “learning assistant” and replaced traditional FAQ pages, with the shared aim of providing immediate, contextualized support. The LLM-RAG chatbot, built on Llama 3.2 [6] with hybrid RAG capability, was introduced to potentially offer deeper personalization, in response to prior research calling for more context-aware guidance [7]. The first course, on election reporting and delivered in 2022, employed the intent-based chatbot: 3,358 learners enrolled, of whom 2,399 were active participants, and 886 of these active users interacted with the chatbot. The second course, on digital content creation and delivered in 2024, used the LLM-RAG chatbot: 4,918 learners enrolled, of whom 3,325 were active participants, and 898 of these active users interacted with the chatbot.
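The enrollment counts above imply different chatbot uptake rates in the two courses. The short calculation below derives them from the reported figures only; it is descriptive arithmetic, not a statistical comparison, and the dictionary layout and the `uptake` helper are illustrative names rather than part of the study’s code.

```python
# Counts reported for each course (enrolled, active participants, active chatbot users).
COURSES = {
    "intent-based (2022)": {"enrolled": 3358, "active": 2399, "chatbot_users": 886},
    "LLM-RAG (2024)": {"enrolled": 4918, "active": 3325, "chatbot_users": 898},
}


def uptake(course):
    """Return (share of enrollees who were active, share of active learners who used the chatbot)."""
    active_rate = course["active"] / course["enrolled"]
    chatbot_rate = course["chatbot_users"] / course["active"]
    return active_rate, chatbot_rate


for name, counts in COURSES.items():
    active_rate, chatbot_rate = uptake(counts)
    print(f"{name}: {active_rate:.1%} active; {chatbot_rate:.1%} of active learners used the chatbot")
```

This prints about 71.4% active and 36.9% chatbot uptake for the intent-based course, and about 67.6% active and 27.0% chatbot uptake for the LLM-RAG course.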

Because the two MOOCs differed in both topic (election reporting vs. digital content creation) and delivery year (2022 vs. 2024), differences in learner navigation may reflect course-specific factors as well as chatbot effects.

Data Collection and Analysis

To explore whether the two chatbot types shaped learners’ navigation behaviors differently, we collected clickstream data from the learning management system (Moodle) that recorded user identifiers, timestamps, and event contexts (e.g., module videos, quizzes, and chatbot interactions). We excluded users who showed no meaningful engagement, yielding the active subsets in each course. Each user’s sequence of events was transformed into a directed graph in which nodes represented course components (“event contexts”) and edges indicated transitions between them. Degree centrality, computed from both incoming and outgoing edges, quantified how broadly each component was connected to other components and thus how prominently it functioned as a pivot in learners’ navigation. In constructing and visualizing the directed graphs, we employed Word2Vec embeddings [5] to capture localized patterns in learners’ event sequences and used Python libraries (e.g., NetworkX, pyvis). This approach allowed us to compare the navigational prominence of the chatbot node with that of other course components and to see whether the LLM-RAG chatbot prompted distinct movement through the course. All relevant Python code, graphs, and event context descriptions are available in this directory.
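The preprocessing described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors’ code: the toy rows are invented, `MIN_EVENTS` is a hypothetical stand-in for the “meaningful engagement” criterion, and the window size is arbitrary. The sketch orders each learner’s events by timestamp and then forms the (target, context) pairs that the skip-gram variant of Word2Vec learns from, which is one way event sequences can yield “localized patterns”. In practice, a library such as gensim (its `Word2Vec` class, with `sg=1` for skip-gram) would train the embeddings from such sequences.

```python
from collections import defaultdict

# Hypothetical clickstream rows: (user_id, timestamp, event_context).
# Real Moodle logs carry more fields; only these three are used here.
ROWS = [
    ("u1", 3, "quiz1"), ("u1", 1, "announcements"), ("u1", 2, "module1_video1_slides"),
    ("u1", 4, "chatbot"), ("u2", 1, "certificate_info"), ("u2", 2, "quiz2"),
    ("u2", 3, "chatbot"), ("u3", 1, "announcements"),
]

MIN_EVENTS = 2  # hypothetical cut-off standing in for "meaningful engagement"


def event_sequences(rows, min_events=MIN_EVENTS):
    """Group rows by user, order each user's events by timestamp, drop low-activity users."""
    by_user = defaultdict(list)
    for user, ts, context in rows:
        by_user[user].append((ts, context))
    return {
        user: [context for _, context in sorted(events)]
        for user, events in by_user.items()
        if len(events) >= min_events
    }


def skipgram_pairs(sequence, window=1):
    """Return (target, context) pairs within +/- `window` positions of each event."""
    pairs = []
    for i, target in enumerate(sequence):
        start, stop = max(0, i - window), min(len(sequence), i + window + 1)
        pairs.extend((target, sequence[j]) for j in range(start, stop) if j != i)
    return pairs


seqs = event_sequences(ROWS)
print(seqs["u1"])
print(skipgram_pairs(seqs["u2"]))
```

Here learner `u3`, with a single event, is dropped, and `u1`’s out-of-order rows are re-sorted into announcements, module1_video1_slides, quiz1, chatbot.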

RESULTS

Node Centrality

Table 1 shows the top 20 nodes ranked by degree centrality (from highest to lowest) in each course. In the intent-based chatbot embedded course, certificate_info, announcements, and chatbot occupy the top three positions, while in the LLM-RAG chatbot embedded course, certificate_info, baseline survey, and optional_resources head the list.

Despite the advanced capabilities of the LLM-RAG chatbot, the chatbot dominates navigation in neither course: certificate_info outranks it in both courses, as do announcements in the intent-based chatbot course and baseline survey and optional_resources in the LLM-RAG chatbot course. Nonetheless, the chatbot ranks relatively high in both lists (third and fourth, respectively), suggesting that it is a regular point of interaction. Assessment-related nodes (e.g., quiz2) also appear in each course’s top 20, suggesting that learners often move between evaluation components and other areas, including occasional chatbot usage. These patterns set the stage for a closer look at how learners transition among these prominent nodes, which we examine in the next section, Navigation Patterns.

Table 1. Top 20 nodes ranked by degree centrality in each course.

| Intent-based chatbot course | Degree centrality | LLM-RAG chatbot course | Degree centrality |
|---|---|---|---|
| certificate_info | 1.91 | certificate_info | 1.52 |
| announcements | 1.88 | baseline survey* | 1.51 |
| chatbot | 1.78 | optional_resources | 1.41 |
| module1_video1_slides | 1.71 | chatbot | 1.36 |
| help_lounge | 1.63 | announcements | 1.35 |
| help_instructor | 1.62 | midevaluation | 1.20 |
| module2_video1_slides | 1.58 | intromaterial6 | 1.18 |
| help_platform | 1.52 | intromaterial7 | 1.18 |
| syllabus | 1.49 | module1_video4 | 1.18 |
| module3_video1_slides | 1.45 | help_instructor | 1.16 |
| module2_discussion2 | 1.37 | module2_video4 | 1.16 |
| midevaluation | 1.35 | quiz2 | 1.16 |
| module1_discussion2 | 1.32 | intromaterial4 | 1.13 |
| quiz2 | 1.29 | help_platform | 1.11 |
| module2_optional3 | 1.26 | help_lounge | 1.10 |
| intromodule_instuctormessage | 1.26 | certificate_print | 1.08 |
| module5_discussion1 | 1.25 | quiz3 | 1.08 |
| module1_discussion1 | 1.24 | quiz1 | 1.05 |
| module1_video4_slides | 1.23 | unenroll | 1.02 |
| instructor | 1.22 | intromaterial2 | 1.01 |
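Several scores in Table 1 exceed 1. That is expected if, as NetworkX’s `degree_centrality` does for a directed graph, each node’s in-degree plus out-degree is divided by n - 1 (n being the number of nodes), so scores range from 0 to 2 rather than 0 to 1. The plain-Python sketch below reproduces that normalization on toy sequences. The sequences are invented, and treating consecutive events as unweighted edges while dropping repeated clicks on the same node (NetworkX itself would count such self-loops) are assumptions, not confirmed details of the study’s procedure.

```python
# Toy per-learner event sequences (hypothetical; not the study's data).
SEQUENCES = [
    ["announcements", "quiz1", "announcements", "chatbot", "announcements"],
    ["quiz1", "chatbot"],
]


def build_digraph(sequences):
    """Return {node: set of successors}; each consecutive pair of events is a directed edge."""
    succ = {}
    for seq in sequences:
        for node in seq:
            succ.setdefault(node, set())  # register every node, including pure sinks
        for src, dst in zip(seq, seq[1:]):
            if src != dst:  # assumption: repeated clicks on one node are not edges
                succ[src].add(dst)
    return succ


def degree_centrality(succ):
    """(in-degree + out-degree) / (n - 1), mirroring networkx.degree_centrality on a DiGraph."""
    n = len(succ)
    if n <= 1:
        return {node: 1.0 for node in succ}  # NetworkX's convention for trivial graphs
    in_deg = {node: 0 for node in succ}
    for targets in succ.values():
        for dst in targets:
            in_deg[dst] += 1
    return {node: (len(succ[node]) + in_deg[node]) / (n - 1) for node in succ}


scores = degree_centrality(build_digraph(SEQUENCES))
for node, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{node}: {score:.2f}")
```

In this toy graph, announcements is linked in both directions to every other node and so reaches the maximum score of 2.0, while quiz1 and chatbot each score 1.5.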

Navigation Patterns

Overall, reliance on the chatbot for real-time help was not dramatically higher in the LLM-RAG chatbot setting, and many learners still navigated to quizzes, announcements, and certificate pages without interacting much with the chatbot. These findings underscore that while the LLM-RAG chatbot may provide more nuanced responses, how it is introduced and promoted within the course can be as influential on learner behavior as the underlying technology.

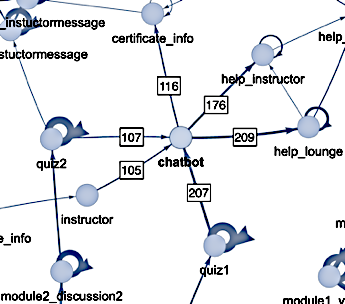

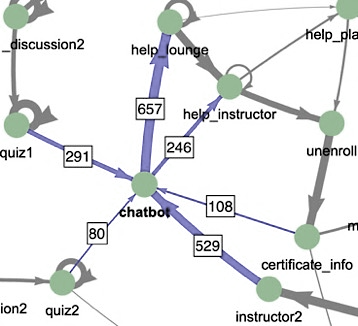

Figure 1 presents zoomed-in directed graph representations around the chatbot nodes, highlighting learner navigation patterns in both the intent-based and LLM-RAG chatbot courses. Consistent with the node centrality findings, the visual patterns indicate notable similarities in how learners navigated among course components. For instance, in both courses the only course content-related transitions to the chatbot node came from quiz1 and quiz2. This observation suggests that overall chatbot usage in both courses was modest, especially given the wide range of course materials available.

*Note: All directed graphs presented in the Results section are available as interactive network graphs in this directory. Numbers surrounding the chatbot node indicate the number of edges involving the chatbot node. After downloading the corresponding HTML files, viewers can explore the graphs interactively.
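A zoomed-in view like Figure 1 can be approximated by tallying the transitions that enter and leave the chatbot node. The sketch below is illustrative only: the toy sequences are invented, `node_neighborhood` is a hypothetical helper, and counting transitions between consecutive events (ignoring repeated clicks on the same node) is an assumption about how such edge counts might be derived, not the study’s documented procedure. The resulting counters could then serve as edge labels or widths in a visualization library such as pyvis.

```python
from collections import Counter

# Toy learner event sequences (hypothetical; not the study's data).
SEQUENCES = [
    ["announcements", "quiz1", "chatbot", "quiz1"],
    ["quiz2", "chatbot", "certificate_info"],
    ["quiz2", "chatbot", "chatbot", "quiz2"],
]


def node_neighborhood(sequences, node="chatbot"):
    """Count transitions into `node` (by predecessor) and out of it (by successor)."""
    incoming, outgoing = Counter(), Counter()
    for seq in sequences:
        for src, dst in zip(seq, seq[1:]):
            if src == dst:
                continue  # assumption: repeated clicks on one node are not transitions
            if dst == node:
                incoming[src] += 1
            elif src == node:
                outgoing[dst] += 1
    return incoming, outgoing


incoming, outgoing = node_neighborhood(SEQUENCES)
print("into chatbot:", incoming.most_common())
print("out of chatbot:", outgoing.most_common())
```

In this toy data, the only transitions into the chatbot come from quiz1 (once) and quiz2 (twice), while learners leave it for quiz1, quiz2, or certificate_info.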

DISCUSSION AND CONCLUSION

Summary of Findings

The results indicated that both the intent-based and LLM-RAG chatbots occupied notable positions in learners’ navigation, yet neither dominated course interactions overall. Node centrality showed that certificate information ranked above the chatbot node in both MOOCs, as did announcements in the intent-based chatbot course, while quizzes were the only course content from which learners transitioned directly to the chatbot. Although the LLM-RAG chatbot may have provided more advanced natural language responses, the data showed no substantial increase in learners’ reliance on it for real-time help.

Implementation over Capabilities

These findings suggest that a more sophisticated model alone does not necessarily translate into higher engagement. Rather, how a chatbot is introduced and promoted within a course appears to matter at least as much as its underlying technology. Even though the LLM-RAG approach has greater potential for nuanced conversation, its impact appeared modest, possibly because learners were not explicitly prompted or guided to use it. Course design elements such as explicit recommendations for when to consult the chatbot, direct links from quiz sections, or mandatory chatbot exercises could boost usage and better demonstrate the benefits of LLM-driven personalization.

Limitations and Future Work

Several constraints should be noted. First, this study compared different chatbots in two courses that varied in topic (election reporting vs. digital content creation) and delivery year (2022 vs. 2024). As a result, the observed navigation differences may reflect course-specific influences as well as the chatbot interventions. Second, clickstream data alone cannot confirm learners’ satisfaction, knowledge gains, or the quality of chatbot responses. Future studies could combine these behavioral metrics with quiz scores, completion rates, or qualitative feedback (e.g., surveys, interviews) to gain deeper insight into whether and how an LLM-RAG chatbot fosters better learning experiences. Additionally, A/B testing within the same course could help isolate which design strategies, such as mandatory chatbot tasks, truly enhance learner engagement with advanced chatbot features.

Conclusion

In assessing how chatbots shape learner navigation in MOOCs, this study highlights the critical role of implementation. While an LLM-RAG chatbot can offer richer dialogue capabilities [7], simply deploying a sophisticated chatbot does not guarantee increased usage. Course context, integration strategies, and clear guidance on when and how to use the chatbot remain pivotal factors. Ultimately, maximizing the potential of LLMs in online learning will require careful attention to both technological refinement and pedagogical design.

ACKNOWLEDGMENTS

We sincerely thank the Knight Center at The University of Texas at Austin for their ongoing support of our research endeavors. Additionally, this study was made possible through the generous support provided by Anne Spencer Daves College of Education, Health, and Human Sciences at Florida State University through its Research Startup Fund.

REFERENCES

[1] Han, S. and Liu, M. 2024. Equity at the forefront: A systematic research and development process of chatbot curriculum for massive open online course. Educational Technology & Society. 27, 4 (Oct. 2024). DOI: https://doi.org/10.30191/ETS.202410_27(4).SP08

[2] Han, S., Hamilton, X., Cai, Y., Shao, P. and Liu, M. 2023. Knowledge-based chatbots: A scale measuring students’ learning experiences in massive open online courses. Educational Technology Research and Development. 71, 6 (Aug. 2023). DOI: https://doi.org/10.1007/s11423-023-10280-7

[3] Qaffas, A. A., Kaabi, K., Shadiev, R. and Essalmi, F. 2020. Towards an optimal personalization strategy in MOOCs. Smart Learning Environments. 7, 1 (Apr. 2020), 14. DOI: https://doi.org/10.1186/s40561-020-0117-y

[4] Dialogflow ES documentation: https://cloud.google.com/dialogflow/es/docs. Accessed: 2025-03-15.

[5] Getzen, E., Ruan, Y., Ungar, L. and Long, Q. 2024. Mining for health: A comparison of word embedding methods for analysis of EHRs data. Statistics in Precision Health: Theory, Methods and Applications. Y. Zhao and D. G. Chen, eds. Springer International Publishing. 313–338. DOI: https://doi.org/10.1007/978-3-031-50690-1_13

[6] Llama: https://www.llama.com/. Accessed: 2025-03-17.

[7] Yuan, Y., Liu, C., Yuan, J., Sun, G., Li, S. and Zhang, M. 2024. A hybrid RAG system with comprehensive enhancement on complex reasoning. arXiv. DOI: https://doi.org/10.48550/arXiv.2408.05141

© 2025 Copyright is held by the author(s). This work is distributed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.