ABSTRACT

This study explores the use of webcam-based eye tracking during a learning task to predict and better understand neurodivergence with the aim of improving personalized learning to support diverse learning needs. Using WebGazer, a webcam-based eye tracking technology, we collected gaze data from 354 participants as they engaged in educational online reading. We extracted both gaze features and text characteristics, as well as interactions between gaze and text. Results show that the supervised machine-learned model predicting whether a learner is neurodivergent or not achieved an AUROC of 0.60 and a Kappa of 0.14, indicating slight agreement beyond chance. For specific neurodivergent diagnoses, AUROC values ranged from 0.53 to 0.61, demonstrating moderate predictive performance. Additionally, SHAP analysis was used to examine the influence of features selected through forward feature selection, revealing both commonalities and differences between predicting broad neurodivergence and specific diagnoses. These findings should not be used for diagnostic purposes or to single out any individual but instead underscore the potential for personalized modeling to better support diverse learning needs.

INTRODUCTION

Understanding how learners process information is essential for improving educational experiences, particularly for neurodivergent learners who often face unique challenges in attention and cognitive processing [8, 42]. Neurodivergent individuals, including those with Attention Deficit Hyperactivity Disorder (ADHD), Autism Spectrum Disorder (ASD), Dyslexia, and other learning disabilities, represent approximately 15-20% of the general population [3] but frequently experience disparities in educational outcomes due to limited tailored support [7, 44, 56]. Identifying differences between how neurodivergent and neurotypical learners interact with learning material can lead to more personalized educational interventions that support diverse learning needs [50, 68]. In this study, we leverage webcam-based eye tracking during a self-paced reading task, collecting multiple data streams in real time to better understand learners’ individual differences, laying the groundwork for more personalized support.

Eye tracking has long been a method for understanding learners. Research-grade eye-tracking has been widely used in cognitive and educational research to study reading behaviors and attention shifts [23, 62, 71]. However, these research-grade technologies are expensive and require a tightly controlled data collection environment, limiting their scalability in real-world educational settings. Recent advancements in webcam-based eye tracking provide a promising alternative, allowing for real-time gaze tracking using standard webcams [22, 23, 69, 71]. This technology enables scalable, cost-effective assessments of cognitive processing in diverse environments, including online learning platforms.

Few studies have examined how gaze behaviors differ between neurotypical and neurodivergent learners or how such differences could be leveraged to analyze specific neurodivergent diagnoses [71]. Given the growing emphasis on personalized learning, utilizing machine learning models that can distinguish between neurodivergent and neurotypical learners—as well as predict individual differences within that group—could significantly enhance targeted educational interventions.

This study explores the use of webcam-based eye tracking to predict whether a learner is neurodivergent or neurotypical and to further classify specific neurodivergent diagnoses. By leveraging gaze behavior, text characteristics, and interactions between these features, we trained machine learning models to differentiate between learner groups. Through SHapley Additive exPlanations (SHAP) [40], we examined the influence of key features selected via forward feature selection [29, 30], identifying both commonalities and differences in predictive patterns for classifying neurodivergent learners and identifying individual differences. It should be noted that this work is not intended to be a diagnostic tool in any way, but instead contributes to the growing field of personalized learning, supporting efforts to create more inclusive and adaptive learning environments. The goal is to support learners by informing educational strategies that accommodate diverse learning needs. This approach could inform the development of adaptive learning systems that personalize content delivery, adjust instructional methods, and increase engagement based on individual neurodivergent profiles. By employing the findings of this study, educators can develop more tailored interventions that create equitable learning opportunities for all learners.

Literature Review

Eye-tracking technology has gained significant attention in education and cognitive research, providing insights into learning behaviors, attention shifts, and comprehension. By analyzing gaze patterns, researchers can assess how learners allocate attention, which aspects of learning materials they engage with most, and where cognitive difficulties arise. These insights enable educators to refine instructional methods, improve student engagement, and develop personalized learning frameworks tailored to individual learning needs [55, 64, 66]. Machine learning algorithms have played a significant role in advancing the accuracy, calibration, and data quality of eye-tracking technology. Researchers have utilized classifiers and regression models to detect and predict gaze error patterns under various conditions [32]. Additionally, machine learning models such as K-nearest neighbor, naïve Bayes, decision trees, and random forests have been applied to analyze eye movement data, classify visual attention patterns, and enhance human-computer interaction [58].

Traditional research-grade eye-tracking technologies are expensive and typically require controlled laboratory environments, limiting their accessibility and applicability in broader educational settings. To overcome these constraints, recent advancements in webcam-based eye tracking have introduced cost-effective and scalable alternatives that enable gaze monitoring in real-world learning environments [10, 26]. Unlike traditional eye tracking devices, webcam-based technology utilizes standard hardware alongside mathematical models, making it accessible for remote learning and online educational research. This approach supports ecological validity by allowing data collection in naturalistic settings such as participants’ homes, rather than controlled laboratory environments.

Studies have demonstrated the feasibility of webcam-based eye tracking for various educational applications. For example, it has been used to measure visual expertise in medical education, where gaze data helps assess how learners interpret diagnostic images and perform clinical assessments [9]. Additionally, webcam-based eye tracking has been employed to detect mind wandering and comprehension difficulties during online reading tasks, providing insights into how students remain engaged or disengage from educational materials [23]. Researchers have also used webcam eye tracking to analyze student behaviors in digital learning environments, helping to identify factors that contribute to effective online learning experiences [10]. By tracking gaze patterns, including fixation duration and pupil dilation, this technology provides valuable insights into cognitive load, engagement levels, and information processing strategies. Researchers have explored its role in detecting attentional shifts, monitoring stress and relaxation levels, and assessing self-regulated learning behaviors [10, 36, 37, 73]. These factors can then, in turn, be used to optimize digital learning platforms by enabling adaptive interventions that cater to individual learners' needs [10, 17].

Gaze estimation accuracy and stability can be affected by challenges including eye variations, occlusion and varying light conditions. To address these challenges, researchers have employed machine learning-based approaches to improve the accuracy of webcam-based eye-tracking data [14, 26, 54]. Advancements such as deep learning models, including DeepLabCut and neural networks, have improved gaze estimation accuracy, achieving a median error of about one degree of visual angle [75]. These have significantly enhanced the usability of webcam eye tracking systems, making them a promising tool for cognitive assessments, learning analytics, and adaptive educational technologies.

Beyond educational applications, eye tracking technology has been increasingly explored for predicting neurodivergence. Studies suggest that gaze behavior and eye movement patterns can provide valuable markers for identifying individual differences such as those experienced by neurodivergent populations. Recent studies highlight the potential of webcam-based eye tracking for assessing cognitive challenges in neurodivergent populations. For instance, webcam-based eye tracking offers a non-invasive and scalable approach for assessing reading comprehension difficulties, task-unrelated thoughts (TUT), and executive functioning challenges commonly experienced by neurodivergent learners [71].

In clinical settings, eye-tracking data has been employed for neuropsychiatric assessments, predicting psychomotor test performance [1]. Researchers have also used scanpath analysis to differentiate between autistic and neurotypical individuals, showing that gaze patterns can serve as diagnostic markers for autism spectrum disorder (ASD) [65]. In educational environments, this technology has been applied to identify reading difficulties and thought processing patterns among neurodivergent learners, offering insights into how they interact with text and process information differently from neurotypical learners [71].

Eye-tracking research has also focused on its role in early autism detection, particularly in identifying distinct gaze behaviors associated with social cue processing and eye contact difficulties [31, 63]. Studies show that individuals with ASD exhibit different gaze patterns when engaging with social stimuli, such as faces and eye contact, compared to neurotypical individuals. Machine learning models trained on scanpath images and gaze patterns have successfully classified ASD diagnoses, achieving high classification accuracy [4, 13], effectively differentiating individuals with ASD from neurotypical individuals. This finding highlights the potential of gaze-based biomarkers as reliable indicators of ASD. These models, when implemented in real-world applications, could facilitate early detection, enabling timely intervention and personalized support.

Similar to ASD detection, eye-tracking technology has been used to predict dyslexia by analyzing reading patterns, fixation durations, and saccadic movements [28, 53]. Studies have shown that dyslexic readers exhibit distinct gaze behaviors, including longer fixation times and increased regressions (that is, backward movements in the text). Machine learning models trained on eye-tracking data have achieved high classification performance in distinguishing between dyslexic and non-dyslexic readers [51].

Compared to traditional diagnostic methods, such as MRI-based dyslexia screening [11, 19], eye-tracking approaches offer a non-invasive, cost-effective, and scalable solution. Early identification of dyslexia through eye movement analysis could facilitate timely interventions, allowing educators to provide specialized reading support and accommodations for learners with dyslexia [28].

In addition to learning disabilities, eye-tracking research has explored its application in predicting anxiety disorders, particularly Generalized Anxiety Disorder (GAD). Studies have found that eye movement patterns can reveal attentional biases, such as the avoidance of mild threat stimuli over time, a characteristic frequently observed in individuals with GAD [46].

Machine learning models trained on eye-tracking data combined with facial recognition technology have demonstrated high accuracy in predicting anxiety symptom severity [20, 25]. In educational settings, webcam-based eye tracking has been employed to detect mind wandering, providing opportunities for adaptive learning technologies that respond dynamically to students’ engagement levels and emotional states [23].

Current Study and Novelty

As eye-tracking technologies continue to evolve, they offer a scalable, accessible, and real-time approach for cognitive measurements in educational settings. By leveraging gaze behavior, fixation metrics, and machine learning models, researchers can develop personalized interventions and support diverse learning needs.

This study investigates how webcam-based eye tracking can be used to predict both broad neurodivergence and specific neurodivergent diagnoses in learners. Our study extends the application of gaze-based modeling to multiple neurodivergent conditions, including ADHD, dyslexia, and GAD, which are known to impact learning processes in different ways. This approach helps identify key gaze features that distinguish individual learner profiles. By comparing models trained on the entire population to those trained specifically on neurodivergent learners with specific diagnoses, the study evaluates the effectiveness of a generalized approach versus diagnosis-specific models.

Additionally, we employ forward feature selection and SHAP to determine the most influential gaze and cognitive features contributing to neurodivergence classification. This approach enhances the interpretability of machine learning models, allowing educators and researchers to gain an understanding of how these diagnoses are reflected in learner gaze behaviors. Such findings can inform the design of adaptive learning environments that accommodate the diverse cognitive needs of neurodivergent learners, leading to more inclusive and effective instructional strategies.

The goal of this study was to explore machine learning models in identifying neurodivergent learners and predicting specific neurodivergent diagnoses based on eye gaze and text features. We aimed to answer two key questions: (1) Can we predict whether a learner is neurodivergent or neurotypical? (2) Can specific neurodivergent diagnoses, including ADHD (Attention Deficit Hyperactivity Disorder) or ADD (Attention Deficit Disorder), Autism/Asperger’s/Autism Spectrum Disorder (ASD), Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia, other language/reading/math/non-verbal learning disorders, and Generalized Anxiety Disorder (GAD), be accurately predicted? Accurate prediction of both broad neurodivergence and specific diagnoses would show that our models capture the distinct gaze patterns and text characteristics that reflect cognitive processing differences. By examining which gaze and text features drive these predictions, we can understand how each learner group allocates attention and processes information, and where they encounter difficulties. Moreover, using multiple data streams to model learners can create the potential for understanding cognitive processing differences and inform targeted personalization.

METHOD

Participants

A total of 354 learners participated in an online study conducted through Prolific [48], a platform for online research. Participants self-reported whether they identified as neurodivergent or neurotypical, with 176 learners classified as neurodivergent and 178 as neurotypical (note this balance was artificially created through study recruitment methods in the Prolific platform). Table 1 shows the distribution of neurodivergent diagnoses.

| Diagnoses | Number of Participants |
|---|---|
| ADHD/ADD | 75 |
| Autism/Asperger’s/ASD | 67 |
| Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia | 14 |
| Other language/reading/math/non-verbal learning disorders | 13 |
| Generalized Anxiety Disorder | 90 |
| Other diagnoses | 38 |
| No response/never diagnosed | 34 |

Since the unspecified diagnoses and those who chose not to disclose their diagnoses could not be clearly identified, these categories were excluded from our analysis.

The gender distribution included 182 males, 150 females, 20 identifying as Other/Non-Binary, and 2 who preferred not to disclose. Participants also reported their racial and ethnic backgrounds, with 1 identifying as Black or African, 40 as Hispanic/Latina/Latino/Latinx, 19 as East Asian, 9 as Southeast Asian, 4 as South Asian, 3 as Other Asian, 5 as Native American, Alaskan Native, or First Nations, 4 as Middle Eastern or North African, 1 as Native Hawaiian or Pacific Islander, 251 identifying as White or Caucasian and 27 identifying with another race or ethnicity. Some individuals identified with multiple racial identities. Additionally, 2 participants preferred not to disclose their race. Participants' ages ranged from 18 to 84 years, with an average age of 37.

WebGazer: Webcam-Based Eye Tracking

WebGazer is a JavaScript-based, real-time eye-tracking library designed for seamless integration into web browsers with webcam access [49]. It utilizes various facial detection tools, including clmtrackr, js-objectdetect, and tracking.js [49], to identify key facial and eye regions within the webcam feed, enabling non-intrusive gaze tracking.

Once the eyes are detected, WebGazer locates the pupil by identifying its darker contrast relative to the iris. The eye region is then converted into a grayscale image patch and processed into a 120-dimensional feature vector, which is mapped to screen coordinates using ridge regression models. These models dynamically update as the user interacts with the screen, improving tracking accuracy over time. WebGazer’s sampling rate varies depending on browser performance and webcam specifications [12]. Operating entirely within the client’s browser, it only records x/y gaze coordinates without storing any video data, ensuring participant privacy and security. Prior research has demonstrated its ability to adapt to user interactions and provide reliable gaze approximation [49], making it a viable tool for large-scale, webcam-based cognitive research. Additionally, WebGazer has been applied to model attention in learners, particularly in predicting mind wandering and comprehension during learning tasks [23].

Study Design and Procedure

To ensure accurate gaze tracking, each participant underwent WebGazer calibration before starting the reading task. Participants were instructed to maintain a well-lit environment to optimize tracking accuracy. The study involved reading a 40-paragraph text discussing psychological mechanisms influencing consumer behavior, with each paragraph averaging 46 words in length. The reading task was self-paced, with learners spending an average of 11.55 seconds per paragraph. While reading, WebGazer continuously recorded participants’ eye movements.

Throughout the reading session, participants were intermittently presented with seven thought probes assessing their attentional state. Each probe consisted of four questions aimed at capturing the participant’s cognitive state at that moment. After completing the reading task, participants answered 10 multiple-choice comprehension questions designed to assess their understanding of the text. The questions required that the text be well comprehended, as many answers were not explicitly stated in the text, encouraging active engagement with the material.

This study design as described above was reviewed and approved by the Institutional Review Board (IRB #2022-102) at Landmark College. Participants provided informed consent prior to data collection and could remove themselves from the study at any time (including after data collection was completed). Participants were also informed of exactly what data was collected and its future uses.

Feature Engineering

For each learner, gaze data was mapped to corresponding paragraphs of text. To estimate fixations from gaze data in relation to the text, we applied the fixation approximation method proposed by Hutt et al. [22]. To contextualize the gaze data, we used natural language processing techniques to extract key linguistic features. Sentiment analysis was performed using TextBlob, which assigned sentiment scores ranging from -1 (negative) to 1 (positive). Textstat was used to compute syllable count and the Flesch Reading Ease score to assess readability. Additionally, the Natural Language Toolkit (NLTK) was used for part-of-speech tagging [6, 33, 59].
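
For reference, the Flesch Reading Ease (FRE) score is conventionally defined as below. This is the standard English-language formula; we assume, rather than confirm from the source, that it is the variant Textstat applied here. Higher scores indicate text that is easier to read.

```latex
\mathrm{FRE} = 206.835
  - 1.015 \cdot \frac{\text{total words}}{\text{total sentences}}
  - 84.6 \cdot \frac{\text{total syllables}}{\text{total words}}
```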

Both gaze and text-based features were calculated at the paragraph level. Gaze-related features captured onscreen and offscreen eye movements, while text-based features provided context on how different textual characteristics influence reading behavior. Eye-tracking data was leveraged to derive fixation features, including the number of fixations and fixation duration statistics (mean, standard deviation, and skew). Prior research has established links between these fixation features and attentional processes across various tasks [21, 52].
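
The fixation duration statistics named above can be illustrated with a minimal sketch. It assumes durations in milliseconds, population (not sample) variance, and the moment-based skewness coefficient; the function name and dictionary keys are hypothetical and not taken from the study's code.

```python
import math


def fixation_duration_stats(durations_ms):
    """Summarise the fixation durations (ms) observed for one paragraph.

    Assumptions (not from the paper): population variance and the
    moment-based skewness coefficient m3 / sd**3.
    """
    n = len(durations_ms)
    if n == 0:
        # No fixations detected: duration statistics are undefined (missing).
        return {"n_fixations": 0, "mean": None, "sd": None, "skew": None}

    mean = sum(durations_ms) / n
    variance = sum((d - mean) ** 2 for d in durations_ms) / n
    sd = math.sqrt(variance)

    if sd == 0:
        skew = None  # skew is undefined when every duration is identical
    else:
        third_moment = sum((d - mean) ** 3 for d in durations_ms) / n
        skew = third_moment / sd ** 3

    return {"n_fixations": n, "mean": mean, "sd": sd, "skew": skew}
```

Returning `None` when no fixations are detected mirrors the situation in which fixation duration features are unavailable for a paragraph.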

Interaction-based features were also extracted to capture relationships between gaze behavior, text characteristics, and reading patterns. These features help to characterize how learners process written information, to differentiate reading patterns between neurodivergent and neurotypical learners, and to identify specific neurodivergent diagnoses.

In total, 24 features were extracted for analysis, including gaze-based, text-based, NLP, and interaction-based features. Table 2 presents a summary of the feature groups along with their descriptions.

| Feature Group | Number of Features | Description |
|---|---|---|
| Gaze-Based Features | 9 | Features related to gaze behavior, including gaze count, fixation counts, duration, and dispersion. |
| Text-Based Features | 4 | Features extracted from the text, including text response time and word count. |
| NLP Features | 2 | Natural language processing features, including sentiment and readability scores. |
| Interaction-Based Features | 9 | Interaction-based features capturing relationships between gaze behavior, NLP, and text characteristics. |

Data Processing

The initial dataset consisted of 354 participants and 14,160 instances (individual paragraphs). We first filtered out instances with low-quality gaze data, reducing the dataset to 332 participants and 10,955 instances. Instances were considered low quality and removed if the text response time was less than 1 second or if the ratio of gaze count to text response time was below 5. Finally, instances with missing values were excluded, further reducing the dataset to 9,964 instances while maintaining the participant count at 332. The missing values primarily came from the fixation duration features, which were unavailable in cases where eye-tracking data failed to capture consistent fixations [70]. Since fixation duration is crucial for assessing attentional patterns, instances with missing values were removed to maintain data quality and consistency.
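
The two-stage cleaning rule described above can be sketched as follows. The thresholds come from the text; the field names (`text_response_time`, `gaze_count`) and the use of `None` for missing values are assumptions made for illustration, with response time taken to be in seconds.

```python
MIN_RESPONSE_TIME_S = 1.0  # instances read in under 1 second are dropped
MIN_GAZE_RATE = 5.0        # minimum gaze count per unit of response time


def is_low_quality(instance):
    """Apply the low-quality gaze rule to one paragraph-level instance."""
    response_time = instance["text_response_time"]
    if response_time < MIN_RESPONSE_TIME_S:
        return True  # checked first, which also avoids dividing by zero
    return instance["gaze_count"] / response_time < MIN_GAZE_RATE


def has_missing_values(instance):
    """True if any feature (e.g. a fixation duration statistic) is missing."""
    return any(value is None for value in instance.values())


def clean_instances(instances):
    """Stage 1: drop low-quality gaze; stage 2: drop rows with missing values."""
    quality_ok = [row for row in instances if not is_low_quality(row)]
    return [row for row in quality_ok if not has_missing_values(row)]
```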

The cleaned dataset, containing 9,964 instances from a total of 332 participants, was then split into two subsets: one for predicting whether a learner was neurodivergent or neurotypical and another for predicting specific neurodivergent diagnoses. The first subset included all instances from the cleaned dataset to classify learners as neurodivergent or neurotypical. The second subset, for predicting specific neurodivergent diagnoses, was created by filtering the cleaned dataset to include only neurodivergent participants, resulting in 4,939 instances and 164 participants.

Machine Learning Approach

We explored five supervised classification models: Random Forest (RF), Extreme Gradient Boosting (XGB), Logistic Regression (LR), K-Nearest Neighbors (KNN), and Naïve Bayes. We selected these classical approaches based on prior research with similar data [22, 23, 27]. These classification models perform reliably on limited data and provide the interpretable predictions needed for guiding educational interventions. Due to the limited volume of data available, we did not explore deep learning approaches at this time.

We implemented a forward feature selection method which iteratively selects features that contribute the most to our models’ performance. The process starts with an empty feature set and adds the feature that provides the most improvement in model performance at each iteration until a stopping criterion is met. Throughout this process, each newly added feature is evaluated for its contribution to model accuracy, ensuring that only the most informative features are retained. This approach improves model performance while reducing overfitting by eliminating redundant or less relevant features. We used learner-level stratified 5-fold cross-validation to ensure that each fold contained a balanced proportion of classes while preventing instances from the same participant from appearing in both the training and testing sets. This approach helped maintain class distribution and reduce the risk of overfitting.
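
The two ideas in this paragraph, greedy forward selection and learner-level stratified folds, can be sketched as below. This is an illustration under stated assumptions, not the study's implementation: the stopping criterion (`min_gain`) is hypothetical because the paper does not specify one, `score_fn` stands in for a cross-validated model score, and fold assignment is a simple deterministic round-robin rather than a shuffled split.

```python
from collections import defaultdict


def learner_stratified_folds(learner_labels, n_folds=5):
    """Assign each learner (not each paragraph) to one fold, balancing classes.

    learner_labels maps learner id -> class label. Because whole learners
    are assigned, no learner's instances can appear in both train and test.
    """
    by_class = defaultdict(list)
    for learner, label in sorted(learner_labels.items()):
        by_class[label].append(learner)

    fold_of = {}
    offset = 0
    for label in sorted(by_class):
        # Deal learners of this class round-robin across the folds.
        for i, learner in enumerate(by_class[label]):
            fold_of[learner] = (i + offset) % n_folds
        offset += len(by_class[label])
    return fold_of


def forward_select(features, score_fn, min_gain=1e-6):
    """Greedy forward selection starting from an empty feature set.

    score_fn(list_of_features) returns a score where higher is better.
    Selection stops when the best candidate improves the score by less
    than min_gain (an assumed stopping criterion).
    """
    selected, best_score = [], float("-inf")
    remaining = list(features)
    while remaining:
        candidates = [(score_fn(selected + [f]), f) for f in remaining]
        top_score, top_feature = max(candidates)
        if top_score - best_score < min_gain:
            break
        selected.append(top_feature)
        remaining.remove(top_feature)
        best_score = top_score
    return selected, best_score
```

In practice `score_fn` would train a classifier on the training folds and return its mean score on the held-out folds, with each paragraph-level instance inheriting the fold of its learner.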

To address the imbalance present in the dataset, we applied the Synthetic Minority Oversampling Technique (SMOTE) [5] to the training set to synthesize new samples from the minority class. This was done for both neurodivergence prediction and specific diagnoses prediction. To evaluate the effectiveness of oversampling, we trained models both with and without SMOTE, allowing for a comparative analysis of its impact on model performance.
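
The core idea of SMOTE is to create each synthetic minority sample by interpolating between a minority sample and one of its nearest minority-class neighbours. The sketch below illustrates that idea only; it is not the implementation used in the study, and the function name, the default `k`, and the fixed seed are assumptions.

```python
import random


def smote_sketch(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic points from minority-class feature tuples."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # cannot have more neighbours than points

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Rank the other minority samples by distance to the base sample.
        others = [j for j in range(len(minority)) if j != i]
        others.sort(key=lambda j: sq_dist(base, minority[j]))
        neighbour = minority[rng.choice(others[:k])]
        # Place the new point at a random position on the connecting segment.
        gap = rng.random()
        synthetic.append(
            tuple(b + gap * (n - b) for b, n in zip(base, neighbour))
        )
    return synthetic
```

As in the procedure described above, oversampling of this kind would be applied to the training folds only, so that test folds contain only real instances.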

We evaluated the models using two performance metrics. Cohen’s kappa corrects for chance agreement and is therefore suited to imbalanced datasets; it ranges from -1 to 1, where 0 means no agreement beyond chance and 1 means complete agreement. The Area Under the Receiver Operating Characteristic Curve (AUROC) assesses the models’ ability to distinguish between neurodivergent and neurotypical categories, as well as between the presence and absence of specific neurodivergent diagnoses; it ranges from 0 to 1, where 0.5 represents chance performance and 1 represents perfect performance [38].
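
Both metrics can be computed directly from their definitions, as in the minimal sketch below. It assumes binary labels coded 0/1 and inputs given as Python lists; AUROC is computed through its pairwise-ranking interpretation (the probability that a randomly chosen positive instance receives a higher score than a randomly chosen negative one, with ties counted as one half).

```python
def cohen_kappa(y_true, y_pred):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(y_true)
    labels = set(y_true) | set(y_pred)
    # Observed agreement: fraction of instances where the labels match.
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n
    # Expected chance agreement from the marginal label frequencies.
    p_e = sum((y_true.count(c) / n) * (y_pred.count(c) / n) for c in labels)
    if p_e == 1:
        return 0.0  # assumption: treat the degenerate single-class case as 0
    return (p_o - p_e) / (1 - p_e)


def auroc(y_true, scores):
    """AUROC as the fraction of positive/negative pairs ranked correctly."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one instance of each class")
    wins = sum(
        (p > q) + 0.5 * (p == q) for p in positives for q in negatives
    )
    return wins / (len(positives) * len(negatives))
```

The pairwise form is quadratic in the number of instances, which is acceptable for illustration but slower than rank-based implementations on large datasets.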

After evaluating multiple classification models on the datasets, we selected the best performing models for distinguishing neurodivergent learners from neurotypical learners and predicting specific diagnoses. This was achieved using the kappa metric. Since kappa was the only metric used, its weight was set to 1.0, meaning the composite score was equivalent to the kappa value. The model with the highest composite score, that is, the best-performing model above chance, was selected.

We further used this selection method in choosing the best performing model by comparing models that used SMOTE to those that did not. This was done to determine whether applying SMOTE improved the models’ results or not. In the case where models achieved similar composite scores, the AUROC was used as a secondary metric to break the ties and identify the best model.
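
The selection rule (kappa first, AUROC as the tie-breaker) can be sketched as follows. The tolerance that defines "similar" composite scores is not given in the paper, so `tie_tol` is an assumed value, and the dictionary keys are hypothetical.

```python
def pick_best_model(results, tie_tol=0.005):
    """Pick the model with the highest kappa, breaking near-ties by AUROC.

    results is a list of dicts with keys 'name', 'kappa', and 'auroc'.
    Models whose kappa is within tie_tol of the best kappa count as tied.
    """
    best_kappa = max(r["kappa"] for r in results)
    tied = [r for r in results if best_kappa - r["kappa"] <= tie_tol]
    return max(tied, key=lambda r: r["auroc"])
```

The same function covers the SMOTE comparison: the variants trained with and without SMOTE are simply listed as separate candidates.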

To ensure clarity and reliability, and to avoid the bias associated with metrics like accuracy, kappa was selected as the primary metric for selecting the best model.

To understand the influence and direction of the selected features in both neurodivergence classification and specific diagnoses prediction, we employed SHapley Additive exPlanations (SHAP). Implementing SHAP allowed us to interpret how each feature contributed to the model’s predictions, revealing the magnitude and direction of its impact. SHAP enabled us to observe how the influence of these features varied across different diagnoses.
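
To make the idea behind SHAP concrete, the sketch below computes exact Shapley values for a single instance by enumerating every feature subset, with absent features replaced by a baseline value. This is an illustration of the underlying definition only: it is not the SHAP library's API or the estimator used in the study, and the choice of baseline is an assumption. Exact enumeration is exponential in the number of features, so it is practical only for small feature sets such as those chosen by forward selection.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict at x relative to a baseline input.

    predict takes a list of feature values and returns a number. Features
    outside a coalition are set to their baseline value (an assumption).
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i to this coalition.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi
```

The sign of each value gives the direction of a feature's influence on the prediction and its magnitude gives the strength; the values sum to the difference between the prediction at `x` and the prediction at the baseline.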

RESULTS

Predicting Neurodivergence – Neurodivergent versus Neurotypical

The focus here was to predict whether a learner is neurodivergent or neurotypical using their behavioral, cognitive, and textual features. This classification can help identify what differentiates a neurodivergent learner from a neurotypical learner, thereby enabling educators to tailor their teaching strategies to meet an individual’s needs.

Table 3 presents the results of the model for identifying whether a learner is neurodivergent. After evaluating the performance of the models with and without SMOTE, Logistic Regression (LR) without SMOTE was selected as the best model for predicting neurodivergence. The base rate of 0.50 indicates that half of the learners in the dataset were labelled as neurodivergent. The model achieved a kappa score of 0.14 and an AUROC of 0.60, showing agreement beyond chance. This result establishes the baseline performance of the LR model without a balancing technique.

| Participants | SMOTE | Model | Base rate | Kappa | AUROC |
|---|---|---|---|---|---|
| 332 | No | LR | 0.50 | 0.14 | 0.60 |

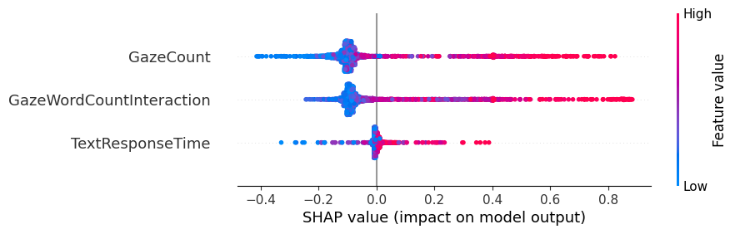

Of the three features selected by the forward feature selection technique for predicting neurodivergence, gaze count was the most influential, as seen by its wide range of SHAP values in Figure 1. It differentiated neurodivergence based on the direction and position of a person’s gaze, where high values of gaze count had a strong positive impact on predicting neurodivergence. The gaze-to-word count feature captured how gaze behavior relates to the number of words per paragraph, where gaze per word count positively influenced the prediction of neurodivergence. Text response time distinguished neurodivergence based on the time taken to respond to paragraphs of text. Higher response times contributed positively to predicting that a learner is neurodivergent. The gaze-to-word count and text response time features had smaller but significant contributions to predicting neurodivergence when compared to gaze count.

Predicting Neurodivergent Diagnoses

After classifying the 332 learners (9,964 instances) as either neurodivergent or neurotypical, we also trained models to predict specific diagnoses. Identifying these diagnoses provides a granular understanding of cognitive differences among the 164 neurodivergent learners (4,939 instances), allowing for more targeted interventions and personalized support strategies. Each diagnosis was treated as an individual target variable, and the models were evaluated on determining the absence (diagnosis = 0) or presence (diagnosis = 1) of that diagnosis. Given the overlap among neurodivergent diagnoses (a learner could report having more than one diagnosis), this approach enables the identification of co-occurring diagnoses, shedding more light on individual learning profiles. To better understand the variability within the neurodivergent population, models were trained and evaluated for each self-reported diagnosis. Table 4 summarizes the performance metrics for predicting specific diagnoses. The results reveal some variability in model performance across different neurodivergent groups.

| Diagnosis | Participants | SMOTE | Model | Base rate | Kappa | AUROC |
|---|---|---|---|---|---|---|
| ADD/ADHD | 164 | Yes | LR | 0.43 | 0.13 | 0.61 |
| Autism/ | 164 | No | RF | 0.38 | 0.11 | 0.56 |
| Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia | 164 | No | RF | 0.08 | 0.15 | 0.59 |
| Other | 164 | Yes | RF | 0.08 | 0.08 | 0.53 |
| Generalized Anxiety | 164 | No | RF | 0.51 | 0.17 | 0.58 |

Models predicting ADD/ADHD had moderate performance, indicating some ability to predict whether or not a learner had this diagnosis. The Autism/Asperger’s group exhibited slightly lower predictive reliability, suggesting greater variability in cognitive patterns associated with this diagnosis compared to ADD/ADHD. For learners with Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia, the models showed higher prediction consistency than for the Autism/Asperger’s group, suggesting that specific learning disabilities may have more predictable cognitive profiles. The Other Language/Reading/Math/Non-Verbal Learning Disorders group showed the lowest predictive performance. Models predicting Generalized Anxiety Disorder also demonstrated moderate reliability.

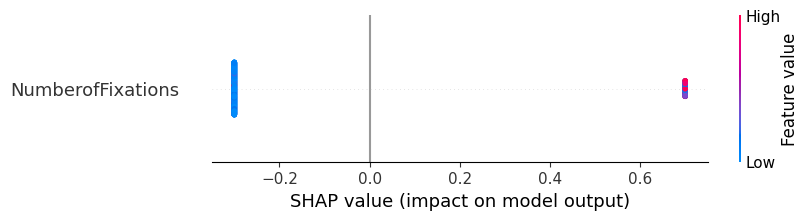

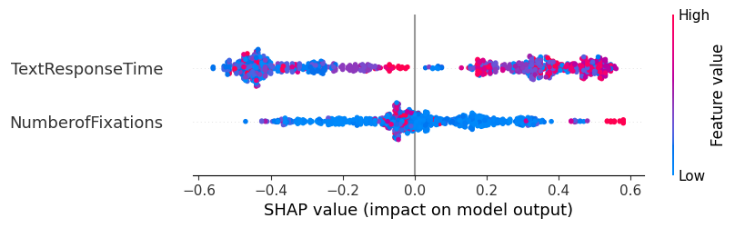

From the SHAP plot in Figure 2, the number of fixations had a positive impact on predicting ADD/ADHD when its value was high, indicating that learners with a higher number of fixation clusters during tasks are more likely to exhibit patterns associated with ADD/ADHD, whereas lower values had little impact on the model’s prediction of ADD/ADHD.

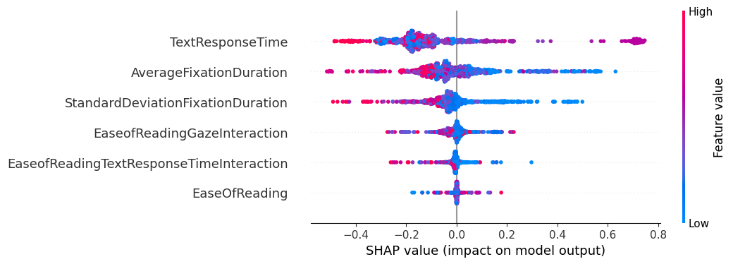

Five features were selected using forward feature selection, as shown in Figure 3. Text response time was the most impactful feature, with medium to high values contributing positively to predicting Autism/Asperger’s, suggesting that longer response times are indicative of this diagnosis. Low fixation duration standard deviation and low fixation duration average positively influenced predictions, highlighting the role of shorter fixation patterns. The interaction between ease of reading and gaze behaviors and the interaction between ease of reading and text response time exhibited a similar trend: lower values positively influenced the prediction of the diagnosis. Ease-of-reading features had a smaller influence than the other features, but high scores still positively influenced the prediction.

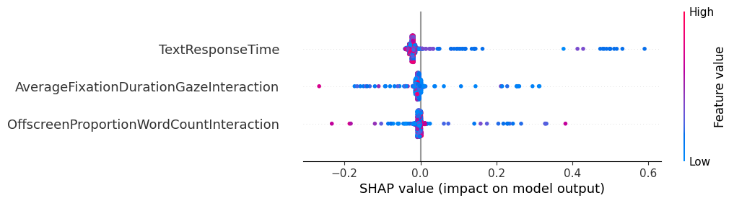

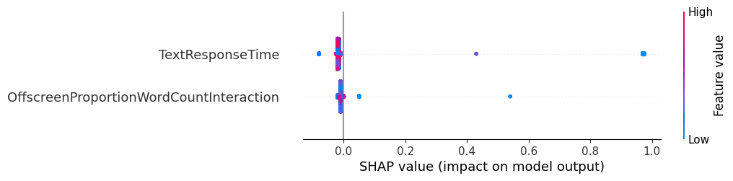

Three features were selected using forward feature selection for predicting Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia: text response time, average fixation duration-to-gaze, and offscreen proportion per word count, as seen in Figure 4. Text response time was the most impactful feature, with low values contributing positively to predicting this diagnosis, suggesting that shorter response times are indicative of this group of learners. Low values of fixation duration per gaze and of offscreen proportion per word count contributed both positively and negatively to predicting the diagnosis. This suggests that the relationship between these features and the diagnosis is complex, with varying fixation patterns and offscreen gaze behaviors influencing predictions differently across individual cases.
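For readers unfamiliar with forward feature selection, the sketch below illustrates the general greedy procedure: start with no features and repeatedly add the single feature that most improves a score, stopping when no candidate helps. It is not the study's pipeline. The feature names, the toy values, and the scoring function (AUROC of the summed feature values, standing in for a cross-validated model score) are all assumptions made for illustration.

```python
def auroc(labels, scores):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical toy data: one column per candidate feature, one label per learner.
LABELS = [1, 1, 1, 0, 0, 0]
DATA = {
    "text_response_time": [5, 6, 3, 2, 4, 1],
    "fixation_count": [2, 1, 3, 1, 0, 2],
    "noise": [1, 0, 1, 1, 0, 1],
}

def score_fn(selected):
    """Stand-in for model evaluation: AUROC of the sum of the selected features."""
    if not selected:
        return 0.5  # chance level when no features are used
    sums = [sum(DATA[f][i] for f in selected) for i in range(len(LABELS))]
    return auroc(LABELS, sums)

def forward_select(candidates, score, min_gain=0.0):
    """Greedily add the feature with the largest score gain until gains stop."""
    selected, best = [], score([])
    remaining = list(candidates)
    while remaining:
        top_score, top_feature = max((score(selected + [f]), f) for f in remaining)
        if top_score - best <= min_gain:
            break  # no remaining feature improves the score
        selected.append(top_feature)
        remaining.remove(top_feature)
        best = top_score
    return selected, best

selected, best = forward_select(DATA, score_fn)
print(selected, best)
```

In this toy run the uninformative `noise` column is never added, which mirrors why the selected feature sets differ in size across diagnoses: selection stops as soon as an extra feature no longer improves the score.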

The SHAP plot for predicting Other Language/Reading/Math/Non-Verbal Learning Disorders in Figure 5 reveals a complex relationship between the features and the model’s predictions. Text response time and offscreen proportion per word count both exhibit mixed effects on the negative side, reflecting variability in the behaviors of learners not predicted to have this diagnosis. Shorter text response times and lower offscreen proportion per word count appear to have more influence on predicting this diagnosis.

In predicting Generalized Anxiety Disorder, as shown in Figure 6, text response time had mixed effects, with both high and low values influencing the prediction positively and negatively. For the number of fixations, low values affect the prediction throughout the distribution, while high values fall mostly in regions with higher SHAP values and are therefore mostly indicative of Generalized Anxiety Disorder.

DISCUSSION

Eye-tracking research has established gaze metrics as reliable indicators of attention and cognitive processes [61], yet the high cost and intrusiveness of research-grade trackers have largely confined this work to laboratory settings [41, 71]. Recent developments in webcam-based eye trackers, specifically WebGazer, provide a scalable and cost-effective tracker that allows real-time gaze measurement in ecologically valid learning environments [71]. Because neurodivergent diagnoses including ADHD, Autism, and dyslexia are characterized by distinctive attentional and processing patterns [2, 24, 43], using WebGazer to collect gaze data from these learner groups allows us to understand those differences. In our study, participants completed a self-paced reading task while we recorded multi-stream data from both neurodivergent and neurotypical learners using WebGazer. Our analysis not only distinguished broad neurodivergence from typical profiles but also revealed diagnosis-specific gaze and reading patterns within neurodivergent groups. These findings demonstrate the feasibility of low-cost eye tracking for data-driven learner modeling and lay the groundwork for personalized interventions that respond to individual needs.

To interpret the most influential features that drove our prediction, we applied SHapley Additive exPlanations (SHAP) across both broad neurodivergence and diagnosis-specific models. The SHAP analysis revealed considerable variation in feature importance across different neurodivergent groups, emphasizing the diversity in cognitive and attentional processes among learners. Importantly, these findings must be considered alongside the machine learning model performance, as classification effectiveness varied across different neurodivergent profiles.
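As background on what a SHAP value represents, the sketch below computes exact Shapley values for one prediction of a toy model by enumerating feature subsets. It is a simplified illustration and not the analysis code used in this study: the linear model, its weights, the feature order, and the single baseline vector standing in for "missing" features are all assumptions. The SHAP framework [40] instead averages over background data and uses efficient approximations.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley attribution of model(x) - model(baseline) to each feature."""
    n = len(x)

    def value(subset):
        # Features outside the subset are replaced by their baseline value.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            # Shapley weight for coalitions of size k that exclude feature i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical model over [text_response_time, fixation_count, offscreen_proportion].
def toy_model(z):
    return 0.5 * z[0] + 2.0 * z[1] - 1.0 * z[2]

x = [4.0, 1.0, 3.0]         # one learner's (invented) feature values
baseline = [2.0, 1.0, 1.0]  # assumed reference values, e.g. feature means

phi = shapley_values(toy_model, x, baseline)
print([round(p, 3) for p in phi])
```

The attributions sum to the difference between the model output for this learner and the output at the baseline, so positive values push a prediction toward the diagnosis and negative values push it away, which is how the SHAP plots in Figures 2 to 6 are read.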

Main Findings

Although our sample size for this study was modest compared to large-scale machine learning studies, it remains substantial relative to most participant samples in neurodivergent research. Using this sample, we evaluated five classification models and found that logistic regression without SMOTE was the best-performing model for neurodivergence classification, achieving a kappa score of 0.14 and an AUROC of 0.60. While this result indicates only slight agreement beyond chance, it establishes a foundational baseline for further improvement.

For specific neurodivergent diagnoses, models exhibited varying performance. Considering the kappa scores, the best predictive performance was observed for Generalized Anxiety Disorder (AUROC = 0.58, Kappa = 0.17) and Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia (AUROC = 0.59, Kappa = 0.15), suggesting more consistent cognitive patterns within these groups. The model for ADD/ADHD also demonstrated moderate predictive capability, achieving the highest AUROC (AUROC = 0.61, Kappa = 0.13). Conversely, models for Autism/Asperger’s/ASD (AUROC = 0.56, Kappa = 0.11) and Other Language/Reading/Math/Non-Verbal Learning Disorders (AUROC = 0.53, Kappa = 0.08) exhibited lower predictive performance, likely due to greater variability in these diagnoses.

The SHAP analysis further highlighted the most influential features for both neurodivergence classification and specific neurodivergent diagnoses. For broad neurodivergence classification, gaze count was the most predictive feature, suggesting that differences in gaze behaviors are an important factor in distinguishing neurodivergent learners from neurotypical learners. Gaze-to-word count interaction and text response time were also influential, indicating that neurodivergent learners may process text differently, for example by taking longer to engage with the material.

When predicting specific neurodivergent profiles, different feature sets were identified as the most important for each classification, with commonalities observed across certain diagnoses. Text response time, selected through forward feature selection, was a common predictor across multiple diagnoses: Autism/Asperger’s, Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia, Other Language/Reading/Math/Non-Verbal Learning Disorders, and Generalized Anxiety Disorder.

For ADD/ADHD, fixation count was the strongest predictor, aligning with prior research that associates frequent attentional shifts with this disorder [39]. For Autism/Asperger’s/ASD, text response time and fixation duration were highly influential, consistent with studies indicating that individuals with ASD exhibit shorter fixation duration [34] and slower text engagement [15]. For Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia, text response time, fixation duration-to-gaze, and offscreen proportion-to-word count were the most predictive features, with shorter response times indicating a higher likelihood of diagnosis, while lower values of fixation duration-to-gaze and offscreen proportion-to-word count showed mixed effects, reflecting varied reading strategies among these learners. Given the heterogeneity of this group – encompassing multiple distinct learning differences – the observed variability reflects diverse cognitive and reading strategies across these diagnoses. For Other Language/Reading/Math/Non-Verbal Learning Disorders, text response time and offscreen proportion per word count were key, reflecting challenges in visual processing and reading fluency. For Generalized Anxiety Disorder, SHAP analysis showed a mix of positive and negative effects related to text response time and fixations, mirroring findings in anxiety research that suggest variability in engagement [45, 46, 57].

Applications

We begin with how this work should not be applied. Though encouraging, the model accuracies reported in this work are not suitable for medical diagnosis. Given the potential for false positives or negatives, gaze-based detectors should not be used instead of validated behavioral and cognitive assessments. This approach should not be used for diagnostic reasons or to single out any individual student. However, by leveraging webcam-based eye tracking combined with machine learning, this approach provides a scalable, non-invasive, and accessible method to create more personalized and adaptive learning experiences. By responding to learners’ interaction patterns, future technology, if designed mindfully, can provide real-time alterations that support various individual differences, regardless of official diagnosis.

Such adaptations or interventions may include adjusting text presentation, modifying reading complexity, or integrating multimodal content to support learners with ADHD, dyslexia, or other neurodivergent diagnoses. More overt interventions, such as visual prompts or structured scaffolding, could provide real-time support when indicators of cognitive overload or disengagement are detected. Given the moderate accuracy of the models, all interventions should be designed to “fail soft”, that is, to have no negative impact on learning in the event of a false positive.

Additionally, gaze and text interaction data could be integrated into teacher dashboards, offering real-time formative feedback on learners’ cognitive engagement and enabling educators to refine their teaching methods and enhance classroom inclusivity. Researchers, in turn, can use this technology to study cognitive processing differences across neurodivergent and neurotypical learners, informing educational policies and intervention strategies.

With all technology, especially that which collects learners’ personal data, it is critical that we respect learners’ privacy. All applications should be transparent about when and why gaze is being recorded and how it is used. The intended use of this technology should remain within educational support, rather than high-stakes decision-making. Applications will need to build trust with learners and instructors that the data collected will not be used for other purposes.

Limitations and Future Work

A primary limitation of this study is the need for model refinement to improve predictive accuracy. While our approach demonstrated moderate success in classifying neurodivergent learners (including a kappa of 0.13 for classifying ADD/ADHD diagnoses, which reflects slight agreement above chance), future research should focus on improving model performance by integrating multimodal data sources, such as facial expressions, keystroke dynamics, and physiological signals. With further advancements, the use of webcam-based eye tracking in combination with machine learning has the potential to contribute to more inclusive and adaptive educational environments that better support the needs of neurodivergent learners.

Another key limitation is the inherent variability in webcam-based eye-tracking accuracy. Unlike research-grade eye trackers, which provide high precision under controlled conditions, webcam-based tracking is more susceptible to external factors such as lighting conditions, webcam resolution, and participant positioning [18, 35, 74]. These inconsistencies can lead to missing or inaccurate gaze data, which may impact model performance [16] or overall data collection. While missing values were removed to maintain data quality, future work should explore improved gaze estimation algorithms and calibration techniques to enhance tracking robustness.

Beyond technical limitations, the dataset used in this study presents challenges related to sample size, diversity, and generalizability. Roughly 19% of Americans identify as neurodivergent [47]; however, specific diagnoses are less common within this population [67, 72], making it difficult to recruit large samples for individual diagnoses. The reliance on self-reported neurodivergent diagnoses introduces potential biases, such as misclassification or underreporting of neurodivergent traits. Additionally, although the models were trained on a diverse population of learners, there was insufficient representation across racial and demographic groups. This lack of diversity limits the generalizability of the findings, as gaze behaviors and cognitive processing patterns may vary across different cultural and demographic backgrounds [60]. Future research should aim to validate these models using clinically diagnosed populations and ensure broader representation to improve model applicability across various learner profiles.

Another important limitation is the heterogeneity within the Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia category. While these diagnoses were grouped together during data collection, the extent to which they share common cognitive and gaze-based patterns remains unclear. Future research should examine the distribution of diagnoses within the Dyslexia/Dyspraxia/Dyscalculia/Dysgraphia category and consider refining classification strategies to better account for distinct symptom profiles across these conditions.

We also note that this initial exploration used a single task (reading). Future work should consider whether the findings generalize to other learning tasks and environments, especially those that are more visually rich, such as intelligent tutoring systems and educational games.

CONCLUSION

This study explored the use of webcam-based eye tracking to predict whether a learner is neurodivergent or neurotypical and to identify specific neurodivergent diagnoses using machine learning models. Our findings demonstrate the feasibility of using gaze-based features and text characteristics to predict neurodivergence with moderate success. Notably, text response time emerged as a key predictor across multiple neurodivergent profiles, reinforcing its potential as a generalizable indicator of cognitive processing differences.

Overall, these findings underscore the potential of webcam-based eye tracking as a scalable, non-intrusive method for cognitive modeling in educational settings. While current models demonstrate only moderate predictive power, they pave the way for improvement through enhanced feature engineering, larger datasets, and advanced machine learning techniques. This study highlights the importance of personalized learning analytics and lays the foundation for further exploration into real-time adaptive interventions for neurodivergent learners.

REFERENCES

- Adhikari, S. and Stark, D.E. 2017. Video-based eye tracking for neuropsychiatric assessment. Annals of the New York Academy of Sciences. 1387, 1 (2017), 145–152. DOI:https://doi.org/10.1111/nyas.13305.

- Allen, G. and Courchesne, E. 2001. Attention function and dysfunction in autism. Frontiers in Bioscience-Landmark. 6, 3 (Feb. 2001), 105–119. DOI:https://doi.org/10.2741/allen.

- Bell, C. 2023. Neurodiversity in the general practice workforce. InnovAiT. 16, 9 (Sep. 2023), 450–455. DOI:https://doi.org/10.1177/17557380231179742.

- Carette, R., Elbattah, M., Cilia, F., Dequen, G., Guérin, J.-L. and Bosche, J. 2025. Learning to Predict Autism Spectrum Disorder based on the Visual Patterns of Eye-tracking Scanpaths. (Feb. 2025), 103–112.

- Chawla, N.V., Bowyer, K.W., Hall, L.O. and Kegelmeyer, W.P. 2002. SMOTE: Synthetic Minority Over-sampling Technique. Journal of Artificial Intelligence Research. 16, (Jun. 2002), 321–357. DOI:https://doi.org/10.1613/jair.953.

- Chiche, A. and Yitagesu, B. 2022. Part of speech tagging: a systematic review of deep learning and machine learning approaches. Journal of Big Data. 9, 1 (Jan. 2022), 10. DOI:https://doi.org/10.1186/s40537-022-00561-y.

- Clouder, L., Karakus, M., Cinotti, A., Ferreyra, M.V., Fierros, G.A. and Rojo, P. 2020. Neurodiversity in higher education: a narrative synthesis. Higher Education. 80, 4 (Oct. 2020), 757–778. DOI:https://doi.org/10.1007/s10734-020-00513-6.

- Cunff, A.-L.L., Giampietro, V. and Dommett, E. 2024. Neurodiversity and cognitive load in online learning: A focus group study. PLOS ONE. 19, 4 (Apr. 2024), e0301932. DOI:https://doi.org/10.1371/journal.pone.0301932.

- Darici, D., Reissner, C. and Missler, M. 2023. Webcam-based eye-tracking to measure visual expertise of medical students during online histology training. GMS journal for medical education. 40, 5 (2023), Doc60. DOI:https://doi.org/10.3205/zma001642.

- Dostálová, N. and Plch, L. 2023. A Scoping Review of Webcam Eye Tracking in Learning and Education. Studia paedagogica. 28, 3 (2023), 113–131. DOI:https://doi.org/10.5817/SP2023-3-5.

- Elnakib, A., Soliman, A., Nitzken, M., Casanova, M.F., Gimel’farb, G. and El-Baz, A. 2014. Magnetic Resonance Imaging Findings for Dyslexia: A Review. Journal of Biomedical Nanotechnology. 10, 10 (Oct. 2014), 2778–2805. DOI:https://doi.org/10.1166/jbn.2014.1895.

- Eye Tracking - jsPsych: https://www.jspsych.org/6.3/overview/eye-tracking/. Accessed: 2024-08-20.

- Fakhar, U., Elkarami, B. and Alkhateeb, A. 2023. Machine Learning Model to Predict Autism Spectrum Disorder Using Eye Gaze Tracking. 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (Dec. 2023), 4002–4006.

- Fikri, M.A., Santosa, P.I. and Wibirama, S. 2021. A Review on Opportunities and Challenges of Machine Learning and Deep Learning for Eye Movements Classification. 2021 IEEE International Biomedical Instrumentation and Technology Conference (IBITeC) (Oct. 2021), 65–70.

- Gonzalez, D.A., Glazebrook, C.M. and Lyons, J.L. 2015. The use of action phrases in individuals with Autism Spectrum Disorder. Neuropsychologia. 77, (Oct. 2015), 339–345. DOI:https://doi.org/10.1016/j.neuropsychologia.2015.09.019.

- Grootjen, J.W., Weingärtner, H. and Mayer, S. 2023. Highlighting the Challenges of Blinks in Eye Tracking for Interactive Systems. Proceedings of the 2023 Symposium on Eye Tracking Research and Applications (New York, NY, USA, May 2023), 1–7.

- Haddioui, I.E. and Khaldi, M. 2012. Learner Behaviour Analysis through Eye Tracking. (2012).

- Hagihara, H., Zaadnoordijk, L., Cusack, R., Kimura, N. and Tsuji, S. 2024. Exploration of factors affecting webcam-based automated gaze coding. Behavior Research Methods. 56, 7 (Oct. 2024), 7374–7390. DOI:https://doi.org/10.3758/s13428-024-02424-1.

- Harismithaa, L. and Sadasivam, G.S. 2022. Multimodal screening for dyslexia using anatomical and functional MRI data. Journal of Computational Methods in Sciences and Engineering. 22, 4 (Jul. 2022), 1105–1116. DOI:https://doi.org/10.3233/JCM-225999.

- Harshit, N.S., Sahu, N.K. and Lone, H.R. 2024. Eyes Speak Louder: Harnessing Deep Features From Low-Cost Camera Video for Anxiety Detection. Proceedings of the Workshop on Body-Centric Computing Systems (New York, NY, USA, Jun. 2024), 23–28.

- Henderson, J.M., Choi, W., Luke, S.G. and Desai, R.H. 2015. Neural correlates of fixation duration in natural reading: Evidence from fixation-related fMRI. NeuroImage. 119, (Oct. 2015), 390–397. DOI:https://doi.org/10.1016/j.neuroimage.2015.06.072.

- Hutt, S. and D’Mello, S.K. 2022. Evaluating Calibration-free Webcam-based Eye Tracking for Gaze-based User Modeling. Proceedings of the 2022 International Conference on Multimodal Interaction (New York, NY, USA, Nov. 2022), 224–235.

- Hutt, S., Wong, A., Papoutsaki, A., Baker, R.S., Gold, J.I. and Mills, C. 2024. Webcam-based eye tracking to detect mind wandering and comprehension errors. Behavior Research Methods. 56, 1 (Jan. 2024), 1–17. DOI:https://doi.org/10.3758/s13428-022-02040-x.

- Irvine, B., Elise, F., Brinkert, J., Poole, D., Farran, E.K., Milne, E., Scerif, G., Crane, L. and Remington, A. 2024. ‘A storm of post-it notes’: Experiences of perceptual capacity in autism and ADHD. Neurodiversity. 2, (Feb. 2024), 27546330241229004. DOI:https://doi.org/10.1177/27546330241229004.

- Jacobson, N.C. and Feng, B. 2022. Digital phenotyping of generalized anxiety disorder: using artificial intelligence to accurately predict symptom severity using wearable sensors in daily life. Translational Psychiatry. 12, 1 (Aug. 2022), 1–7. DOI:https://doi.org/10.1038/s41398-022-02038-1.

- Jain, T., Bhatia, S., Sarkar, C., Jain, P. and Jain, N.K. 2024. Real-Time Webcam-Based Eye Tracking for Gaze Estimation: Applications and Innovations. 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT) (Jun. 2024), 1–7.

- Jaiyeola, G.D., Wong, A.Y., Bryck, R.L., Mills, C. and Hutt, S. 2025. One Size Does Not Fit All: Considerations when using Webcam-Based Eye Tracking to Models of Neurodivergent Learners’ Attention and Comprehension. Proceedings of the 15th International Learning Analytics and Knowledge Conference (New York, NY, USA, Mar. 2025), 24–35.

- Jothi Prabha, A. and Bhargavi, R. 2022. Prediction of Dyslexia from Eye Movements Using Machine Learning. IETE Journal of Research. 68, 2 (Mar. 2022), 814–823. DOI:https://doi.org/10.1080/03772063.2019.1622461.

- Jović, A., Brkić, K. and Bogunović, N. 2015. A review of feature selection methods with applications. 2015 38th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO) (May 2015), 1200–1205.

- Kamalov, F., Elnaffar, S., Cherukuri, A. and Jonnalagadda, A. 2024. Forward feature selection: empirical analysis. Journal of Intelligent Systems and Internet of Things. Issue 1 (Jan. 2024), 44–54. DOI:https://doi.org/10.54216/JISIoT.110105.

- Kannan, R.M. and Sasikala, R. 2023. Predicting autism in children at an early stage using eye tracking. 2023 2nd International Conference on Vision Towards Emerging Trends in Communication and Networking Technologies (ViTECoN) (May 2023), 1–6.

- Kar, A. 2020. MLGaze: Machine Learning-Based Analysis of Gaze Error Patterns in Consumer Eye Tracking Systems. Vision. 4, 2 (Jun. 2020), 25. DOI:https://doi.org/10.3390/vision4020025.

- Kavitha, M., Naib, B.B., Mallikarjuna, B., Kavitha, R. and Srinivasan, R. 2022. Sentiment Analysis using NLP and Machine Learning Techniques on Social Media Data. 2022 2nd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE) (Apr. 2022), 112–115.

- Keehn, B., Brenner, L.A., Ramos, A.I., Lincoln, A.J., Marshall, S.P. and Müller, R.-A. 2009. Brief Report: Eye-Movement Patterns During an Embedded Figures Test in Children with ASD. Journal of Autism and Developmental Disorders. 39, 2 (Feb. 2009), 383–387. DOI:https://doi.org/10.1007/s10803-008-0608-0.

- Kraft, D., Bieber, G. and Fellmann, M. 2023. Reliability factor for accurate remote PPG systems. Proceedings of the 16th International Conference on PErvasive Technologies Related to Assistive Environments (New York, NY, USA, Aug. 2023), 448–456.

- Kulke, L., Atkinson, J. and Braddick, O. 2015. Automatic Detection of Attention Shifts in Infancy: Eye Tracking in the Fixation Shift Paradigm. PLOS ONE. 10, 12 (Dec. 2015), e0142505. DOI:https://doi.org/10.1371/journal.pone.0142505.

- Kuo, Y.-L., Lee, J.-S. and Hsieh, M.-C. 2014. Video-Based Eye Tracking to Detect the Attention Shift: A Computer Classroom Context-Aware System. International Journal of Distance Education Technologies (IJDET). 12, 4 (2014), 66–81. DOI:https://doi.org/10.4018/ijdet.2014100105.

- Kuvar, V., Kam, J.W.Y., Hutt, S. and Mills, C. 2023. Detecting When the Mind Wanders Off Task in Real-time: An Overview and Systematic Review. Proceedings of the 25th International Conference on Multimodal Interaction (New York, NY, USA, Oct. 2023), 163–173.

- Luna-Rodriguez, A., Wendt, M., Kerner auch Koerner, J., Gawrilow, C. and Jacobsen, T. 2018. Selective impairment of attentional set shifting in adults with ADHD. Behavioral and Brain Functions. 14, 1 (Nov. 2018), 18. DOI:https://doi.org/10.1186/s12993-018-0150-y.

- Lundberg, S. and Lee, S.-I. 2017. A Unified Approach to Interpreting Model Predictions. arXiv.

- Mantiuk, R., Kowalik, M., Nowosielski, A. and Bazyluk, B. 2012. Do-It-Yourself Eye Tracker: Low-Cost Pupil-Based Eye Tracker for Computer Graphics Applications. Advances in Multimedia Modeling (Berlin, Heidelberg, 2012), 115–125.

- Mariette, F. and Gawie, S. 2024. A Cognitive Stance to Enhance Learner Information Processing Ability in the Classroom: Structural Equation Modelling Approach. ResearchGate. (Nov. 2024). DOI:https://doi.org/10.13187/jare.2023.1.18.

- Maw, K.J., Beattie, G. and Burns, E.J. 2024. Cognitive strengths in neurodevelopmental disorders, conditions and differences: A critical review. Neuropsychologia. 197, (May 2024), 108850. DOI:https://doi.org/10.1016/j.neuropsychologia.2024.108850.

- McDowall, A. and Kiseleva, M. 2024. A rapid review of supports for neurodivergent students in higher education. Implications for research and practice. Neurodiversity. 2, (Feb. 2024), 27546330241291769. DOI:https://doi.org/10.1177/27546330241291769.

- Mogg, K., Millar, N. and Bradley, B.P. 2000. Biases in eye movements to threatening facial expressions in generalized anxiety disorder and depressive disorder. Journal of Abnormal Psychology. 109, 4 (2000), 695–704. DOI:https://doi.org/10.1037/0021-843X.109.4.695.

- Nelson, A.L., Quigley, L., Carriere, J., Kalles, E., Smilek, D. and Purdon, C. 2022. Avoidance of mild threat observed in generalized anxiety disorder (GAD) using eye tracking. Journal of Anxiety Disorders. 88, (May 2022), 102577. DOI:https://doi.org/10.1016/j.janxdis.2022.102577.

- Neurodiversity in the U.S.: 19% of Americans identify as neurodivergent | YouGov: https://today.yougov.com/health/articles/50950-neurodiversity-neurodivergence-in-united-states-19-percent-americans-identify-neurodivergent-poll. Accessed: 2025-04-16.

- Palan, S. and Schitter, C. 2018. Prolific.ac—A subject pool for online experiments. Journal of Behavioral and Experimental Finance. 17, (Mar. 2018), 22–27. DOI:https://doi.org/10.1016/j.jbef.2017.12.004.

- Papoutsaki, A., Sangkloy, P., Laskey, J., Daskalova, N., Huang, J. and Hays, J. 2016. Webgazer: scalable webcam eye tracking using user interactions. Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence (New York, New York, USA, Jul. 2016), 3839–3845.

- Pasarín-Lavín, T., García, T., Abín, A. and Rodríguez, C. 2024. Neurodivergent students. A continuum of skills with an emphasis on creativity and executive functions. Applied Neuropsychology: Child. 0, 0 (Sep. 2024), 1–13. DOI:https://doi.org/10.1080/21622965.2024.2406914.

- Prabha, A.J., Bhargavi, R. and Harish, B. 2021. An Efficient Machine Learning Model for Prediction of Dyslexia from Eye Fixation Events. New Approaches in Engineering Research Vol. 10. (Aug. 2021), 171–179. DOI:https://doi.org/10.9734/bpi/naer/v10/11914D.

- Rayner, K. 1992. Eye Movements and Visual Cognition: Introduction. Eye Movements and Visual Cognition: Scene Perception and Reading. K. Rayner, ed. Springer. 1–7.

- Rello, L. and Ballesteros, M. 2015. Detecting readers with dyslexia using machine learning with eye tracking measures. Proceedings of the 12th International Web for All Conference (New York, NY, USA, May 2015), 1–8.

- Robal, T., Zhao, Y., Lofi, C. and Hauff, C. 2018. Webcam-based Attention Tracking in Online Learning: A Feasibility Study. Proceedings of the 23rd International Conference on Intelligent User Interfaces (New York, NY, USA, Mar. 2018), 189–197.

- Rojas, J.-C., Marín-Morales, J., Ausín Azofra, J.M. and Contero, M. 2020. Recognizing Decision-Making Using Eye Movement: A Case Study With Children. Frontiers in Psychology. 11, (Sep. 2020). DOI:https://doi.org/10.3389/fpsyg.2020.570470.

- Roy, R. and Jain, S. 2021. Global Neurodiverse Support Systems : Primary Research Findings on Key Challenges and Optimized Solutions in Mumbai, India. The Physician. 6, 3 (2021), 1–22. DOI:https://doi.org/10.38192/1.6.3.9.

- Rutter, L.A., Norton, D.J. and Brown, T.A. 2021. Visual attention toward emotional stimuli: Anxiety symptoms correspond to distinct gaze patterns. PLOS ONE. 16, 5 (May 2021), e0250176. DOI:https://doi.org/10.1371/journal.pone.0250176.

- Saini, S., Roy, A.K. and Basu, S. 2023. Eye-Tracking Movements—A Comparative Study. Recent Trends in Intelligence Enabled Research (Singapore, 2023), 21–33.

- Santucci, V., Santarelli, F., Forti, L. and Spina, S. 2020. Automatic Classification of Text Complexity. Applied Sciences. 10, 20 (Jan. 2020), 7285. DOI:https://doi.org/10.3390/app10207285.

- Šašinková, A., Čeněk, J., Ugwitz, P., Tsai, J.-L., Giannopoulos, I., Lacko, D., Stachoň, Z., Fitz, J. and Šašinka, Č. 2023. Exploring cross-cultural variations in visual attention patterns inside and outside national borders using immersive virtual reality. Scientific Reports. 13, 1 (Nov. 2023), 18852. DOI:https://doi.org/10.1038/s41598-023-46103-1.

- Skaramagkas, V., Giannakakis, G., Ktistakis, E., Manousos, D., Karatzanis, I., Tachos, N.S., Tripoliti, E., Marias, K., Fotiadis, D.I. and Tsiknakis, M. 2023. Review of Eye Tracking Metrics Involved in Emotional and Cognitive Processes. IEEE Reviews in Biomedical Engineering. 16, (2023), 260–277. DOI:https://doi.org/10.1109/RBME.2021.3066072.

- Soh, O.-K. 2016. Examining the reading behaviours and performances of sixth-graders for reading instruction: evidence from eye movements. Journal of e-Learning and Knowledge Society. 12, 4 (Sep. 2016). DOI:https://doi.org/10.20368/1971-8829/1137.

- Solovyova, A., Danylov, S., Oleksii, S. and Kravchenko, A. 2020. Early Autism Spectrum Disorders Diagnosis Using Eye-Tracking Technology. arXiv.

- Sun, Y., Li, Q., Zhang, H. and Zou, J. 2018. The Application of Eye Tracking in Education. Advances in Intelligent Information Hiding and Multimedia Signal Processing (Cham, 2018), 27–33.

- Sütoğlu, E., Sunar, S., Sevinç, G., Dilbaz, P., Eraslan, Ş. and Yeşilada, Y. 2021. CPS: A Tool for Classification and Prediction of Autism with STA Using Eye-tracking Data. 2021 15th Turkish National Software Engineering Symposium (UYMS) (Nov. 2021), 1–6.

- Vijayan, K.K., Mork, O.J. and Hansen, I.E. 2018. Eye Tracker as a Tool for Engineering Education. Universal Journal of Educational Research. 6, 11 (Nov. 2018), 2647–2655. DOI:https://doi.org/10.13189/ujer.2018.061130.

- Walters, A.S. 2025. Neurodiversity in children: Accommodate or celebrate? The Brown University Child and Adolescent Behavior Letter. 41, 1 (2025), 8–8. DOI:https://doi.org/10.1002/cbl.30840.

- Wang, K.D., McCool, J. and Wieman, C. 2024. Exploring the learning experiences of neurodivergent college students in STEM courses. Journal of Research in Special Educational Needs. 24, 3 (2024), 505–518. DOI:https://doi.org/10.1111/1471-3802.12650.

- Wang, R., Bush-Evans, R., Arden-Close, E., Mcalaney, J., Hodge, S., Bolat, E., Thomas, S. and Phalp, K. 2024. Usability of Responsible Gambling Information on Gambling Operator’ Websites: A Webcam-Based Eye Tracking Study. Proceedings of the 2024 Symposium on Eye Tracking Research and Applications (New York, NY, USA, Jun. 2024), 1–3.

- Wass, S.V., Smith, T.J. and Johnson, M.H. 2013. Parsing eye-tracking data of variable quality to provide accurate fixation duration estimates in infants and adults. Behavior Research Methods. 45, 1 (Mar. 2013), 229–250. DOI:https://doi.org/10.3758/s13428-012-0245-6.

- Wong, A.Y., Bryck, R.L., Baker, R.S., Hutt, S. and Mills, C. 2023. Using a Webcam Based Eye-tracker to Understand Students’ Thought Patterns and Reading Behaviors in Neurodivergent Classrooms. LAK23: 13th International Learning Analytics and Knowledge Conference (New York, NY, USA, Mar. 2023), 453–463.

- Yang, Y., Zhao, S., Zhang, M., Xiang, M., Zhao, J., Chen, S., Wang, H., Han, L. and Ran, J. 2022. Prevalence of neurodevelopmental disorders among US children and adolescents in 2019 and 2020. Frontiers in Psychology. 13, (Nov. 2022). DOI:https://doi.org/10.3389/fpsyg.2022.997648.

- Yousefi, M.S., Reisi, F., Daliri, M.R. and Shalchyan, V. 2022. Stress Detection Using Eye Tracking Data: An Evaluation of Full Parameters. IEEE Access. 10, (2022), 118941–118952. DOI:https://doi.org/10.1109/ACCESS.2022.3221179.

- Yüksel, D. 2023. Investigation of Web-Based Eye-Tracking System Performance under Different Lighting Conditions for Neuromarketing. Journal of Theoretical and Applied Electronic Commerce Research. 18, 4 (Dec. 2023), 2092–2106. DOI:https://doi.org/10.3390/jtaer18040105.

- Zdarsky, N., Treue, S. and Esghaei, M. 2021. A Deep Learning-Based Approach to Video-Based Eye Tracking for Human Psychophysics. Frontiers in Human Neuroscience. 15, (Jul. 2021). DOI:https://doi.org/10.3389/fnhum.2021.685830.

- Adhikari, S. and Stark, D.E. 2017. Video-based eye tracking for neuropsychiatric assessment. Annals of the New York Academy of Sciences. 1387, 1 (2017), 145–152. DOI:https://doi.org/10.1111/nyas.13305.

- Bell, D.C. 2023. Neurodiversity in the general practice workforce. InnovAiT. 16, 9 (Sep. 2023), 450–455. DOI:https://doi.org/10.1177/17557380231179742.

- Carette, R., Elbattah, M., Cilia, F., Dequen, G., Guérin, J.-L. and Bosche, J. 2025. Learning to Predict Autism Spectrum Disorder based on the Visual Patterns of Eye-tracking Scanpaths. (Feb. 2025), 103–112.

- Chawla, N.V., Bowyer, K.W., Hall, L.O. and Kegelmeyer, W.P. 2002. SMOTE: Synthetic Minority Over-sampling Technique. Journal of Artificial Intelligence Research. 16, (Jun. 2002), 321–357. DOI:https://doi.org/10.1613/jair.953.

- Clouder, L., Karakus, M., Cinotti, A., Ferreyra, M.V., Fierros, G.A. and Rojo, P. 2020. Neurodiversity in higher education: a narrative synthesis. Higher Education. 80, 4 (Oct. 2020), 757–778. DOI:https://doi.org/10.1007/s10734-020-00513-6.

- Cunff, A.-L.L., Giampietro, V. and Dommett, E. 2024. Neurodiversity and cognitive load in online learning: A focus group study. PLOS ONE. 19, 4 (Apr. 2024), e0301932. DOI:https://doi.org/10.1371/journal.pone.0301932.

- Darici, D., Reissner, C. and Missler, M. 2023. Webcam-based eye-tracking to measure visual expertise of medical students during online histology training. GMS journal for medical education. 40, 5 (2023), Doc60. DOI:https://doi.org/10.3205/zma001642.

- Dostálová, N. and Plch, L. 2023. A Scoping Review of Webcam Eye Tracking in Learning and Education. Studia paedagogica. 28, 3 (2023), 113–131. DOI:https://doi.org/10.5817/SP2023-3-5.

- Fakhar, U., Elkarami, B. and Alkhateeb, A. 2023. Machine Learning Model to Predict Autism Spectrum Disorder Using Eye Gaze Tracking. 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (Dec. 2023), 4002–4006.

- Harshit, N.S., Sahu, N.K. and Lone, H.R. 2024. Eyes Speak Louder: Harnessing Deep Features From Low-Cost Camera Video for Anxiety Detection. Proceedings of the Workshop on Body-Centric Computing Systems (New York, NY, USA, Jun. 2024), 23–28.

- Hutt, S. and D’Mello, S.K. 2022. Evaluating Calibration-free Webcam-based Eye Tracking for Gaze-based User Modeling. Proceedings of the 2022 International Conference on Multimodal Interaction (New York, NY, USA, Nov. 2022), 224–235.

- Hutt, S., Wong, A., Papoutsaki, A., Baker, R.S., Gold, J.I. and Mills, C. 2024. Webcam-based eye tracking to detect mind wandering and comprehension errors. Behavior Research Methods. 56, 1 (Jan. 2024), 1–17. DOI:https://doi.org/10.3758/s13428-022-02040-x.

- Jacobson, N.C. and Feng, B. 2022. Digital phenotyping of generalized anxiety disorder: using artificial intelligence to accurately predict symptom severity using wearable sensors in daily life. Translational Psychiatry. 12, 1 (Aug. 2022), 1–7. DOI:https://doi.org/10.1038/s41398-022-02038-1.

- Jain, T., Bhatia, S., Sarkar, C., Jain, P. and Jain, N.K. 2024. Real-Time Webcam-Based Eye Tracking for Gaze Estimation: Applications and Innovations. 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT) (Jun. 2024), 1–7.

- Jothi Prabha, A. and Bhargavi, R. 2022. Prediction of Dyslexia from Eye Movements Using Machine Learning. IETE Journal of Research. 68, 2 (Mar. 2022), 814–823. DOI:https://doi.org/10.1080/03772063.2019.1622461.

- Kannan, R.M. and Sasikala, R. 2023. Predicting autism in children at an early stage using eye tracking. 2023 2nd International Conference on Vision Towards Emerging Trends in Communication and Networking Technologies (ViTECoN) (May 2023), 1–6.

- Kuvar, V., Kam, J.W.Y., Hutt, S. and Mills, C. 2023. Detecting When the Mind Wanders Off Task in Real-time: An Overview and Systematic Review. Proceedings of the 25th International Conference on Multimodal Interaction (New York, NY, USA, Oct. 2023), 163–173.

- Mariette, F. and Gawie, S. 2024. A Cognitive Stance to Enhance Learner Information Processing Ability in the Classroom: Structural Equation Modelling Approach. ResearchGate. (Nov. 2024). DOI:https://doi.org/10.13187/jare.2023.1.18.

- McDowall, A. and Kiseleva, M. 2024. A rapid review of supports for neurodivergent students in higher education. Implications for research and practice. Neurodiversity. 2, (Feb. 2024), 27546330241291769. DOI:https://doi.org/10.1177/27546330241291769.

- Nelson, A.L., Quigley, L., Carriere, J., Kalles, E., Smilek, D. and Purdon, C. 2022. Avoidance of mild threat observed in generalized anxiety disorder (GAD) using eye tracking. Journal of Anxiety Disorders. 88, (May 2022), 102577. DOI:https://doi.org/10.1016/j.janxdis.2022.102577.

- Pasarín-Lavín, T., García, T., Abín, A. and Rodríguez, C. Neurodivergent students. A continuum of skills with an emphasis on creativity and executive functions. Applied Neuropsychology: Child. 0, 0, 1–13. DOI:https://doi.org/10.1080/21622965.2024.2406914.

- Prabha, A.J., Bhargavi, R. and Harish, B. 2021. An Efficient Machine Learning Model for Prediction of Dyslexia from Eye Fixation Events. New Approaches in Engineering Research Vol. 10. (Aug. 2021), 171–179. DOI:https://doi.org/10.9734/bpi/naer/v10/11914D.

- Rello, L. and Ballesteros, M. 2015. Detecting readers with dyslexia using machine learning with eye tracking measures. Proceedings of the 12th International Web for All Conference (New York, NY, USA, May 2015), 1–8.

- Rojas, J.-C., Marín-Morales, J., Ausín Azofra, J.M. and Contero, M. 2020. Recognizing Decision-Making Using Eye Movement: A Case Study With Children. Frontiers in Psychology. 11, (Sep. 2020). DOI:https://doi.org/10.3389/fpsyg.2020.570470.

- Roy, R. and Jain, S. 2021. Global Neurodiverse Support Systems : Primary Research Findings on Key Challenges and Optimized Solutions in Mumbai, India. The Physician. 6, 3 (2021), 1–22. DOI:https://doi.org/10.38192/1.6.3.9.

- Soares, R. da S.J., Barreto, C. and Sato, J.R. 2023. Perspectives in eye-tracking technology for applications in education. South African Journal of Childhood Education. 13, 1 (Jun. 2023), 8. DOI:https://doi.org/10.4102/sajce.v13i1.1204.

- Soh, O.-K. 2016. Examining the reading behaviours and performances of sixth-graders for reading instruction: evidence from eye movements. Journal of e-Learning and Knowledge Society. 12, 4 (Sep. 2016). DOI:https://doi.org/10.20368/1971-8829/1137.

- Solovyova, A., Danylov, S., Oleksii, S. and Kravchenko, A. 2020. Early Autism Spectrum Disorders Diagnosis Using Eye-Tracking Technology. arXiv.

© 2025 Copyright is held by the author(s). This work is distributed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.