ABSTRACT

Improving competence requires practice, e.g., by solving tasks. The Self-Assessment task type is a new form of scalable online task that provides immediate feedback, a sample solution, and iterative improvement within the newly developed SAFRAN plugin. Effective learning requires not only suitable tasks but also their meaningful use within the student’s learning process. So far, the learning processes of students working on such Self-Assessment tasks have not been studied. Thus, SAFRAN was extended with activity logging that allows process mining. SAFRAN was used in a first-year computer science university course. Students' behavior was clustered and analyzed using log data. Three task completion behavior patterns were identified, indicating a positive, neutral, or negative impact on task processing. Differences in the use of feedback and sample solutions were also identified. The results are particularly relevant for instructors, who can better tailor adaptive feedback content to its target group. The analytics approach described may be useful for researchers who want to implement and study adaptive and personalized task processing support.

INTRODUCTION

Teaching can be described as a sequence of teaching-learning processes planned and designed by teachers [31]. As a central instrument for planning, controlling, and evaluating these processes, exercises in the form of tasks have long played a significant role in the learning context [24]. They serve to promote learning effectiveness by helping to apply and consolidate knowledge learned. This applies to both traditional and multimedia learning opportunities. Online tasks in particular offer many advantages. Students are able to work on them independent of location and time and receive immediate feedback on their performance. Students are able to learn and test their knowledge independently and, in some cases, self-directed, without the direct instruction and support of teachers as well as other students. However, there are also limitations. Learning in a virtual environment is, for example, apart from live sessions with teachers, predominantly an asynchronous learning process. When working on tasks students are left to their own devices and must show initiative if they do not understand something. This is a hurdle that not every student can overcome, which often leads to incorrect understanding or even abandonment of the task [16, 7]. Students can often receive feedback after completing a task, but this may not be helpful for or used by every student [15].

Self-Assessments as a competency-enhancing task type

The use of competency-enhancing (complex and problem-oriented) tasks that students can complete independently is intensively discussed by researchers and teachers [18, 22]. But not all traditional assessment strategies can be applied to online courses. For example, there are differences in the way a task is presented, the type of task, the complexity of the task, and appropriate support during the task. However, most tasks are at the lower two levels of Bloom's taxonomy [4] and are thus of lower complexity.

Recent developments enable scalable competency-enhancing tasks in online environments [12, 30, 8, 27, 29]. Self-Assessments can be used to set complex tasks; thus, they belong to the competency-enhancing task types. They are a special type of task in which students evaluate their own solution based on assessment criteria and thus assess their own performance without third parties having to act as mediators. Students thereby become feedback providers themselves and gain an understanding of what good work in the subject looks like, assuming they can accurately evaluate their own solution [2, 5]. However, Self-Assessment tasks alone are not self-explanatory when one's own solution contains an error, and they are difficult to scale up in a virtual learning environment. Therefore, additional feedback is needed that helps students correct their own solution. This problem was identified and solved by [12] and implemented in a Moodle virtual learning environment [30]. In this approach, students begin the Self-Assessment process by selecting a relevant learning task to complete. Then the task, including instructions, is displayed and students are asked to create and submit a solution. After that, a list of assessment criteria set by the instructor is presented, a sample solution is provided on demand, and students are asked to evaluate the submitted solution. After the students have evaluated the solution using the provided assessment criteria, feedback based on their Self-Assessment is automatically selected from a feedback database defined by the instructor and presented to the students. Using the feedback, students can then reflect on the quality of their learning products and improve their solution in a new iteration (create, upload, self-assess the improved solution again, receive feedback, and accept or reject another iteration) until they self-assess their solution as correct or good enough or decide to complete the exercise [12, 13].
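The self-assessment and feedback-selection step described above can be illustrated with a minimal Python sketch. The names `Criterion`, `select_feedback`, and `feedback_db`, the example texts, and the one-feedback-entry-per-criterion mapping are assumptions made purely for illustration; the approach in [12] and its implementation may select feedback differently (for example, per combination of criteria).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    criterion_id: str  # hypothetical identifier
    text: str          # statement the student checks (fulfilled) or leaves unchecked


def select_feedback(criteria, checked_ids, feedback_db):
    """Return the feedback entries for all criteria the student left unchecked."""
    return [
        feedback_db[c.criterion_id]
        for c in criteria
        if c.criterion_id not in checked_ids and c.criterion_id in feedback_db
    ]


# Made-up example task with two criteria and one instructor-defined feedback entry.
criteria = [
    Criterion("c1", "The solution names all process states."),
    Criterion("c2", "The solution explains the transitions between states."),
]
feedback_db = {"c2": "Review how a process moves between the ready and running states."}

# The student rated only criterion c1 as fulfilled.
feedback = select_feedback(criteria, checked_ids={"c1"}, feedback_db=feedback_db)
print(feedback)
```

In this sketch, a student who checks every criterion receives no corrective feedback, which corresponds to self-assessing the solution as correct and ending the iteration.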

This type of online task is well suited to examining the task processing behavior patterns of students and their handling of feedback and sample solutions: on the one hand, it consists of a reasonable set of possible task processing steps; on the other hand, it can be used to set and solve complex tasks of varying difficulty. For this reason, the process was adopted for use in an LMS.

Self-Assessment plugin: Improvement and Implementation

The prototypical implementation from [30] is a Moodle quiz type plugin. Because this quiz format made the intended iterative process of working on Self-Assessment tasks cumbersome and slow, support for the process was re-implemented as a Moodle activity plugin named SAFRAN (Self-Assessment with Feedback RecommendAtioNs), which enables a simpler and faster editing process. In addition, students can now write their solution directly into an editor field; previously, solutions could only be submitted by uploading .pdf, .png, and .jpg files. Furthermore, additional information, such as process steps, clicks on feedback links, clicks on sample solutions, and ratings of feedback with reasons for negative ratings, is saved in a log.

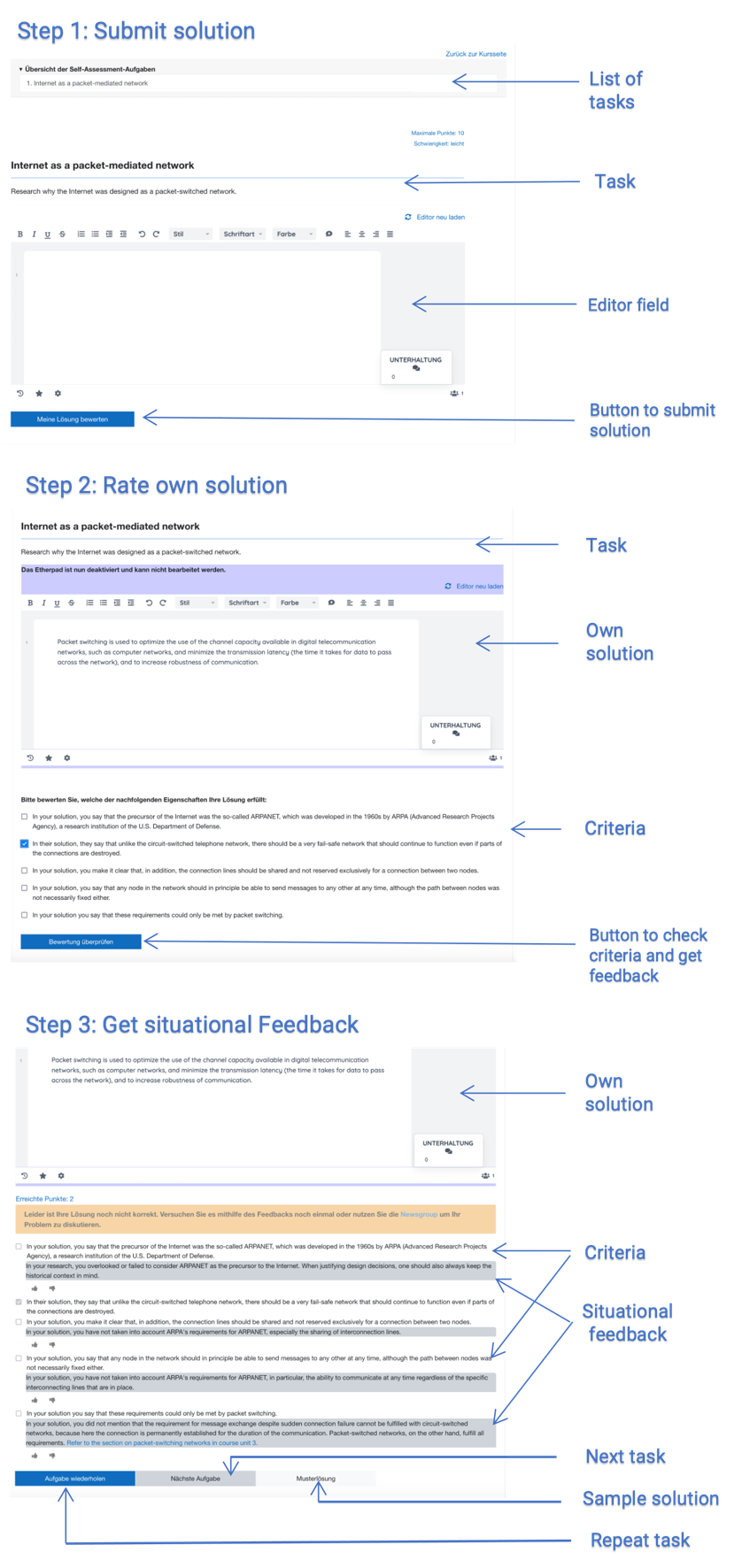

Figure 1 shows the user interface as well as the process of working on a Self-Assessment task in the enhanced SAFRAN plugin. In the first step, a student works on the task and submits a solution. In the second step, the student evaluates the solution by rating whether each indicated criterion is fulfilled by the submitted solution (checked) or not (unchecked). In the final step, the student receives feedback appropriate to this Self-Assessment. Here, the student can also access the sample solution. The task can then either be repeated to improve the solution based on the feedback or the provided sample solution, or the student may switch to another task, perform other activities within the course, or finish the task.

However, it is not yet sufficiently known how students actually process tasks of such type during the learning process. In order to provide students with usable and beneficial Self-Assessment tasks in a virtual learning environment, it is necessary to study how students deal with solving such tasks during their learning process. In this context, a closer look at the use of feedback and sample solutions is also relevant, as these are among the most important building blocks of the task-solving process.

To address these gaps, this study will answer the following two research questions:

RQ 1: How do students work with Self-Assessment Tasks in SAFRAN and what differences can be observed in the way they process them?

RQ 2: What behavioral patterns can be observed in the use of feedback and sample solutions by students when processing Self-Assessment tasks in SAFRAN?

Related Work

Since the start of digitalization in the educational environment and especially later on due to the transfer of traditional face-to-face teaching to technologically supported distance teaching accelerated by the COVID-19 pandemic [1], recent research has been concerned with the learning behavior of students in virtual learning environments. Research into this area provides information about the learning processes of different students. This knowledge is important to enable and support the successful acquisition of knowledge by students as much as possible [33]. An important point here is that knowledge acquisition, development and use can be identified through the observation and analysis of the handling of tasks [21]. Many studies use good grades as an indication of knowledge acquisition and use [19, 17, 34, 23, 9, 6, 3].

For example, in analyzing the activity logs of 124 participants from three Moodle courses at three different universities, [34] found a significant positive correlation between task completion and final grade. Their study also showed that students, who were very active within the course and had many logged events, received the highest grades. [17] found a similar result when analyzing the handling of Self-Assessment tasks. They found a positive correlation between engagement in the tasks and good performance in the final exam. For this purpose, they examined log data as well as the self-reports of 159 students of an Economic and Business Education university course.

In general, one could assume from this that students who actively engage with the course and complete assignments perform well. However, it remains unknown how these tasks were used for learning, for example, whether the tasks were mandatory and had a deadline. In that case, the completion of tasks was bound to obligatory aspects, such as time, correctness, and quantity, which could distort the picture of how tasks were handled. Indeed, in most studies, a strong increase in activity was observed during or shortly before a deadline [34, 9].

[25] recognized this lack of interpretability and limited their study to pure practice tasks without evaluation. They analyzed the potential relevance and impact of conducting non-evaluative assessments before rated assessments in an online mathematics course at a university. They found that the performance of practice tasks had a positive impact on the chances of passing the subject. However, as the complexity of the tasks increases, the relevance of participation in non-assessment practice tasks also increases. This result is consistent with standard learning theory [21]. [19] also investigated quiz-taking behavior. They analyzed students' interactions in several online quizzes from different courses and with different settings using process mining. Four different behaviors were identified, a standard quiz-taking behavior, a feedback-using behavior (students using feedback from previous attempts), the use of learning materials during the task, and multitasking behavior (performing other learning activities in the course while working on a task).

Thus, it is known that such behavior patterns exist, but little information is available on how students engage with tasks and whether there are differences in usage. Behavioral patterns of feedback and sample solutions use related to task completion are also not considered. However, this is important in order to gain a better understanding of how tasks are used and to provide appropriate learning opportunities for diverse students. Therefore, with this study we try to gain insight into the behavior of students in dealing with tasks and the corresponding feedback as well as sample solutions.

Methods

To identify task processing behavior patterns of students in a real learning environment with Self-Assessment tasks and corresponding feedback as well as sample solutions, the task processing behavior of students will be investigated by means of learning analytics. For this purpose, a time period within the course is chosen where it can be assumed that students are not engaged in exam preparations or settling in within the course. First, the study design as well as the used dataset will be explained, followed by data collection and analysis methods used.

Study Design and Dataset

For the study, 254 students of a computer science course on operating systems and computer networks were selected who volunteered to use an adaptive Moodle learning environment in winter term (WT) 2022 and agreed to the study by signing the consent form, which was approved in advance by the university's data protection officer. Students were informed about the use and handling of their data. Only anonymized data was used for analysis. Alternative printed and digital learning material was offered to non-participating students.

The course was divided into four course units. In each of these units, course material and exercises, such as multiple choice (23 occurrences), assignments corrected by tutor (30 occurrences) and Self-Assessments (41 occurrences), were provided. Assignments had a deadline and had to be submitted on time, all other exercises could be completed voluntarily and had no restrictions regarding deadline and repeatability. In addition, a usenet forum, recordings of live sessions, and questions for exam preparation were offered.

The Self-Assessment tasks [14] used in the study were evenly distributed over the individual learning units of the course. The level of difficulty of the tasks was determined by the teacher and was on average in the medium range. The number of Self-Assessment criteria ranged from 2 to 7.

The course started on October 1, 2022 and ended on February 3, 2023. The course was completed with a final exam at the end of the semester. In order to gain insight into the learning process, a period of eight weeks in the middle of the course was chosen, from October 17, 2022 to December 11, 2022. During this period, it was expected that students:

- have already completed the introduction of the learning environment.

- are aware of the materials and exercises offered in the course.

- are not yet in the exam preparation phase.

Table 1 lists all possible activities that the SAFRAN plugin distinguishes during task processing and stores in the log database. For each activity, the task ID, the number of attempts, the selected criteria with which the student has evaluated the solution, the student's current activity, the timestamp, the user ID, and the percentage of points achieved are stored. The activities that a student can perform while working on a task are limited by the plugin. Students are generally able to select a task from a list of tasks and thus open it (open_task_from_list). They can write a solution to the task in the editor and submit this solution for Self-Assessment (request_evaluation). They can evaluate their own solution based on criteria and get feedback for this self-evaluation (request_feedback). Afterwards, students can follow feedback links (clicked_on_link), view the sample solution (request_sample_solution), repeat the task (repeat_same_task), call up the next task in the list (open_next_task), or again select a task from the list (open_task_from_list). In addition, data on the feedback rating, a reason for each negative rating, and the kind of feedback were also collected.

| pre-processed task activities | meaning |
|---|---|
| questionid | ID of the task |
| attempt | number of attempts by student for each task |
| user_error_situation | number and order of selected criteria of a task iteration |
| state | activities of students within a task, including: cancle_task (go back to course page); clicked_on_link (clicked on a link in the feedback); open_next_task (used button to the next task); open_task_from_list (used task list to choose a task); repeat_same_task (repeated the same task); request_evaluation (handed in solution and started rating); request_feedback (rated the solution and got feedback); request_sample_solution (opened the sample solution); viewed_task_history (looked at their prior solution and solution rating) |
| datetime | time the activity is called |
| userid | ID of the user |
| achived_points_percentage | result of student's last Self-Assessment attempt, compared to the maximum achievable assessment result |
| feedbackid | ID of feedback |
| feedback_rating | positive (1) and negative (0) rating of feedback by user |
| feedback_reason | reason for negative feedback given by students |

From these traces of the participants' interaction with the Self-Assessment plugin, the following indicators were created and used:

- Number of attempts by students for each Self-Assessment.

- Number of sessions students have spent in SAFRAN.

- Number of Self-Assessment sessions per user

- Students' processing time for each Self-Assessment session.

- Number of task changes inside a session

- Number of completed tasks

- Number of sample solution calls per Self-Assessment task by students

- Time needed for students to view the sample solution after requesting feedback.

- Average percentage of points achieved on student Self-Assessment attempts

- Sequences of the different states and questions.
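To make the derivation of such indicators concrete, the following Python sketch computes a few of them from log records shaped like the fields `userid`, `questionid`, `state`, and `datetime` in Table 1. How sessions were delimited is not stated here, so the 30-minute inactivity threshold (`SESSION_GAP`) is an assumption, as are the function names and the made-up example records.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Assumption: a new session starts after 30 minutes without logged activity.
SESSION_GAP = timedelta(minutes=30)


def split_sessions(events, gap=SESSION_GAP):
    """Group one user's events into sessions separated by inactivity gaps."""
    sessions = []
    for event in sorted(events, key=lambda e: e["datetime"]):
        if sessions and event["datetime"] - sessions[-1][-1]["datetime"] <= gap:
            sessions[-1].append(event)
        else:
            sessions.append([event])
    return sessions


def indicators(events):
    """Compute a few per-user indicators from raw log events."""
    by_user = defaultdict(list)
    for event in events:
        by_user[event["userid"]].append(event)

    result = {}
    for userid, user_events in by_user.items():
        sessions = split_sessions(user_events)
        minutes = [
            (s[-1]["datetime"] - s[0]["datetime"]).total_seconds() / 60
            for s in sessions
        ]
        result[userid] = {
            "sessions": len(sessions),
            "mean_session_minutes": sum(minutes) / len(minutes),
            "tasks_worked_on": len({e["questionid"] for e in user_events}),
            "sample_solution_calls": sum(
                e["state"] == "request_sample_solution" for e in user_events
            ),
            "repetitions": sum(e["state"] == "repeat_same_task" for e in user_events),
        }
    return result


# Made-up example log for a single user.
t0 = datetime(2022, 10, 17, 9, 0)
log = [
    {"userid": 1, "questionid": 7, "state": "open_task_from_list", "datetime": t0},
    {"userid": 1, "questionid": 7, "state": "request_evaluation", "datetime": t0 + timedelta(minutes=3)},
    {"userid": 1, "questionid": 7, "state": "request_feedback", "datetime": t0 + timedelta(minutes=4)},
    {"userid": 1, "questionid": 7, "state": "request_sample_solution", "datetime": t0 + timedelta(minutes=5)},
    {"userid": 1, "questionid": 8, "state": "open_task_from_list", "datetime": t0 + timedelta(hours=5)},
]
summary = indicators(log)
print(summary[1])
```

Per-user dictionaries of this kind could then serve as feature vectors for clustering; a different session threshold would change the session-based indicators.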

Data Collection & Analysis

Given its objective, this study is exploratory: it uses k-means clustering [26] and process mining methods [28] to identify and map students' behavioral patterns when completing Self-Assessment tasks and to identify how they deal with feedback and sample solutions.
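As an illustration of k-means clustering and of how a cluster count can be compared using the within-cluster sum of squares and the average silhouette, the following self-contained Python sketch may help. It is not the code used in the study: the deterministic farthest-first initialization, the two features, and the toy values are assumptions chosen so that the example is reproducible.

```python
import math


def farthest_first(points, k):
    """Deterministic initialization: start at the first point, then repeatedly
    add the point that is farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    return centroids


def kmeans(points, k, iterations=100):
    """Lloyd's algorithm; returns (labels, centroids)."""
    centroids = farthest_first(points, k)
    labels = []
    for _ in range(iterations):
        labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points
        ]
        updated = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            # An empty cluster keeps its previous centroid.
            updated.append(
                tuple(sum(axis) / len(members) for axis in zip(*members))
                if members else centroids[j]
            )
        if updated == centroids:
            break
        centroids = updated
    return labels, centroids


def wcss(points, labels, centroids):
    """Within-cluster sum of squared distances to the assigned centroid."""
    return sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))


def mean_silhouette(points, labels):
    """Average silhouette over all points (requires at least two clusters)."""
    scores = []
    for i, p in enumerate(points):
        mean_dist = {}
        for c in set(labels):
            others = [q for j, q in enumerate(points) if labels[j] == c and j != i]
            if others:
                mean_dist[c] = sum(math.dist(p, q) for q in others) / len(others)
        if labels[i] not in mean_dist:  # singleton cluster: silhouette is 0
            scores.append(0.0)
            continue
        a = mean_dist[labels[i]]
        b = min(d for c, d in mean_dist.items() if c != labels[i])
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


# Made-up toy features per student: (session minutes, repetitions per task).
points = [(40, 3), (42, 2), (44, 3), (18, 1), (16, 1), (20, 0), (1, 0), (2, 0), (1, 1)]

results = {}
for k in (2, 3, 4):
    labels, centroids = kmeans(points, k)
    results[k] = (wcss(points, labels, centroids), mean_silhouette(points, labels), labels)
    print(k, round(results[k][0], 2), round(results[k][1], 3))
```

In practice, features on different scales would usually be standardized before clustering, and the WCSS curve would be inspected for an "elbow" alongside the silhouette values.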

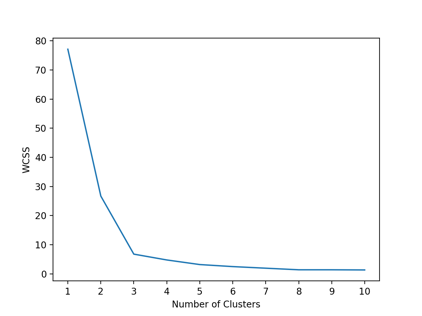

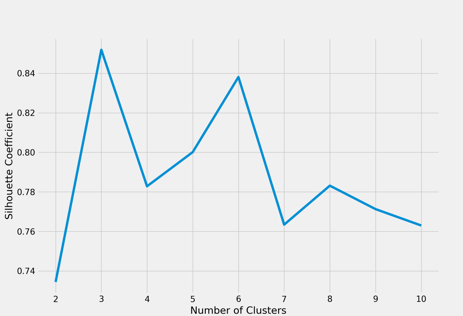

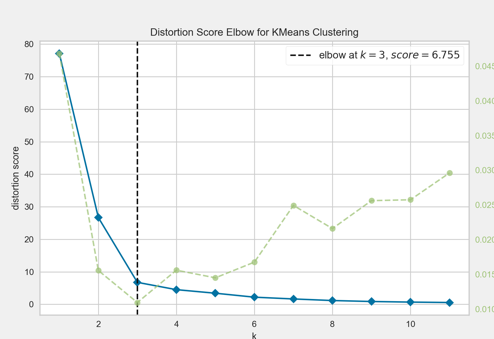

The trace data logged by the SAFRAN plugin were extracted from the database, cleaned, and processed for analysis. To determine the optimal number of clusters (groups of students), the within-cluster sum of squares (WCSS) [32] and the average silhouette measure [20] were used as clustering quality measures (Appendix 1, Figs. 6, 7, 8). Process mining is used to identify processes based on the trace data (Leno et al., 2018). The data are analyzed and mapped into a process model by using the sequence of events to construct a graph. Here, nodes correspond to activities, arcs represent relationships, and each node and arc is annotated with the corresponding frequency. The pm4py [10] Python process mining library was used to construct the process map.
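The idea of a frequency-annotated process map can be sketched without any library. The following Python example counts how often each activity occurs (node frequency) and how often one activity is immediately followed by another within the same case (arc frequency). It is only an illustration of the principle and does not reproduce pm4py's API; treating a session as the case and using integer timestamps are assumptions, and the two traces are made up.

```python
from collections import Counter, defaultdict


def directly_follows(events):
    """Count activities (nodes) and directly-follows pairs (arcs).

    events: iterable of (case_id, timestamp, activity) tuples.
    """
    traces = defaultdict(list)
    for case_id, timestamp, activity in events:
        traces[case_id].append((timestamp, activity))

    nodes, arcs = Counter(), Counter()
    for trace in traces.values():
        # Order each case by timestamp before pairing consecutive activities.
        activities = [activity for _, activity in sorted(trace)]
        nodes.update(activities)
        arcs.update(zip(activities, activities[1:]))
    return nodes, arcs


# Two made-up sessions using the state names from Table 1.
events = [
    ("s1", 1, "open_task_from_list"),
    ("s1", 2, "request_evaluation"),
    ("s1", 3, "request_feedback"),
    ("s1", 4, "request_sample_solution"),
    ("s1", 5, "repeat_same_task"),
    ("s2", 1, "open_task_from_list"),
    ("s2", 2, "request_evaluation"),
    ("s2", 3, "request_feedback"),
    ("s2", 4, "repeat_same_task"),
]
nodes, arcs = directly_follows(events)
for (source, target), count in sorted(arcs.items()):
    print(f"{source} -> {target}: {count}")
```

Running such a count separately for the sessions of each cluster would yield one process map per cluster, with the most frequent arcs indicating the dominant pathway.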

In addition, to understand the relationship and significance of the use of sample solutions during task processing, the Pearson correlation was applied [11]. The Pearson correlation coefficient measures the strength and direction of the linear relation between two indicators; the associated p-value indicates whether the coefficient is statistically significant. The coefficient ranges from −1 to +1, and values closer to −1 or +1 imply a stronger correlation. A negative correlation coefficient implies that a decrease in one indicator is associated with an increase in the other, and vice versa.
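For reference, the Pearson coefficient can be computed directly from its definition, the covariance of the two indicators divided by the product of their standard deviations. The sketch below is a plain-Python illustration with made-up values; the function name `pearson_r` and the example data are assumptions, and the significance test is not included.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return covariance / (spread_x * spread_y)


# Made-up values: achieved score (%) and sample solution calls per task.
scores = [100, 80, 60, 40, 20]
sample_solution_calls = [0, 1, 1, 2, 3]
r = pearson_r(scores, sample_solution_calls)
print(round(r, 2))
```

A value of `r` near −1 in such data would mean that tasks with lower scores tend to have more sample solution calls, without implying that one causes the other.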

Results and Discussion

General results on task processing, use of feedback and sample solutions by students

From a total of 254 observed participants, 144 dealt with Self-Assessment tasks at least once during the selected period. Thereby, the 41 available Self-Assessments were processed 1496 times.

During the study period, a student worked on an average of 11 tasks (SD=8.8). The average time needed to complete one task was 3.3 minutes (SD=3.2). Based on their own assessment, students achieved an average correctness of 80% (SD=24.2) of their solution. The tasks were completed in an average of four (SD=3.9) independent activity periods (sessions). A session lasted an average of 34 minutes (SD=34). Within a session, students switched tasks an average of 9.5 times (SD=9).

Tasks were repeated an average of 1.3 times independent of sessions (SD=2.2). The average time from requesting feedback to check one’s own solution to requesting the sample solution was 15 seconds (SD=4).

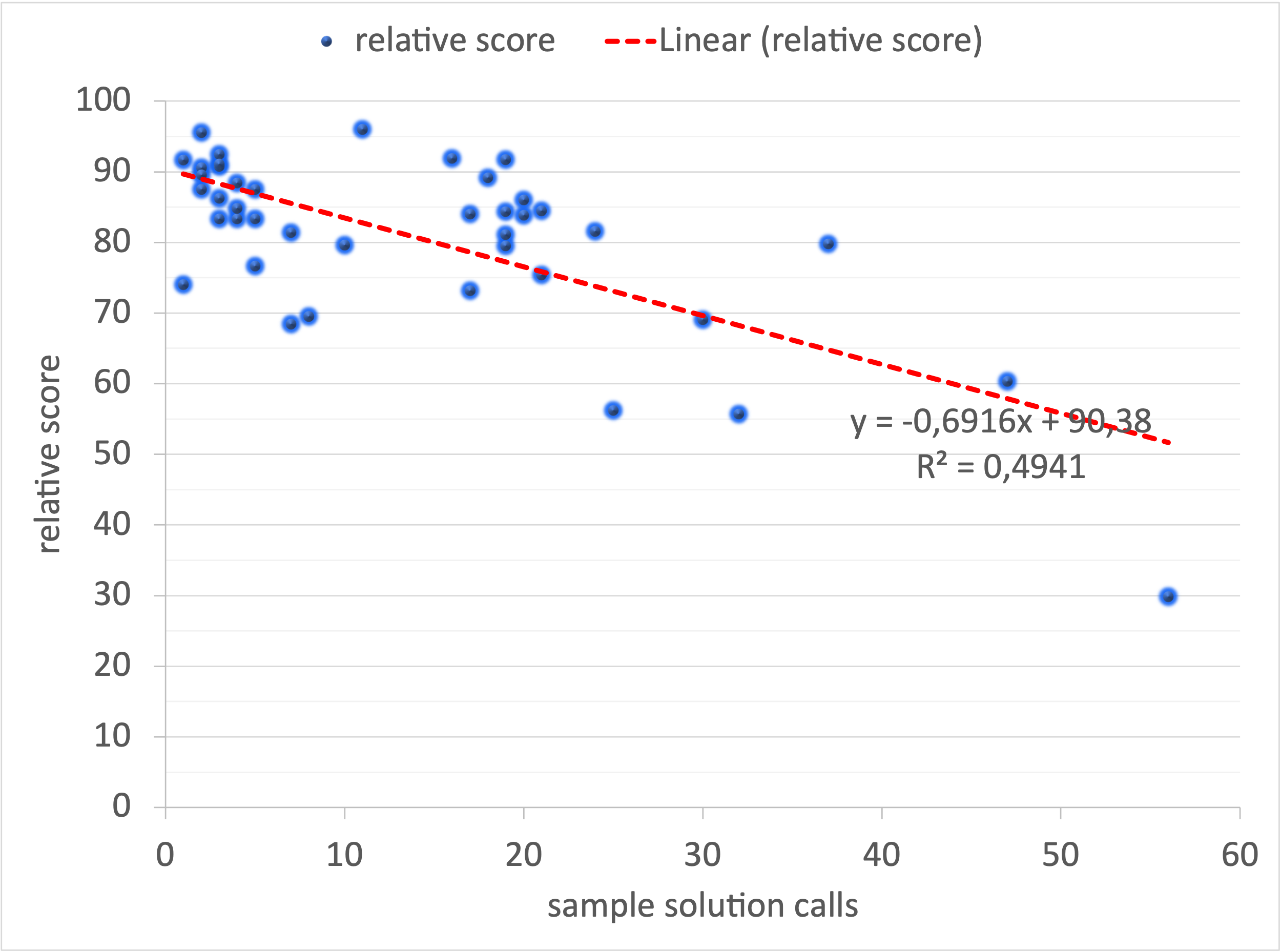

The sample solution was viewed a total of 570 times by students; relative to completed tasks, the share of sample solution views was M=38%. To understand the relationship and significance of the use of sample solutions during task processing (Fig. 2), the correlation between the achieved relative score and the number of sample solution calls per task was computed. It was strong, negative, and significant (r=−0.70, p<0.0001%). Thus, it can be assumed that a student with a low achieved task score is more likely to request the sample solution than a student with a high score.

Differences in students' Self-Assessment task processing

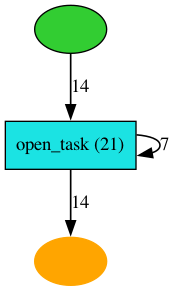

In general, three process clusters could be identified from the data. These clusters show respective process flows that the students performed during their Self-Assessment task processing sessions.

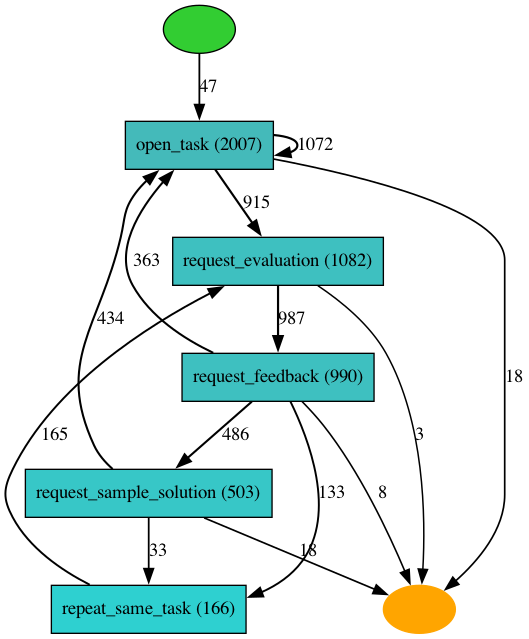

Cluster 1 (N=47) shows an intensive processing of tasks (Fig. 3). Students in this cluster worked on the tasks for a longer period of time (M=43 min, SD=26.1). They worked on 11 tasks on average (SD=7.1), with solutions that were partially or completely correct. Time on task was approximately 3.9 minutes. Based on their own assessment, students in this cluster achieved an average correctness of 81.5% (SD=23) of their solution and repeated a task an average of 2.5 times (SD=2.9). The sample solution was accessed 503 times by these students (50.1%). Students in this cluster seem interested in constructing their own correct solution (mastery of the task), as evidenced by an average of 2.5 repetitions leading to a relatively high correctness level. Students employed multiple pathways (i.e., activity sequences) mirroring different learning strategies, e.g., using feedback for improvement or using the sample solution to identify and correct deficits. Most frequently, students opened a task, requested a self-evaluation, requested feedback, requested the sample solution, and repeated the task. In the less frequently used pathway, students opened a task, requested a self-evaluation, requested feedback, and repeated the task. As indicated by the relatively high correctness level, the Self-Assessment task type supports students with different learning strategies.

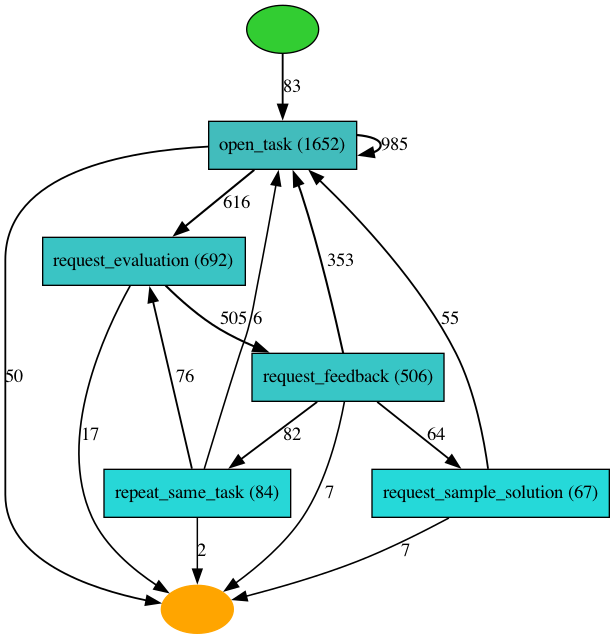

The second cluster (N=83) shows less intensive task processing (Fig. 4). Students in cluster 2 worked for an average of 17.6 minutes (SD=7.9) in one session. They worked on 8 tasks on average (SD=8.4), partially or completely. Time on task was approximately 2.2 minutes. According to their own assessment, students in this cluster achieved an average correctness of 80.2% (SD=25.3) of their solution and repeated a task an average of 0.8 times (SD=1.6). The sample solution was accessed 67 times by these students (13.2%).

Students typically opened a task, requested evaluation, requested feedback, and requested the sample solution, followed by repeating the same task, quitting, or opening the next task. The less frequent pathway was opening a task and then moving to the next task or quitting. Students in this cluster could either be successful learners who reached a high enough score with at most one iteration and in a shorter time than students in cluster 1, or they could exhibit superficial self-evaluation behavior by selecting all criteria as fulfilled and thereby reaching an inappropriately high score.

Similar results can be observed in the log data of mandatory assignments (reviewed by correctors). This suggests that the probability that students exhibit superficial self-evaluation behavior is rather low, but cannot be excluded (cluster 1 (N=28) = 76% assignment correctness, cluster 2 (N=41) = 72% assignment correctness, cluster 3 (N=4) = 73% assignment correctness).

The third cluster (N=14) exhibits an incomplete task completion process (Fig. 5). Students in this cluster never finished tasks and were limited to just opening different tasks. In doing so, they were active in a session for about 8 seconds on average (SD=0.2) and switched between tasks about 2 times (SD=16).

Students in this cluster typically opened a task and then opened another task or quit. Thus, these students seem to be interested in getting a quick impression of the task and may not be interested in working on it. Students in this cluster often disappear from the course without further activity in the LMS.

Conclusion

In this study, a process-oriented approach was used to analyze the behavior of students when dealing with Self-Assessment tasks and to identify differences (RQ1). In addition, the handling of feedback and sample solutions during the processing of Self-Assessment tasks was examined more closely (RQ2). For this purpose, the process model from [12] was adopted and the prototypical implementation from [30] was adapted and further developed. The newly created Moodle activity plugin SAFRAN offered students a simpler iteration of Self-Assessment tasks and also provided additional information about the activities carried out in connection with students’ task processing.

In general, three different ways of processing the Self-Assessment tasks in the SAFRAN plugin could be observed. These would be an intensive Self-Assessment task processing, as seen in cluster 1 (Fig.3), as well as a moderate task processing of Self-Assessment tasks as seen in cluster 2 (Fig. 4). The process flows in clusters 1 and 2 differ only minimally, since the process steps for solving a task are largely specified by the SAFRAN plugin. They differ only in the proportionality of the activities (pathways). Thus, in cluster 1 the proportionality is approximately uniformly distributed across all activity options, whereas students of cluster 2 predominantly choose one specific process per session and keep it. Students in clusters 1 and 2 differ significantly in the average time required per session and task, in the number of times a sample solution is requested, and in the average number of repetitions per task. All these values are higher in cluster 1 than in cluster 2. Cluster 3, however, is very different from the other two in that it shows only a minimal task process. This mostly consists of just opening the task. Students in this cluster could be classified as task browsers. Similar behavioral patterns could be detected by [12, 19].

Based on the average Self-Assessment scores achieved, both Cluster 1 and Cluster 2 appear to be beneficial behaviors. However, this is not true for Cluster 3, which has an unfavorable task processing pattern. Here, the system would have to adaptively respond to students with such task processing patterns and motivate them to perform tasks in a favorable manner.

Regarding the use of feedback and sample solutions while processing Self-Assessment tasks, it could be observed that students use both feedback and sample solutions. Sample solutions are generally accessed relatively often, but this can strongly vary per task. It seems that the achieved score has an influence on the use and the necessity of a sample solution. The lower the achieved score, the more often students request a sample solution. Based on the average size of the feedback texts (approx. 33 words) and the amount of time students spend on the evaluation page (approx. 15 seconds) that provides feedback, it can be assumed that the feedback has been fully read and taken into account. The fact that students still call up the sample solution despite having received feedback could be due to the fact that for some students the feedback does not seem to be sufficient, so that efforts are apparently made to obtain the information that is still missing with the help of the sample solution. Such behavior indicates that the information content of existing feedback is not always sufficient and should be enriched with additional information. However, this should be adaptively adjusted to the specific needs of students according to their diversity-related characteristics.

Limitations

The study was conducted for only one course and one subject area, which limits the generalizability of the results. Further studies should therefore also include other subject areas. Since the study period is relatively short compared to the entire semester, no change in learning behavior over time was examined. Also, beneficial task processing patterns were determined based only on Self-Assessment results and the intended Self-Assessment task processing. Since we do not yet have results from the final exam, the benefits of the identified task processing patterns cannot be confirmed yet.

In addition, there were also students who did not show any activities of Self-Assessment task processing. However, this does not necessarily imply drop-out, since they could have done other activities in the course, such as reading or mandatory assignments.

ACKNOWLEDGMENTS

This research was supported by the Center of Advanced Technology for Assisted Learning and Predictive Analytics (CATALPA) of the FernUniversität in Hagen, Germany.

REFERENCES

1. Adedoyin, O.B. and Soykan, E. 2023. Covid-19 pandemic and online learning: The challenges and opportunities. Interactive Learning Environments. 31, 2 (2023), 863–875.

2. Andrade, H.L. 2019. A critical review of research on Student Self-Assessment. Frontiers in Education. 4:87, (2019).

3. Andreswari, R., Fauzi, R., Valensia, L. and Chanifah, S. 2022. Conformance analysis of student activities to evaluate implementation of outcome-based education in early of pandemic using process mining. SHS Web of Conferences. 139, (2022), 03018.

4. Bloom, B.S. 1956. Taxonomy of educational objectives: The classification of educational goals by a committee of College and University Examiners. Longmans.

5. Brown, G.T. and Harris, L.R. 2013. Student Self-Assessment. SAGE Handbook of Research on Classroom Assessment. (2013), 367–393.

6. Chung, C.-Y. and Hsiao, I.-H. 2020. Investigating patterns of study persistence on self-assessment platform of programming problem-solving. Proceedings of the 51st ACM Technical Symposium on Computer Science Education. (2020), 162–168.

7. Coussement, K., Phan, M., De Caigny, A., Benoit, D.F. and Raes, A. 2020. Predicting student dropout in subscription-based online learning environments: The beneficial impact of the logit leaf model. Decision Support Systems. 135, (2020), 113325.

8. Csapó, B. and Molnár, G. 2019. Online diagnostic assessment in support of personalized teaching and learning: The edia system. Frontiers in Psychology. 10:1522, (2019).

9. Fouh, E., Breakiron, D.A., Hamouda, S., Farghally, M.F. and Shaffer, C.A. 2014. Exploring students learning behavior with an interactive etextbook in computer science courses. Computers in Human Behavior. 41, (2014), 478–485.

10. Fraunhofer Institute for Applied Information Technology (FIT), process mining group 2019. PM4PY. State-of-the-art-process mining in Python.

11. Freund, R.J., Wilson, W.J. and Sa, P. 2006. Regression analysis: Statistical modeling of a response variable. Elsevier Academic Press.

12. Haake, J.M., Seidel, N., Karolyi, H. and Ma, L. 2020. Self-Assessment mit High-Information Feedback. In: Zender, R., Ifenthaler, D., Leonhardt, T. & Schumacher, C. (ed.). DELFI 2020–Die 18. Fachtagung Bildungstechnologien der Gesellschaft für Informatik e.V. (Bonn, 2020), Bonn: Gesellschaft für Informatik e.V., 145–150.

13. Haake, J.M., Kasakowskij, R., Karolyi, H., Burchard, M. and Seidel, N. 2021. Accuracy of self-assessments in higher education. In: Kienle, A., Harrer, A., Haake, J. M. & Lingnau, A. (ed.), DELFI 2021 (Virtual, 2021), Bonn: Gesellschaft für Informatik e.V., 97–108.

14. Haake, J.M., Ma, L. and Seidel, N. 2021. Self-Assessment Questions - Operating Systems and Computer Networks. DOI= 10.5281/zenodo.5021350, 2021.

15. Hattie, J. and Timperley, H. 2007. The power of feedback. Review of Educational Research. 77, 1 (2007), 81–112.

16. Hew, K.F. and Cheung, W.S. 2014. Students’ and instructors’ use of massive open online courses (moocs): Motivations and challenges. Educational Research Review. 12, (2014), 45–58.

17. Ifenthaler, D., Schumacher, C. and Kuzilek, J. 2022. Investigating students' use of self-assessments in higher education using learning analytics. Journal of Computer Assisted Learning. 39, 1 (2022), 255–268.

18. Jordan, A., Ross, N., Krauss, S., Baumert, J., Blum, W., Neubrand, M., Löwen, K., Brunner, M. and Kunter, M. 2006. Klassifikationsschema für Mathematikaufgaben: Dokumentation der Aufgabenkategorisierung im COACTIV-Projekt [Classification scheme for mathematics tasks: Documentation of task categorization in the COACTIV project]. Max-Planck-Institut für Bildungsforschung [Max Planck Institute for Human Development].

19. Juhaňák, L., Zounek, J. and Rohlíková, L. 2019. Using process mining to analyze students' quiz-taking behavior patterns in a learning management system. Computers in Human Behavior. 92, (2019), 496–506.

20. Kaufman, L. and Rousseeuw, P.J. 2009. Finding groups in data: An introduction to cluster analysis. John Wiley & Sons.

21. Kleinknecht, M. 2019. Aufgaben und Aufgabenkultur. Zeitschrift für Grundschulforschung. 12, 1 (2019), 1–14.

22. Klieme, E. 2018. Unterrichtsqualität [Teaching quality]. Handbuch Schulpädagogik. Waxmann. 393–408.

23. Kokoç, M., Akçapınar, G. and Hasnine, M.N. 2021. Unfolding Students’ Online Assignment Submission Behavioral Patterns using Temporal Learning Analytics. Educational Technology & Society. 24, 1 (2021), 223–235.

24. Maier, U., Kleinknecht, M., Metz, K., Schymala, M. and Bohl, T. 2010. Entwicklung und Erprobung eines Kategoriensystems für die fächerübergreifende Aufgabenanalyse. Schulpädagogische Untersuchungen Nürnberg. 38, (2010).

25. Martínez-Carrascal, J.A. and Sancho-Vinuesa, T. 2022. Using Process Mining to determine the relevance and impact of performing non-evaluative quizzes before evaluative assessments. Learning Analytics Summer Institute Spain (LASI Spain) (Salamanca, June 20–21, 2022), 52–60.

26. Murphy, K.P. 2012. Machine learning: A probabilistic perspective. MIT Press.

27. Priemer, B., Eilerts, K., Filler, A., Pinkwart, N., Rösken-Winter, B., Tiemann, R. and Zu Belzen, A.U. 2019. A framework to foster problem-solving in STEM and computing education. Research in Science & Technological Education. 38, 1 (2019), 105–130.

28. Romero, C. 2011. Handbook of Educational Data Mining. CRC Press.

29. Rudian, S. and Pinkwart, N. 2021. Generating adaptive and personalized language learning online courses in Moodle with individual learning paths using templates. 2021 International Conference on Advanced Learning Technologies (ICALT). (2021), 53–55.

30. Steinkohl, K., Burchart, M., Haake, J.M. and Seidel, N. 2021. Self-assess question type plugin for moodle, https://github.com/D2L2/qtype_selfassess.

31. Terhart, E. 1994. SchulKultur Hintergründe, Formen und Implikationen eines schulpädagogischen Trends [SchoolCulture Backgrounds, forms and implications of a school pedagogical trend]. Zeitschrift für Pädagogik. 40, 5 (1994), 685–699.

32. Thorndike, R.L. 1953. Who belongs in the family? Psychometrika. 18, 4 (1953), 267–276.

33. Wei, X., Saab, N. and Admiraal, W. 2021. Assessment of cognitive, behavioral, and Affective Learning Outcomes in massive open online courses: A systematic literature review. Computers & Education. 163, (2021), 104097.

34. Zhang, Y., Ghandour, A. and Shestak, V. 2020. Using learning analytics to predict students performance in Moodle LMS. International Journal of Emerging Technologies in Learning (iJET). 15, 20 (2020), 102.

APPENDIX

© 2023 Copyright is held by the author(s). This work is distributed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.