ABSTRACT

Educational researchers have analyzed the impact of VR on education extensively, measuring the learners’ experience, engagement, and motivation when using VR. Most studies report that VR has a positive impact on education because learners are immersed in the Virtual Reality Learning Environment (VRLE) and interact with virtual objects from a first-person perspective. Some experiments, however, claim that VR increases presence but decreases learning because of extraneous cognitive load. Given these contradictory claims, it has become important to understand the learning processes that take place while using VR. Existing studies of learning processes in VR have considered the cognitive and affective factors that lead to learning outcomes, using data collected from pre-tests, post-tests, self-reported questionnaires, interviews, surveys, physiological devices, and human observers. However, no study has used data on the learners’ behavior while interacting with the VRLE to understand the learning processes, because no efficient data collection mechanism existed that could log all of the learners’ interaction behavior. Hence, we developed a data collection mechanism that automatically logs all of the learners’ interaction behavior in real time along with timestamps. We also deployed this mechanism in a VRLE and conducted a study with 14 participants. The purpose of this doctoral thesis is to understand the learning processes in a VRLE through the lens of the learners’ interaction behavior. The analysis of the collected data can further be used to predict the learners’ performance based on their interaction behavior.

1. INTRODUCTION AND RELATED WORK

Virtual Reality (VR) is a technology that lets users experience a 3D virtual world by immersing themselves in it and interacting with its virtual objects from a first-person perspective, much as they would in the real world. Owing to its characteristics of immersion, interaction, and imagination [15, 14, 10], VR has found applications in domains such as the automotive industry, the military, healthcare, sports, and education. In education, learners use VR to acquire knowledge and skills on learning content that involves 1) invisible phenomena such as electricity and magnetism [9], 2) microscopic concepts such as DNA [12] and a human artillery system [8], and 3) dangerous and hazardous procedures such as fire fighting and welding [11]. As the use of VR in education grows, the amount of research on VR in education has also increased exponentially over the last decade.

The experiments conducted so far have used data collected from 1) pre-tests and post-tests to measure the impact of VR on learning, 2) self-reported questionnaires, interviews, and surveys [10] to measure the user experience, engagement, and usability of VR systems and to compare VR-aided and non-VR-aided learning systems [1], 3) devices such as i) physiological sensors to assess the affective state of the learners while they perform tasks [3], ii) eye trackers to assess the learners’ intended area of interest [13], iii) body trackers to adapt the size of the virtual objects to the size of the users, and iv) the orientation of head-mounted displays (HMD) and handheld controllers (HHC) to assess response time, and 4) human observers to understand the behavior and procedural performance of the learners [8]. The learning outcomes measured in existing studies using these data collection mechanisms are 1) cognitive skills (knowledge acquisition, knowledge retention, and knowledge transfer), 2) affective skills (motivation, satisfaction, etc.), and 3) procedural skills (sequential execution, duration of completion) [10].

Although much work has measured learning outcomes, little has been done to understand the learning processes, that is, how learners learn in a Virtual Reality learning environment (VRLE). The few studies that address learning processes have considered only cognitive and affective factors [4, 2, 7]. Procedural skills, although they constitute one of the learning outcomes, have not been considered. This could be because procedural skills are measured using behavioral data provided by human observers [8]. Such data can be biased and must also satisfy interrater reliability tests [7]. Hence there is no efficient mechanism to collect behavioral data while learners interact with a VRLE. Moreover, the studies conducted to understand the learning processes used desktop VR rather than an immersive VR learning environment [6]. A desktop VR system uses a mouse and keyboard for interaction, whereas in immersive VR systems interactions happen through the buttons of handheld controllers (HHC). Because no mechanism exists to collect interaction behavioral data (IBD) in an immersive VRLE, no research has examined learning processes in immersive VR systems by considering the learners’ behavior.

Therefore, this research thesis aims to explore learning processes using IBD collected in a VR learning environment. To reach this aim, the thesis follows three main phases. First, the development of a specialized IBD collection mechanism and its deployment in an immersive VRLE. Second, the collection of interaction behavioral data from studies conducted in the VRLE to explore the learning processes. Third, the development of a VR-based adaptive tutoring system that can provide personalized adaptive feedback, scaffolds, and VR learning content to the learners based on their interaction behavior. The contributions of the research project are:

1) Measuring the impact of VR on learning in the subject area of electronics engineering, as VR studies in electronics engineering are limited.

2) Deployment of the developed IBD collection mechanism, as no system existed to log the learners’ behavior in real time.

3) An approach to predict learning by fitting a regression model to the IBD and the performance of the learners.

4) An approach to model the learners’ behavior using the logged IBD and process mining techniques.

2. CURRENT RESEARCH PROGRESS

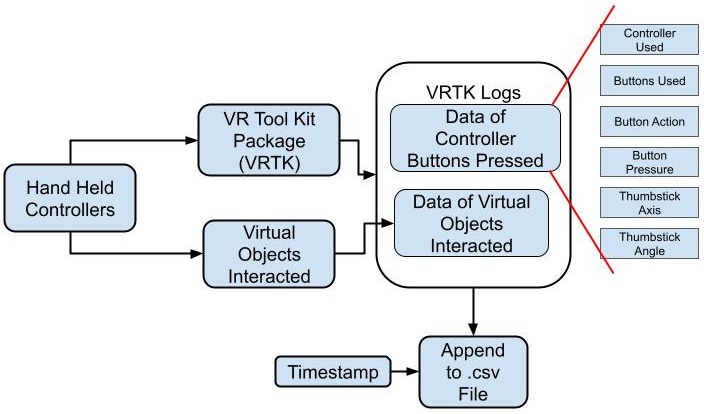

The main work completed in our research so far is as follows.

1) We adopted and extended MaroonVR [9], an open-source VRLE for learning the physics of electromagnetic induction, a phenomenon in which an electromotive force (emf) is induced when a magnet is moved through a closed-loop coil. We used two scenes of MaroonVR: the Faraday’s law scene and the falling coil experiment scene. We enhanced the interactions in the Faraday’s law scene, converted the simulated falling coil experiment scene into an interactive scene, and added an embodiment-integrated learning scene to the environment. In the embodiment scene, the learners take the perspective of the magnet, and the emf is induced by their walking rather than by dragging the magnet as in the other two scenes. These modifications make the VRLE more suitable for experiential learning [11] and embodied learning [5]. The learners feel a haptic vibration when the emf is induced as the magnet is dragged in and out of the coil. The emf is also plotted in real time on the virtual graph in the VRLE.

2) We developed an IBD collection mechanism that logs all of the learners’ interaction behavior in the VRLE in real time along with a timestamp. The mechanism works in two steps: i) it creates a report folder in which the data file is saved in .csv format, and ii) it appends the log data to the created .csv report file, as shown in Figure 1. More details about the collected IBD are given in Section 3.

3) We deployed the IBD collection mechanism in MaroonVR, conducted a study with 14 undergraduate engineering students, and collected the IBD.

3. INTERACTION BEHAVIORAL DATA

We use the Oculus Quest 2 from Meta, an immersive VR system in which the learners view the VRLE through a head-mounted display (HMD) and interact with the objects in it using the buttons of the handheld controllers (HHC). The interactive actions performed on the virtual objects of the VRLE with the various HHC buttons constitute the interaction behavioral data (IBD), which is discussed in the following sub-sections.

3.1 Interaction Behavioral Data Logger

The IBD collection mechanism deployed in the VRLE collects information about the interactions made through the HHC buttons, the virtual objects with which the interactions happen, and the timestamp. Because we use the Unity game engine to modify and program the interactions in the VRLE, we wrote a C# script that creates a folder on the desktop computer (to which the HMD is tethered) in which all the interaction behavior data is logged in a .csv file. We use the Virtual Reality Toolkit (VRTK) package for Unity to capture all the actions performed on the HHC buttons. We wrote a second C# script in Unity that appends to the .csv file information about the virtual objects with which the interaction happened. The timestamp is recorded in a separate column of each newly appended row. The IBD collection mechanism is shown in Figure 1.

3.2 Buttons and Interactions

The HHC buttons involved in the interactions are the trigger buttons, grip buttons, thumbstick, primary buttons, and secondary buttons. The trigger buttons are generally used to interact with virtual interfaces such as interface buttons and interface sliders. In MaroonVR, the trigger button operates the interface buttons that change the number of turns in the coil and the diameter of the area enclosed by the coil. The grip buttons are generally used to grab, drag, and drop or throw virtual objects. In MaroonVR, the grip button is used to interact with virtual objects such as magnets, iron bars, and door handles. The thumbstick on the HHC is used to teleport from one place to another within the 3D world without physically moving in the real world. The primary and secondary buttons are used to switch to and back from the embodiment scene in MaroonVR, respectively. The HHC buttons and the interactive actions performed with them are listed in Table 1.

Table 1: HHC buttons, the interactive actions performed with them, and the virtual objects interacted with.

| Controller Buttons | Interactive Actions | Virtual Objects Interacted |
|---|---|---|
| Trigger | Select, Unselect, and Change values | Virtual user interfaces such as virtual buttons to select between different coil turns, different coil diameters, play/pause buttons, and sliders |
| Grip | Grab, Drag, and Drop | Magnet, Iron bar, door handles, Perspective scene |
| Thumbstick | Teleport | - |
| Primary Button | Switch to embodiment scene | - |
| Secondary Button | Switch back from embodiment scene | - |

3.3 Action Logs

The interactive actions performed with the various HHC buttons listed in Table 1 are logged by the IBD logger. The log records the HHC used (left or right), the HHC button used (see Table 1), the button action (pressed, released, clicked, etc.), the button pressure (a value between 0 and 1), the thumbstick axis (x and y coordinates), the thumbstick angle (between 0° and 360°), the object interacted with (see Table 1), and the timestamp, each in a separate column of the .csv report file. The actions performed by the learners can be identified from the button, button action, and object columns of the log. The actions identified in this way, namely reading instruction, coil interaction, magnet interaction, iron bar interaction, scene switching, and non-interactive actions, are described in Table 2.

Table 2: Actions identified from the IBD logger.

| Actions | Description |
|---|---|
| Reading instruction | Reading the instructions before starting the interactions |
| Coil interaction | Changing the parameters of the coil such as number of turns and diameter |
| Magnet interaction | Grabbing, dragging, and dropping of the magnet present in Faraday’s law experiment lab, and falling coil experiment lab |
| Iron bar interaction | Grabbing, dragging, and dropping of the iron bar present in falling coil experiment lab |
| Scene switching | Moving from one scene to another scene among three different scenes |
| Non-interactive actions | The learners look and walk around in the VRLE rather than interacting with virtual objects |
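To make the identification of actions from the button and object columns concrete, the following sketch maps a single log row to one of the action labels above. The matching rules and the exact strings are assumptions for illustration; the text does not state how the authors identify reading instruction or non-interactive actions, so rows that match no rule are simply returned as unclassified.

```python
def classify(button: str, obj: str) -> str:
    """Map one log row (button used, object interacted with) to an action label.

    The rules below are hypothetical and only mirror Tables 1 and 2 loosely.
    """
    button = button.strip().lower()
    obj = obj.strip().lower()

    if button == "trigger" and "coil" in obj:
        # Trigger clicks on the coil-turn or coil-diameter interface buttons.
        return "Coil interaction"
    if button == "grip" and obj == "magnet":
        return "Magnet interaction"
    if button == "grip" and obj == "iron bar":
        return "Iron bar interaction"
    if button == "grip" and obj == "door handle":
        # Door handles lead from one scene to another.
        return "Scene switching"
    if button in ("primary button", "secondary button"):
        # These buttons switch to and back from the embodiment scene.
        return "Scene switching"
    return "Unclassified"
```

Keeping the rules in one small function makes it easy to audit how raw rows turn into the action labels used in the later analysis.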

3.4 Experimentation

We conducted a study with 14 participants, all undergraduate engineering students from a non-electrical background. Data on the participants’ self-efficacy and self-regulated learning were collected through the respective questionnaires. A pre-test was conducted to assess the participants’ prior knowledge of electromagnetic induction. The participants then played a VR game called “First Steps” for 15 minutes to familiarize themselves with the VR system. Next, they interacted with the MaroonVR VRLE for 30 minutes while the IBD logger automatically collected their interaction behavior data. After the VR intervention, a post-test was conducted to assess the learning outcome. The participants then answered a series of self-reported questionnaires on learners’ experience (M=6.66, SD=0.47) and enjoyment (M=5.22, SD=0.48), both on a 7-point Likert scale, and on VR engagement (M=4.21, SD=1.18) on a 5-point Likert scale. No participant reported motion sickness or nausea during or after the VR intervention, as the movement of the participants in the virtual world and the real world is synchronized.

Table 3: Frequency and duration of the actions performed by all 14 participants during the VR intervention.

| Actions | Frequency (number of occurrences) | Duration (seconds) |
|---|---|---|
| Reading instruction | 14 | 4160 |
| Coil interaction | 67 | - |
| Magnet interaction | 118 | 1397 |
| Iron bar interaction | 30 | 97 |
| Scene switching | 29 | - |
| Non-interactive actions | - | 17255 |

Table 3 shows the behavior of the participants in terms of the actions performed, how often each action occurred, and how long the participants spent on each action during the VR intervention. The entries show the behavior of all 14 participants collectively. Coil interaction consists of varying the coil parameters, such as turns and diameter, by clicking with the trigger button of the HHC. Similarly, scene switching consists of clicking the door handle to enter another scene. Because these actions are momentary clicks, we report their number of occurrences rather than their duration. In contrast, for non-interactive actions such as walking and looking around the environment, we report duration rather than frequency.
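As an illustration of how frequency and duration could be derived from the action logs, the sketch below counts every "pressed" event as one occurrence and sums the time between each "pressed" and the matching "released" event. The event tuple layout and the function name `summarize` are assumptions; the authors’ actual aggregation procedure is not described in the text.

```python
from collections import defaultdict


def summarize(events):
    """Return (frequency, duration) dictionaries keyed by action label.

    events: iterable of (time_in_seconds, action, button_action) tuples,
    sorted by time, where button_action is "pressed" or "released".
    """
    frequency = defaultdict(int)
    duration = defaultdict(float)
    started = {}  # action -> time of the press that has not been released yet

    for time_s, action, button_action in events:
        if button_action == "pressed":
            frequency[action] += 1
            started[action] = time_s
        elif button_action == "released" and action in started:
            duration[action] += time_s - started.pop(action)

    return dict(frequency), dict(duration)


# Hypothetical events for one learner, not data from the study.
example_events = [
    (0.0, "Magnet interaction", "pressed"),
    (2.5, "Magnet interaction", "released"),
    (3.0, "Coil interaction", "pressed"),
    (3.1, "Coil interaction", "released"),
    (5.0, "Magnet interaction", "pressed"),
    (6.0, "Magnet interaction", "released"),
]
example_frequency, example_duration = summarize(example_events)
```

For click-like actions such as coil interaction, only the frequency is meaningful, which matches the choice made in Table 3.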

4. LEARNING PROCESSES ANALYSIS

We have developed an IBD collection mechanism, deployed it in a VRLE, conducted a study with 14 participants, and identified the actions performed by the learners from their interaction behavior. Throughout this work we drew on experiential learning theory, which has four components: concrete experience, reflective observation, abstract conceptualization, and active experimentation [11]. Existing studies on learning processes in VR have considered only cognitive and affective factors [4], which are aspects of the first three components of experiential learning theory. Active experimentation, which can be assessed through interaction behavior, has not been considered in understanding the learning processes in VR. Therefore, we will extract the temporal and spatial features of the actions identified from the collected IBD and apply pattern mining to find the behavioral patterns of learners that lead to higher performance. This would help us understand how learners learn in a VRLE through the lens of interaction behavior. We also propose to use the results to model the learners’ proficiency, and to develop an algorithm that provides personalized adaptive feedback, scaffolds, and learning content based on the learners’ interaction behavior and proficiency.
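The pattern mining step is not yet specified in detail. As one possible starting point, the sketch below counts contiguous action n-grams and keeps those that appear in the sequences of at least `min_support` learners. This is a deliberately simple stand-in for a full sequential pattern mining algorithm, and the function name, parameters, and example sequences are all hypothetical.

```python
from collections import Counter


def frequent_patterns(sequences, n=2, min_support=2):
    """Find contiguous action n-grams shared by at least min_support learners.

    sequences: one list of action labels per learner, in temporal order.
    Support counts learners, not occurrences, so repeats within one
    learner's sequence are counted once.
    """
    support = Counter()
    for seq in sequences:
        grams = {tuple(seq[i:i + n]) for i in range(len(seq) - n + 1)}
        support.update(grams)
    return {gram: count for gram, count in support.items() if count >= min_support}


# Hypothetical action sequences for three learners, not data from the study.
example_sequences = [
    ["Reading instruction", "Coil interaction", "Magnet interaction"],
    ["Coil interaction", "Magnet interaction", "Magnet interaction"],
    ["Reading instruction", "Magnet interaction"],
]
example_patterns = frequent_patterns(example_sequences, n=2, min_support=2)
```

Running the same function separately on high-performing and low-performing learners, and comparing the resulting patterns, would be one way to relate behavior to performance.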

5. CONCLUSION

We conclude that the IBD collection mechanism developed and deployed in the VRLE is able to log all the interactive actions performed by the learners. However, the experiment was conducted with a small sample of 14 participants, so it needs to be repeated with a larger sample to establish the generalizability of the study. Because the learners are expected to look at the virtual graph while they interact with the magnet, information about where they are looking also needs to be logged. The current data collection mechanism cannot provide this information. Hence, further work is required to verify that the learners look at the intended area while they perform tasks in the VRLE and to log the relevant information. We will use the logged IBD to explore the learning processes in VR. In the current experiment, the learners interacted with the virtual objects and viewed the virtual graph to observe the corresponding changes in the voltage level. In the future, the VRLE will be modified to include task-based challenges, such as lighting electric bulbs of different wattage ratings by interacting with virtual objects. We also propose to develop a mechanism that provides personalized feedback, scaffolds, and VR learning content to the learners based on their behavior in the VRLE.

During the doctoral consortium, we expect to receive suggestions and feedback on the current progress of our research. We specifically seek recommendations on establishing the connection between the behavior of the learners and the learning outcome, and on personalizing the learning system in the VR context.

6. REFERENCES

[1] P. Albus, A. Vogt, and T. Seufert. Signaling in virtual reality influences learning outcome and cognitive load. Computers & Education, 166:104154, 2021.

[2] B. Chavez and S. Bayona. Virtual reality in the learning process. In World Conference on Information Systems and Technologies, pages 1345–1356. Springer, 2018.

[3] Z. Feng, V. A. González, R. Amor, R. Lovreglio, and G. Cabrera-Guerrero. Immersive virtual reality serious games for evacuation training and research: A systematic literature review. Computers & Education, 127:252–266, 2018.

[4] G. Makransky and G. B. Petersen. Investigating the process of learning with desktop virtual reality: A structural equation modeling approach. Computers & Education, 134:15–30, 2019.

[5] G. Makransky and G. B. Petersen. The cognitive affective model of immersive learning (CAMIL): A theoretical research-based model of learning in immersive virtual reality. Educational Psychology Review, 33(3):937–958, 2021.

[6] G. Makransky, T. S. Terkildsen, and R. E. Mayer. Adding immersive virtual reality to a science lab simulation causes more presence but less learning. Learning and Instruction, 60:225–236, 2019.

[7] E. Olmos-Raya, J. Ferreira-Cavalcanti, M. Contero, M. C. Castellanos, I. A. C. Giglioli, and M. Alcañiz. Mobile virtual reality as an educational platform: A pilot study on the impact of immersion and positive emotion induction in the learning process. EURASIA Journal of Mathematics, Science and Technology Education, 14(6):2045–2057, 2018.

[8] R. Pathan, R. Rajendran, and S. Murthy. Mechanism to capture learner’s interaction in VR-based learning environment: design and application. Smart Learning Environments, 7(1):1–15, 2020.

[9] J. Pirker, M. Holly, I. Lesjak, J. Kopf, and C. Gütl. MaroonVR: An interactive and immersive virtual reality physics laboratory. In Learning in a Digital World, pages 213–238. Springer, 2019.

[10] J. Radianti, T. A. Majchrzak, J. Fromm, and I. Wohlgenannt. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Computers & Education, 147:103778, 2020.

[11] G. Sankaranarayanan, L. Wooley, D. Hogg, D. Dorozhkin, J. Olasky, S. Chauhan, J. W. Fleshman, S. De, D. Scott, and D. B. Jones. Immersive virtual reality-based training improves response in a simulated operating room fire scenario. Surgical Endoscopy, 32(8):3439–3449, 2018.

[12] L. Sharma, R. Jin, B. Prabhakaran, and M. Gans. LearnDNA: An interactive VR application for learning DNA structure. In Proceedings of the 3rd International Workshop on Interactive and Spatial Computing, pages 80–87, 2018.

[13] J. Wade, L. Zhang, D. Bian, J. Fan, A. Swanson, A. Weitlauf, M. Sarkar, Z. Warren, and N. Sarkar. A gaze-contingent adaptive virtual reality driving environment for intervention in individuals with autism spectrum disorders. ACM Transactions on Interactive Intelligent Systems (TiiS), 6(1):1–23, 2016.

[14] L. Yang, J. Huang, T. Feng, W. Hong-An, and D. Guo-Zhong. Gesture interaction in virtual reality. Virtual Reality & Intelligent Hardware, 1(1):84–112, 2019.

[15] H. Zhang. Head-mounted display-based intuitive virtual reality training system for the mining industry. International Journal of Mining Science and Technology, 27(4):717–722, 2017.

© 2023 Copyright is held by the author(s). This work is distributed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.